Why Google Is Consolidating DeepMind and AI Research: Implications for the Future of Gemini

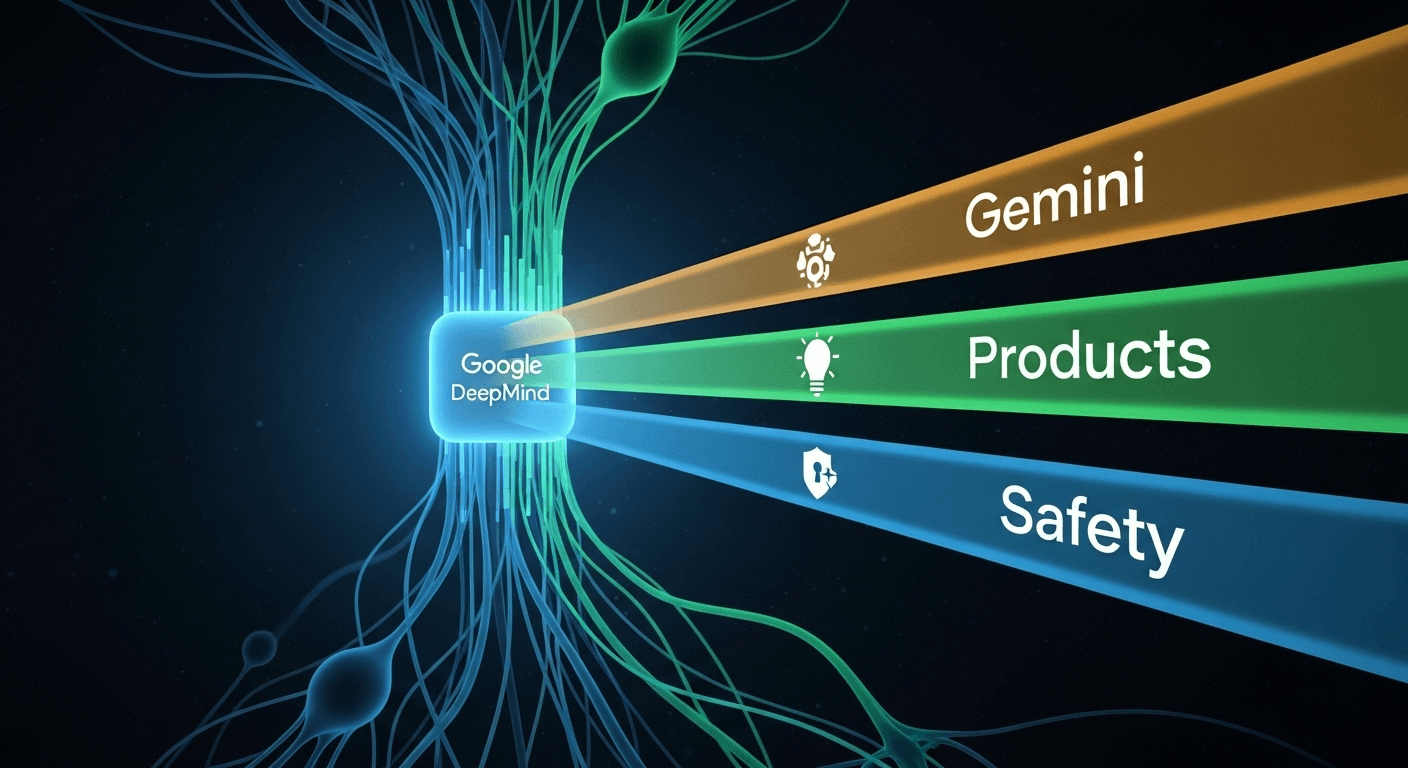

Google is sharpening its focus on artificial intelligence by integrating more of its AI research with Google DeepMind. For those following the swift evolution of AI, this move is more than just a corporate restructuring; it signals Google’s intent to accelerate the development of cutting-edge models like Gemini, translate research breakthroughs into market-ready products faster, and effectively compete with rivals in a space where speed, safety, and scalability are critical.

What Has Changed?

Google has spent several years streamlining its AI efforts. In April 2023, the company created Google DeepMind by merging DeepMind with the Brain team from Google Research. The goal was to accelerate work on general-purpose AI systems and bring scientific advances closer to products (Google blog). In April 2024, Google moved additional model-building research and engineering teams into Google DeepMind, uniting its leading model research under a single leadership structure headed by Demis Hassabis. Google Research, meanwhile, continues to focus on foundational computer science domains such as algorithms, privacy and security, responsible AI practices, and quantum computing (Google; Reuters overview).

This consolidation brings the teams focused on large-scale model training, inference optimization, and productization for consumer and enterprise AI into closer alignment within Google DeepMind. The company's stated aim is to shorten the path from research breakthroughs to dependable products while applying consistent oversight and safety practices.

Why Consolidate Now?

The short answer: more speed and less duplication. The longer answer is that the AI landscape has changed rapidly. Frontier model development is compute-intensive, and it also demands cross-disciplinary work tightly coupled to product workflows. Putting leadership for frontier models under one roof makes it simpler to prioritize compute, share infrastructure, and coordinate safety evaluations across research and product teams.

- Model scaling demands: Training and deploying state-of-the-art multimodal systems requires massive computational power, specialized hardware, and expert systems engineering. Centralization can reduce friction and cost.

- Product velocity: Google aims for research breakthroughs to be seamlessly integrated into products like Search, Workspace, Android, and Cloud. A unified roadmap minimizes handoffs.

- Safety and reliability: Consistent safety evaluations, red-teaming, and deployment standards can be more effectively implemented within a cohesive organization.

- Competitive pressure: Rivals are iterating rapidly on model releases and AI features. Consolidation is meant to help Google ship improvements quickly without compromising quality (Google AI updates).

Implications for Gemini

Gemini is Google's family of multimodal models that handle text, code, images, audio, and video. Since its launch, Google has expanded the Gemini family, extended context length, and reduced latency to serve diverse enterprise and consumer needs (Google I/O 2024). The consolidation under Google DeepMind is designed to speed up progress in several key areas:

- Faster iteration: Integrated research and engineering processes expedite the transition of new capabilities from the lab into Gemini updates.

- Improved system performance: Closer collaboration with infrastructure teams is expected to enhance training efficiency and reduce inference costs, making models more responsive and affordable for developers.

- Consistent safety practices: Centralized evaluations and established policy guidelines aim to minimize regressions and help ensure that new features meet internal safety standards.

In 2024, Google previewed significant advances in Gemini's reasoning and multimodality, including long-context models and variants optimized for speed and cost (Google I/O 2024). If the consolidation works as intended, users should see faster rollouts, better performance, and clearer product tiers for developers and businesses.

Google Research’s Role

Google Research continues to play a vital role in the company’s innovation strategy. While the bulk of frontier AI work moves under Google DeepMind, Google Research will still focus on long-term scientific advancements and fundamental technologies that benefit the entire ecosystem. Areas of focus include:

- Algorithms and theoretical foundations

- Privacy, security, and responsible AI techniques

- Quantum computing

- Systems and hardware co-design

- Human-computer interaction and applied machine learning

This division of labor follows a model commonly seen in large research organizations: consolidating near-term, product-facing AI work while maintaining a strong research arm focused on foundational breakthroughs that may take years to realize (Google Research).

Leadership, Teams, and Accountability

Google DeepMind is led by its CEO, Demis Hassabis, while Jeff Dean serves as Chief Scientist for Google DeepMind and Google Research. The organization now includes teams dedicated to large-scale training, inference optimization, and safety evaluations for the models that power Google's products. Google Research, meanwhile, continues to publish extensively and engage with the scientific community while contributing foundational technologies to the company's AI initiatives (Google).

Key Benefits of Consolidation

- End-to-end alignment: A unified roadmap across data, compute, modeling, safety, and product integration.

- Compute efficiency: Improved scheduling across TPU and GPU clusters, shared tools, and coordinated training runs.

- Reliability: Unified red-teaming and evaluation frameworks should make releases more robust.

- Talent density: Clustering specialists across research and engineering can reduce duplication and foster peer review.

Potential Risks and Trade-offs

- Speed vs. safety: Rapid development cycles can raise the risk of regressions or unexpected behaviors. Strong governance and independent evaluations are essential (Google AI Responsibility).

- Research culture: The consolidation needs to preserve a thriving publication environment and academic collaboration to attract and retain top researchers and advance the field.

- Product pressure: Aligning with product timelines could constrain exploratory research. Balancing long-term inquiries with immediate delivery is critical.

- Organizational clarity: Clearly defining ownership and interfaces between Google DeepMind and Google Research will affect how effectively ideas and personnel can move across boundaries.

Implications for Users and Customers

Everyday users can expect enhanced AI features in Google products like Search, Workspace, Android, and YouTube, with a stronger emphasis on reliability and safety protocols. Developers and businesses should anticipate clearer product tiers, improved documentation, and more predictable performance when working with Gemini APIs and Google Cloud offerings (Google Cloud AI).

For enterprises, consolidated research pipelines should improve:

- Latency and costs for applications relying heavily on inference

- Support for long-context and multimodal workloads

- Tools for observability and governance to ensure safe deployment

- Management and versioning processes throughout the model lifecycle

Responsible AI and Governance

Google has long emphasized responsible AI, publishing AI Principles and evaluation frameworks. As AI work centralizes under Google DeepMind, it will be important to watch how accountability measures evolve. In 2024, reports noted changes in how Google's responsible AI teams are integrated with its product divisions, reflecting an industry-wide trend toward embedding safety within product development rather than isolating it (WIRED; AI Principles).

Embedding safety in engineering processes can make it more effective, particularly when backed by strong governance, incident response structures, and transparency. Key areas to monitor include model evaluations and reporting, partnerships for external red-teaming, and clear updates on known limitations.

Accelerating Infrastructure Advances Through Consolidation

AI is as much about systems engineering as it is about algorithms. By consolidating AI research within Google DeepMind, the organization can tighten the feedback loop with infrastructure teams focusing on TPUs, model parallelism, schedulers, and distributed inference. Google has discussed recent TPU generations and large-scale training clusters designed for high-throughput, cost-effective model training and serving (Google Cloud TPU). As the organization comes together, expect more coordinated advancements in training efficiency, memory optimization, and inference latency.

Industry Trends Toward Unified AI Organizations

Google's move is not unique. Across the tech industry, AI leadership increasingly favors unified organizations that combine research with productization. The reasoning is consistent: concentrate talent, reduce duplicated effort, and systematize safety practices. The potential downside is a narrower research scope if incentives skew too heavily toward short-term output. The best results usually depend on a robust internal research culture and strong ties with academia.

What to Watch Next

- Gemini roadmap: Expect more rapid and stable quality improvements in reasoning, tool usage, and multimodal understanding, especially for long-context applications.

- Enterprise AI: Keep an eye out for clearer service-level agreements (SLAs), strengthened governance tools, and cost efficiencies in Cloud AI offerings.

- Safety transparency: Anticipate more published evaluations, benchmarks, and transparency notes post-release.

- Research output: Conference publications and open-source tools will show how Google balances leading-edge research against practical product delivery.

Bottom Line

Google's consolidation of AI research under Google DeepMind aims to increase speed while maintaining discipline. By aligning leadership, compute, and safety under one structure, the company aims to deliver more capable models and more reliable products. The true measure of success will be whether the new structure preserves the spirit of inquiry and openness of a world-class research organization while meeting the practical demands of production AI.

FAQs

What is Google DeepMind?

Google DeepMind is Google's frontier AI research and engineering division. It was formed in 2023 by merging DeepMind with the Brain team from Google Research to accelerate the development of general-purpose models and AI systems (Google).

How does this consolidation impact Google Research?

Google Research continues to emphasize foundational computer science and long-term research, including algorithms, privacy and security, responsible AI methods, quantum computing, and systems research. Much of the advanced model development has transitioned to Google DeepMind (Google Research).

What does this mean for Gemini users?

Users can anticipate more consistent updates and enhanced reliability as the research-to-product pathways become more streamlined. Expect improvements in reasoning capabilities, multimodality, and long-context handling to emerge more regularly across Google products and developer APIs (Google I/O 2024).

How is Google addressing AI safety amid faster releases?

Google has published AI Principles and commits to thorough evaluations, red-teaming, and safety research. The consolidation aims to apply these standards consistently, but transparency and external scrutiny remain crucial for earning user trust (AI Principles).

Why is centralizing compute and research important?

Training and deploying cutting-edge models is complex and costly. By centralizing leadership and infrastructure, Google can prioritize compute, share tools, and improve overall efficiency, which should lead to faster and more dependable model updates for users and developers.

Sources

- Google: Bringing together our AI teams under Google DeepMind (April 2023)

- Google: Gemini updates at I/O 2024

- Google Research: Overview of Research Areas

- Google: AI Principles

- WIRED: Changes to Google’s responsible AI teams (2024 coverage)

- Google Cloud AI: Products and Documentation

- Google Cloud TPU: Infrastructure for Training and Inference

Thank You for Reading this Blog and See You Soon! 🙏 👋
