Why BERT and GPT Are Changing NLP


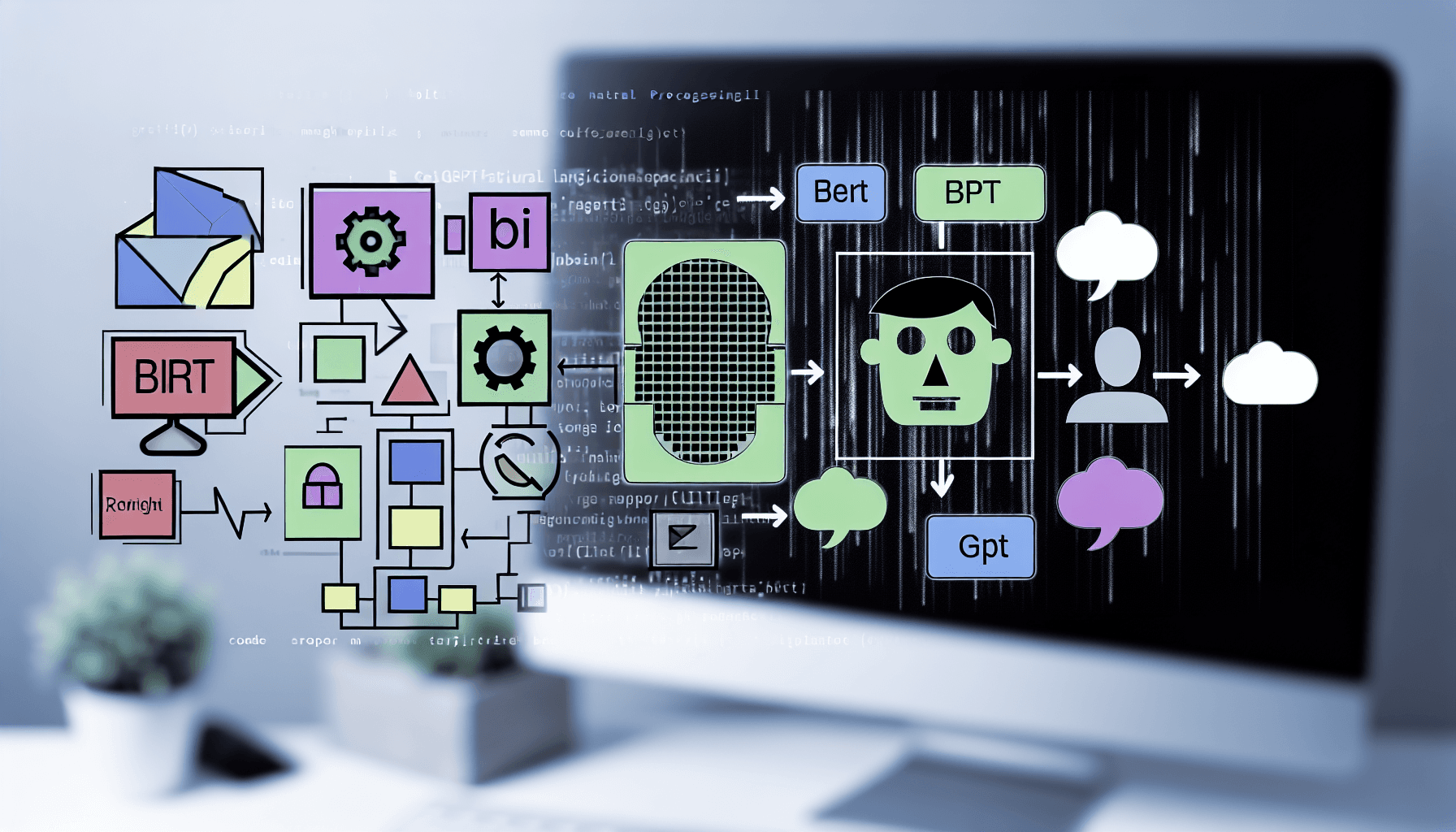

In Natural Language Processing (NLP), two model families, BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), stand out as major advances. They do more than improve the accuracy of language understanding; they are changing how machines interact with human language, with implications across sectors including technology, healthcare, and customer service.


This article looks at why BERT and GPT are pivotal in NLP, covering their architectures, applications, and broader impact. Understanding these models helps us anticipate how future interactions with technology will be reshaped.

## Understanding the Basics of NLP

Natural Language Processing is a branch of artificial intelligence that helps computers understand, interpret, and manipulate human language. NLP bridges computers and humans, enabling machines to process language as text or voice data and to infer its meaning, including sentiment and intent.


Historically, NLP sought to fill a gap between human communication and digital data processing. Over the past decades, various models and approaches have been developed, each improving over the last in terms of sophistication and accuracy. Today, NLP encompasses a range of tasks from translation and sentiment analysis to chatbots and voice-operated GPS systems.

## The Rise of Transformers: BERT and GPT

The introduction of transformer models marked a significant innovation in NLP. Earlier recurrent models processed text sequentially, one token at a time; transformers use self-attention to process all tokens in a sequence in parallel, which significantly speeds up training. BERT and GPT both build on the transformer architecture but use different parts of it: BERT uses the encoder stack, while GPT uses the decoder stack.
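
To make the idea of attending over a whole sequence more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: queries, keys, and values are the input vectors themselves (real transformers apply learned projection matrices first), and the names `softmax`, `self_attention`, and `tokens` are illustrative, not taken from any library. The loop runs one position at a time here, but each position's output depends only on the original inputs, which is why real implementations can compute all positions at once.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified self-attention: inputs act as queries, keys, and values."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score this position against every position in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The output is a weighted average of all input vectors.
        outputs.append(
            [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        )
    return outputs

# Three toy 2-dimensional "token" vectors (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = self_attention(tokens)
```

Each entry of `contextual` mixes information from every token in the sequence, with no dependence on the output of a previous step.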


BERT (Bidirectional Encoder Representations from Transformers) was developed by Google and is designed to pre-train deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers. As a result, BERT can consider the full context of a word by looking at the words that come before and after it, which makes it highly effective for tasks like question answering and language inference.
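
The difference between bidirectional and left-to-right context can be sketched in a few lines. This is only an illustration of which positions a token is allowed to see, not an implementation of either model; the helper `visible_positions` and the example sentence are hypothetical.

```python
def visible_positions(length, position, bidirectional):
    """Return the positions a token at `position` may attend to."""
    if bidirectional:
        # Encoder style: every token in the sentence is visible.
        return list(range(length))
    # Left-to-right style: only the token itself and earlier tokens.
    return list(range(position + 1))

sentence = ["the", "bank", "of", "the", "river"]
target = 1  # index of the ambiguous word "bank"

both_sides = [sentence[i] for i in visible_positions(len(sentence), target, True)]
left_only = [sentence[i] for i in visible_positions(len(sentence), target, False)]

print(both_sides)  # ['the', 'bank', 'of', 'the', 'river']
print(left_only)   # ['the', 'bank']
```

With context on both sides, the word "river" is available to help interpret "bank"; with left-only context it is not.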


GPT (Generative Pre-trained Transformer), on the other hand, takes a different approach. Developed by OpenAI, it is pre-trained without labeled data to predict the next token, and it excels at generating text. GPT reads text left to right, so each token is predicted from the tokens before it, and it can generate coherent, contextually relevant text from a given prompt. This makes it particularly useful for text generation, chatbots, and more advanced conversational AI systems.
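
Left-to-right generation boils down to a loop: predict one token from the text so far, append it, and repeat. The sketch below shows that loop with a hypothetical lookup table standing in for the model. A real GPT model conditions on the entire prefix and samples from a learned probability distribution; the names `NEXT_TOKEN`, `toy_next_token`, and `generate` are invented for illustration.

```python
# Hypothetical next-token table standing in for a trained language model.
NEXT_TOKEN = {
    "the": "model",
    "model": "writes",
    "writes": "text",
    "text": "<end>",
}

def toy_next_token(prefix):
    """Pick the next token given the text so far (toy: last token only)."""
    if not prefix:
        return "<end>"
    return NEXT_TOKEN.get(prefix[-1], "<end>")

def generate(prompt, max_new_tokens=10):
    """Autoregressive loop: append one predicted token at a time."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        next_token = toy_next_token(tokens)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens

generated = generate(["the"])
print(" ".join(generated))  # the model writes text
```

Every new token becomes part of the input for the next prediction, which is why a prompt steers everything generated after it.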

## Impact on Industries

The implications of these advances are widespread. In healthcare, for instance, NLP systems can process large volumes of medical text, support clinical decision-making, and enhance patient care. In customer service, chatbots built on BERT- and GPT-style models provide support that is increasingly difficult to distinguish from that of human agents, offering responses that are quick, relevant, and personalized.

## Examples of BERT and GPT in Action

Several real-world applications of BERT and GPT demonstrate their versatility. For example, Google uses BERT to better understand user queries in its search engine. Similarly, GPT-3 has been used to write articles, poetry, and even computer code, showcasing its ability to generate fluent, human-like text.

## Challenges and Ethical Considerations

Despite the capabilities of BERT and GPT, their use raises challenges and ethical concerns. One major concern is the potential for generating misleading or fabricated text that is difficult to distinguish from human-written content. There’s also the issue of bias, as these models can inadvertently learn and perpetuate biases present in their training data.


To mitigate these risks, continuous research and development in the field of AI ethics and governance are essential. Developers and users of technologies like BERT and GPT must be vigilant about the potential misuses of these tools and strive for improvements that ensure fairness and accuracy.

## The Future of NLP with BERT and GPT

As we look ahead, the future of NLP with BERT and GPT appears both promising and challenging. As these models become more sophisticated and integrated into various systems, their influence on our daily lives and interactions with technology will only grow. The continued refinement of these models and the exploration of new applications are likely to pave the way for more personalized, responsive, and intuitive AI systems.


The importance of understanding and leveraging BERT and GPT is undeniable for anyone involved in technology and AI. By keeping abreast of these developments, individuals and businesses can better prepare for a future where AI and human communication are seamlessly intertwined.


Thank You for Reading this Blog and See You Soon! 🙏 👋
