What I Wish I Knew Before Learning AI: A Practical Guide For 2025

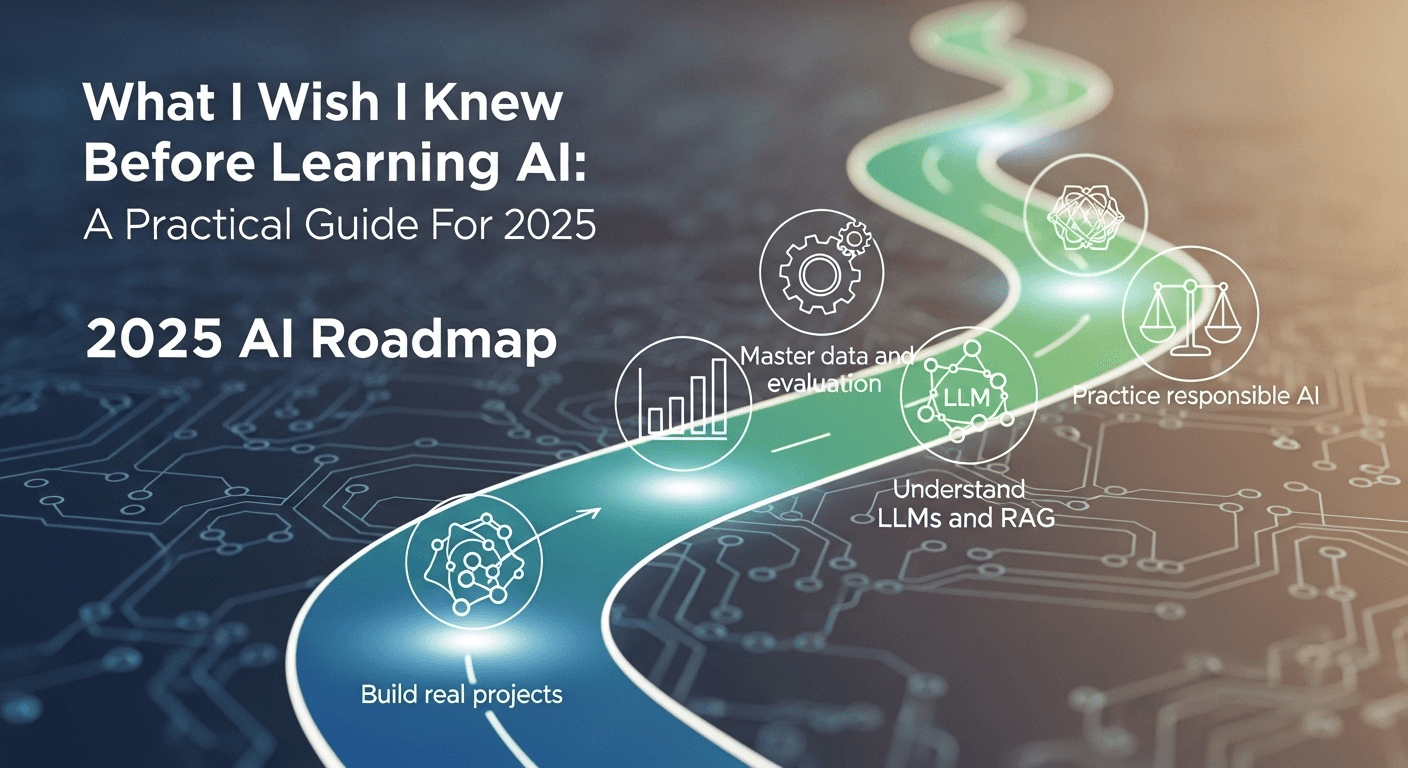


If you’re intrigued by artificial intelligence but unsure where to begin, this guide is just for you. It encapsulates the essential lessons I wish I had learned before diving into AI: what truly matters, what doesn’t, how to sidestep common pitfalls, and how to cultivate real skills and create projects that have real-world applicability.

Why Learn AI Now?

AI has transitioned from the confines of research labs into everyday applications, ranging from writing assistants and code generators to medical imaging and logistics optimization. While the field is rapidly evolving, the core principles remain unchanged: solid data, clear problem definitions, rigorous evaluations, and responsible usage. By grounding yourself in these basics, you can navigate through the latest tools and trends without succumbing to the hype.

Recent reports indicate a surge in adoption and investment, highlighting an increasing demand for individuals skilled in data management, evaluation, and risk assessment—not just flashy demonstrations. For insights on trends in model sizes, computational power, and industry applications, refer to the Stanford AI Index 2024.

Start with a Clear Mental Model of AI

AI vs. Machine Learning vs. Deep Learning

It’s helpful to clarify these terms:

- Artificial Intelligence (AI): The overarching goal of enabling machines to perform tasks that typically require human intelligence.

- Machine Learning (ML): A subset of AI that focuses on discovering patterns in data to make predictions or decisions.

- Deep Learning (DL): A subset of ML that uses multi-layer neural networks, particularly effective for tasks involving vision, language, and speech.

Transformers, the architecture that powers modern large language models (LLMs), originated from the 2017 paper “Attention Is All You Need” (Vaswani et al., 2017). While you don’t need to master every equation from day one, having a solid roadmap will help you find your way.

Hype vs. Reality

- Hype: The model architecture is the most important factor. Reality: The quality of data and the problem framing often matter more than any architecture tweaks.

- Hype: You need a massive GPU cluster. Reality: Most essential skills can be learned on a CPU, and low-cost GPUs are available when needed.

- Hype: LLMs work like magic. Reality: They are powerful pattern recognizers, but they hallucinate, carry bias, and can be brittle. Careful evaluation and safety measures are critical (HELM).

The Skills That Matter (and How Much of Each)

Essential Math

No need for a PhD to start. However, brushing up on some math will simplify your journey:

- Linear Algebra: Familiarity with vectors, matrices, dot products, and eigenvalues is crucial for grasping embeddings and optimization (Goodfellow et al., Deep Learning).

- Probability and Statistics: Knowledge of distributions, expectations, variance, conditional probability, and hypothesis testing is vital for evaluation and understanding uncertainty.

- Basic Calculus: A grasp of derivatives and gradients will clarify training processes and backpropagation.

Think of math as a tool to support your projects. When questions arise, refer back to these concepts. The Stanford CS229 notes are a user-friendly reference.
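To make the calculus point concrete, here is a minimal sketch of gradient descent fitting a one-variable linear model under mean squared error. The function name `fit_line`, the toy data, and the learning rate are illustrative assumptions, not taken from any library or course.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current predictions.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error**2).
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Move against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

Deep learning frameworks compute these gradients automatically through backpropagation, but the update step follows the same idea.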

Programming and Tooling Skills

- Python: Familiarize yourself with core syntax and libraries like NumPy, pandas, Matplotlib, and Seaborn.

- Machine Learning Libraries: Get to know scikit-learn for classical ML (scikit-learn); and PyTorch or TensorFlow for deep learning (PyTorch, TensorFlow).

- Notebooks and IDEs: Start with Jupyter and VS Code (VS Code). Use virtual environments to maintain clean dependencies.

- Version Control: Use Git for code, along with lightweight trackers for experiments (MLflow or Weights & Biases) (MLflow, Weights & Biases).

Focus on Data Literacy Rather Than Model Obsession

Data collection, cleaning, and labeling often consume the most project time. Learn to prevent leakage, create effective splits, and measure uncertainty. The scikit-learn documentation on cross-validation is an excellent reference (Model evaluation and cross-validation). Also, Kaggle provides a valuable guide on avoiding data leakage (Kaggle).
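As a rough illustration of leakage prevention, the sketch below splits first and then learns scaling statistics from the training portion only. The helper names (`train_test_split`, `fit_scaler`, `transform`) and the toy data are assumptions written for this example in plain Python; in scikit-learn the same idea is usually expressed by putting preprocessing inside a `Pipeline`.

```python
import random
import statistics


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split it into train and test lists."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_scaler(values):
    """Learn mean and standard deviation from the given values."""
    return statistics.mean(values), statistics.pstdev(values)


def transform(values, mean, std):
    """Apply previously learned statistics without refitting."""
    return [(v - mean) / std for v in values]


values = [float(v) for v in range(1, 21)]  # toy single-feature dataset
train, test = train_test_split(values)

mean, std = fit_scaler(train)             # statistics come from train only
train_scaled = transform(train, mean, std)
test_scaled = transform(test, mean, std)  # test reuses the train statistics
print(len(train), len(test))
```

Fitting the scaler on the full dataset before splitting would let information about the test rows influence training, which is the leak this ordering avoids.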

A Step-by-Step Learning Path That Works

- Select a Use Case that Interests You: For example, classifying customer feedback, predicting inventory, or summarizing documents. A specific problem will guide your choice of tools and learning process.

- Begin with Classical ML: Learn about train-test splits, cross-validation, baselines, and feature engineering using scikit-learn. Practice with datasets from UCI or Kaggle (UCI ML Repository, Kaggle Datasets).

- Transition to Deep Learning When Necessary: Tasks like vision, speech, and modern NLP benefit from neural networks. Start with a basic convolutional network for images or a simple transformer for text. Use PyTorch tutorials to build confidence (PyTorch Tutorials).

- Understand the Basics of Transformers: Read a summary of Vaswani et al. (2017). Grasp concepts like attention, positional encodings, and the pretraining-finetuning process (Attention Is All You Need).

- Practice Evaluation: Define metrics that align with your goals (e.g., precision vs. recall). Incorporate human-in-the-loop checks and task-specific rubrics for LLMs. Refer to Stanford HELM for evaluation frameworks (HELM).

- Create Reproducible Projects: Use Git, environment files, MLflow, and clear READMEs. Keep raw and processed data separate and incorporate unit tests for data loading and metrics.

- Launch a Small Product: Package your model as a simple API or application. A Streamlit dashboard or a FastAPI endpoint can effectively demonstrate real value (Streamlit, FastAPI).

If you prefer a course-based approach, consider Andrew Ng’s classic machine learning course (now offered as an updated specialization) or fast.ai for practical deep learning (Andrew Ng ML, fast.ai).

Understanding LLMs in 2025: Essential Insights for Beginners

Pretraining and Alignment

Modern LLMs undergo two fundamental training stages:

- Pretraining: Focuses on next-token prediction using extensive text corpora, resulting in the acquisition of generalized language patterns and world knowledge.

- Alignment: Modifies general models to adhere to instructions and ensure safety through supervised finetuning and reinforcement learning from human feedback (RLHF). For more details, check out Ouyang et al., 2022 (InstructGPT).

Prompting, Finetuning, and RAG

- Prompting: Clear, focused prompts resolve a large share of use cases (60-80% is a rough rule of thumb, not a measured figure), so try prompting before anything heavier. Start simple by providing a role, task, context, and format.

- Finetuning: Useful when you have domain-specific data and require consistent tone, structure, or specialized reasoning.

- Retrieval-Augmented Generation (RAG): Keep your model updated by retrieving relevant documents and allowing the model to reference these sources. Familiarize yourself with chunking, embedding, indexing, and evaluation methods. Check the LangChain and LlamaIndex documentation for practical guidance (LangChain, LlamaIndex).
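The chunk, embed, index, retrieve, and prompt steps can be sketched in plain Python. This is a toy under stated assumptions: the `embed` function uses word counts as a stand-in for a real embedding model, the documents are invented, and none of the function names come from LangChain or LlamaIndex.

```python
import math
from collections import Counter


def chunk(text, size=12):
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text):
    """Toy 'embedding': lowercase word counts (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, index, k=1):
    """Return the k chunk texts most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, sources):
    """Assemble a prompt with role, task, context, and format."""
    context = "\n".join(f"[{i + 1}] {s}" for i, s in enumerate(sources))
    return (
        "Role: You are a careful support assistant.\n"
        "Task: Answer the question using only the sources.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Format: One short paragraph with source numbers in brackets."
    )


docs = [  # hypothetical knowledge base
    "Refunds are issued within 14 days of a return request.",
    "Standard shipping takes 3 to 5 business days.",
]
index = [(c, embed(c)) for d in docs for c in chunk(d)]
top = retrieve("how long does shipping take", index)
prompt = build_prompt("How long does shipping take?", top)
print(prompt)
```

In a real application the word-count vectors would be replaced by learned embeddings and the list scan by a vector index, but the data flow stays the same.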

Evaluating LLM Applications

In addition to accuracy, consider the following metrics:

- Groundedness: Is the answer supported by retrieved sources?

- Hallucinations: Monitor unsupported claims and implement guardrails.

- Task-Specific Quality: Use rubrics or small human evaluations. Benchmarks like MMLU and TruthfulQA reveal limitations and trade-offs (MMLU, TruthfulQA).
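A very rough groundedness check can be automated with word overlap, as in the sketch below. The `support_score` heuristic and the 0.6 threshold are assumptions chosen for illustration; overlap is a crude proxy, so treat flagged sentences as candidates for human review rather than confirmed hallucinations.

```python
import re


def tokens(text):
    """Lowercase alphanumeric tokens as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(sentence, sources):
    """Fraction of the sentence's words found in the best-matching source."""
    words = tokens(sentence)
    if not words:
        return 0.0
    return max((len(words & tokens(s)) / len(words) for s in sources), default=0.0)


def unsupported_sentences(answer, sources, threshold=0.6):
    """Return answer sentences whose support score falls below the threshold."""
    parts = re.split(r"(?<=[.!?])\s+", answer)
    sentences = [s.strip() for s in parts if s.strip()]
    return [s for s in sentences if support_score(s, sources) < threshold]


sources = ["Standard shipping takes 3 to 5 business days."]
answer = (
    "Standard shipping takes 3 to 5 business days. "
    "Express delivery is free for members."
)
flagged = unsupported_sentences(answer, sources)
print(flagged)  # ['Express delivery is free for members.']
```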

Building Real Projects the Right Way

Portfolio projects are some of the best teachers. Strive for comprehensive builds that deliver clear value, encourage data discipline, and showcase good documentation.

Project Ideas

- Text: Create a customer feedback classifier with explainability components and a simple RAG pipeline for evidence-backed responses.

- Vision: Implement lightweight defect detection for product images using a small CNN and apply data augmentation techniques.

- Time Series: Forecast weekly demand with baseline models, feature lags, and error bands.

- LLM Tooling: Develop an internal Q&A assistant that cites sources and tracks evaluation metrics.
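For the time series idea, a reasonable first step is to compare two baselines before trying anything more elaborate. The sketch below uses invented demand numbers and assumes a hypothetical four-period cycle; it covers baselines only, not lag features or error bands.

```python
def naive_forecast(history, horizon):
    """Repeat the last observed value for every future step."""
    return [history[-1]] * horizon


def seasonal_naive_forecast(history, horizon, season=4):
    """Repeat the value observed one season earlier."""
    return [history[-season + (h % season)] for h in range(horizon)]


def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


demand = [10, 20, 30, 40, 12, 22, 32, 42, 14, 24, 34, 44]  # toy data
train, test = demand[:8], demand[8:]  # split by time, never shuffled

naive_mae = mean_absolute_error(test, naive_forecast(train, len(test)))
seasonal_mae = mean_absolute_error(test, seasonal_naive_forecast(train, len(test)))
print(naive_mae, seasonal_mae)  # 14.0 2.0
```

Any model you build later should beat the better of these two numbers on the same held-out period.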

Documentation Essentials

- Problem statements, metrics, and constraints.

- Data sources, licenses, and steps taken for cleaning. Consider dataset documentation practices like Datasheets for Datasets (Gebru et al.) and Model Cards for models (Mitchell et al.).

- Experiments: document what you tried, what worked, and what didn’t.

- Risks and mitigations: address aspects such as bias, privacy, safety, and potential failure modes.

Compute, Costs, and Infrastructure

Local vs. Cloud

- Local CPU: Ideal for data wrangling, classical ML, and small models.

- Free or Low-Cost GPU: Both Google Colab and Kaggle Notebooks provide accessible GPUs for practice (Colab, Kaggle Notebooks).

- Cloud: When your experiments scale, utilize managed compute services and storage, but keep a vigilant eye on quotas and costs.

Reproducibility and Experiment Tracking

- Utilize a requirements.txt or environment.yml. Consider Docker for consistent environments across machines (Docker).

- Track parameters, metrics, and artifacts using MLflow or Weights & Biases (MLflow, Weights & Biases).

- Version large datasets with DVC, and implement data quality checks using Great Expectations (DVC, Great Expectations).
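To show what experiment tracking records, here is a minimal stand-in that appends each run to a JSON Lines file. It is not the MLflow or Weights & Biases API; `log_run`, `noisy_score`, and the file location are hypothetical names invented for this sketch.

```python
import json
import random
import tempfile
import time
from pathlib import Path


def log_run(path, params, metrics):
    """Append one experiment record as a single JSON line."""
    record = {"timestamp": time.time(), "params": params, "metrics": metrics}
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def noisy_score(seed):
    """Stand-in for a training run whose result depends on the seed."""
    rng = random.Random(seed)
    return round(0.8 + rng.uniform(-0.05, 0.05), 4)


log_path = Path(tempfile.mkdtemp()) / "runs.jsonl"  # hypothetical location
params = {"seed": 42, "model": "baseline"}
metrics = {"accuracy": noisy_score(params["seed"])}
log_run(log_path, params, metrics)
print(log_path.read_text(encoding="utf-8"))
```

Recording the seed alongside the metrics is what lets you rerun an experiment and get the same number back.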

Ethics and Responsible AI Are Essential

Addressing Bias, Privacy, and Safety

Statistical bias and representation gaps can yield unjust outcomes, and privacy and security risks grow when you handle sensitive data. Good practice includes minimizing the data you collect, obtaining consent, and evaluating rigorously. The NIST AI Risk Management Framework offers practical guidance for identifying, assessing, and managing risks throughout the AI lifecycle.

Regulation is developing as well. The European Union has adopted the AI Act, a risk-based framework whose obligations depend on the risk level of the system. Following its principles can help prepare your work for future requirements (EU AI Act – EP press release).

Licensing and Data Provenance

Always respect licenses associated with datasets, models, and code. Clearly document data origins, processing steps, and usage constraints. Model and dataset cards enhance stakeholder understanding of limitations and risks (Model Cards, Datasheets for Datasets).

Reading Research Without Overwhelm

- Skim abstracts and figures first; determine whether the paper addresses your problem.

- Utilize curated resources: Papers With Code links papers to corresponding code and leaderboards (Papers With Code).

- Attempt to reproduce a small result before adopting a method, as many published results do not generalize cleanly to new data or settings.

- Track claims against evidence: Are the reported gains statistically significant? Are the baseline comparisons robust?
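One way to sanity-check a reported gain is a paired bootstrap over per-example correctness, sketched below. The correctness vectors are invented toy data, and `bootstrap_diff_ci` is a hypothetical helper using the simple percentile method; other interval constructions exist.

```python
import random


def bootstrap_diff_ci(correct_a, correct_b, n_resamples=2000, seed=0, alpha=0.05):
    """Percentile bootstrap CI for accuracy(B) - accuracy(A) on paired examples."""
    rng = random.Random(seed)
    n = len(correct_a)
    diffs = []
    for _ in range(n_resamples):
        # Resample example indices with replacement, keeping pairs together.
        idx = [rng.randrange(n) for _ in range(n)]
        acc_a = sum(correct_a[i] for i in idx) / n
        acc_b = sum(correct_b[i] for i in idx) / n
        diffs.append(acc_b - acc_a)
    diffs.sort()
    lo = diffs[int((alpha / 2) * n_resamples)]
    hi = diffs[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi


# Toy data: model A is right on 70 of 100 examples, model B on 73.
correct_a = [1] * 70 + [0] * 30
correct_b = [1] * 73 + [0] * 27
lo, hi = bootstrap_diff_ci(correct_a, correct_b)
print(f"95% CI for the accuracy gain: [{lo:.3f}, {hi:.3f}]")
```

If the interval includes zero, a three-point gain on a test set this small may not be distinguishable from resampling noise.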

Career, Interviews, and Portfolio Development

- Prioritize Portfolio Over Certificates: Hiring managers tend to favor tangible, reproducible projects that feature clean code and transparent write-ups.

- Tell a Compelling Story: What problem did you solve? How was success measured? What trade-offs were made?

- Practice for Interviews: Prepare for a mix of coding, ML concepts, product understanding, and effective communication. Be prepared to explain the rationale behind your decisions.

- Get Involved: Open issues and contribute modest pull requests to open source libraries. Engaging in discussions builds credibility and expands your network.

Avoiding Common Mistakes and Myths

- Neglecting Baselines: Always start with a simple model to establish a benchmark.

- Overfitting to Leaderboards: If you repeatedly tune against test data, your reported scores will overstate how the model performs in real-world scenarios.

- Leaving Data Collection for Last: Define your data requirements upfront; poor data will undermine even the best models.

- Chasing the Latest Trends: Select methods that align with your constraints rather than simply following the latest headlines.

- Assuming LLMs Are a Panacea: Sometimes a rule-based system or a smaller model may be more cost-effective, faster, and reliable.
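The baseline point is cheap to put into practice. Below is a minimal sketch of a majority-class baseline; the labels and example names are invented, and `majority_baseline` is a hypothetical helper rather than a library function.

```python
from collections import Counter


def majority_baseline(train_labels):
    """Return a predictor that always outputs the most common training label."""
    most_common_label, _ = Counter(train_labels).most_common(1)[0]
    return lambda _example: most_common_label


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


train_labels = ["ok"] * 8 + ["defect"] * 2          # toy, imbalanced labels
test_examples = ["img1", "img2", "img3", "img4", "img5"]
test_labels = ["ok", "ok", "defect", "ok", "ok"]

predict = majority_baseline(train_labels)
baseline_acc = accuracy(test_labels, [predict(x) for x in test_examples])
print(baseline_acc)  # 0.8
```

Here a predictor that learns nothing already scores 0.8, so a trained model reporting 0.82 accuracy would be far less impressive than it sounds.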

A Practical 90-Day Starter Curriculum

This plan balances foundational learning with hands-on projects and practical outcomes.

Weeks 1-3: Foundations and Python Basics

- Refresh your Python skills along with NumPy, pandas, and plotting.

- Learn the basics of linear algebra and probability while engaging in practical exercises.

- Complete two small notebooks: Exploratory Data Analysis (EDA) and a simple classification task.

Weeks 4-6: Classical ML and Evaluative Techniques

- Explore models: logistic regression, decision trees, random forests, and gradient boosting methods.

- Focus on evaluation: learn cross-validation, confusion matrices, ROC-AUC, and precision-recall techniques.

- Deliver a baseline project that features a comprehensive README and MLflow tracking.
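The evaluation metrics named for these weeks are simple enough to compute by hand once, which helps when reading library output later. The sketch below uses invented labels and hypothetical helper names.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return tp, fp, fn, tn


def precision_recall(tp, fp, fn):
    """Precision = tp / (tp + fp); recall = tp / (tp + fn)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]  # toy labels: 3 positives, 7 negatives
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
precision, recall = precision_recall(tp, fp, fn)
accuracy = (tp + tn) / len(y_true)
print(tp, fp, fn, tn)  # 1 1 2 6
print(accuracy, precision, round(recall, 3))
```

Accuracy looks acceptable at 0.7 while recall shows that two of the three positives were missed, which is why the metric has to match the goal.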

Weeks 7-9: Diving into Deep Learning and Transformers

- Build a small CNN or transformer following an established tutorial.

- Experiment with transfer learning for image classification or text tasks.

- Read a summary of Vaswani et al. (2017) and implement attention mechanisms based on guides.
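As a starting point for the attention exercise, here is a minimal sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, written with plain Python lists. It assumes a single head with no masking and no learned projections, and the vectors are toy values.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
queries = [[5.0, 0.0]]  # points toward the first key

outputs, weights = attention(queries, keys, values)
print(weights[0], outputs[0])
```

The query aligns with the first key, so most of the weight and therefore most of the output comes from the first value vector.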

Weeks 10-12: Developing LLM Applications and RAG

- Prototype a RAG application incorporating retrieval, citation, and a simple evaluation rubric.

- Add logging capabilities, prompt templates, and a feedback loop for continuous improvement.

- Craft a brief postmortem documenting risks, failures, and next steps.

Final Thoughts

AI thrives on curiosity, perseverance, and sound engineering practices. Begin with manageable projects, share your learning journey publicly, and ensure your work is reproducible. By prioritizing data quality, thorough evaluations, and responsible practices, you’ll create systems that deliver value no matter how the tools evolve in the future.

FAQs

Do I need a powerful GPU to start learning AI?

No, you can learn Python, data wrangling, classical ML, and even small deep learning models on a CPU. For more demanding tasks, utilize free or low-cost GPU options available through Colab or Kaggle.

Should I learn deep learning before classical ML?

Generally, no. Classical ML provides valuable insights into data splitting, baselines, and evaluation, which are applicable to deep learning and LLMs.

How do I prevent data leakage?

Split your data before fitting any transformations, fit preprocessors solely on the training data, and keep the test data untouched until final evaluation. The scikit-learn documentation on cross-validation covers this process in detail.

What is the difference between finetuning and RAG?

Finetuning alters model weights to absorb patterns from your data, whereas RAG retrieves relevant documents at query time and asks the model to ground its answers in those sources and cite them. RAG is often a more economical and maintainable option for knowledge-heavy tasks.

How do I evaluate an LLM application?

Combine automated checks (e.g., groundedness, formatting) with small human evaluations using task-specific rubrics. Monitor for hallucinations and assess whether the application assists users in meeting their goals.

Sources

- Stanford AI Index Report 2024

- Vaswani et al., 2017 – Attention Is All You Need

- Ouyang et al., 2022 – Training language models to follow instructions with human feedback

- HELM – Holistic Evaluation of Language Models

- scikit-learn – Model evaluation and cross-validation

- Kaggle – Data Leakage

- UCI Machine Learning Repository

- Kaggle Datasets

- PyTorch Tutorials

- Google Colab – Getting Started

- Kaggle Notebooks

- MLflow

- Weights & Biases

- DVC – Data Version Control

- Great Expectations

- NIST AI Risk Management Framework

- European Parliament – AI Act press release

- Model Cards for Model Reporting – Mitchell et al.

- Datasheets for Datasets – Gebru et al.

- Papers With Code

- MMLU – Measuring Massive Multitask Language Understanding

- TruthfulQA – Measuring how models imitate human falsehoods

- Deep Learning book – Goodfellow, Bengio, Courville

- Stanford CS229 – Machine Learning

- fast.ai – Practical Deep Learning

- Streamlit

- FastAPI

- LangChain – Retrieval cookbook

- LlamaIndex – Documentation

- Docker – Docs
