Understanding the GPT Pretraining Process

Introduction

Generative Pre-trained Transformers (GPT), developed by OpenAI, have had a major impact on artificial intelligence, particularly in natural language processing (NLP). GPT models generate text that is coherent and contextually relevant. In this blog, we look closely at the GPT pretraining process: its fundamentals, the mechanics behind it, and why it’s pivotal for the model’s performance.

What is GPT?

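Generative Pre-trained Transformer, or GPT, refers to a series of models designed and developed by OpenAI. These models use the transformer architecture, first introduced in the 2017 paper ‘Attention Is All You Need’ by Vaswani et al. Unlike recurrent models, which process a sequence one token at a time, a transformer uses self-attention to process all positions of the input in parallel during training. GPT uses the decoder-only variant of this architecture: a causal mask lets each position attend only to itself and earlier positions, so the model still reads left to right even though the computation is parallel. This design helps GPT models capture long-range dependencies in text and makes training on very large datasets practical.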
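To make the idea of masked self-attention concrete, here is a minimal sketch of single-head scaled dot-product attention with a causal mask, written in plain Python. The function names and the toy input are assumptions for illustration. A real implementation would use learned query, key, and value projections, several heads, and batched matrix operations instead of Python loops.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Each argument is a list of vectors, one per position. Position i
    may attend only to positions 0..i.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the query against the visible prefix only; later positions are masked out.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors.
        out = [sum(w * values[j][k] for j, w in enumerate(weights))
               for k in range(len(values[0]))]
        outputs.append(out)
        # Pad with zeros so every row has one weight per position.
        all_weights.append(weights + [0.0] * (len(queries) - i - 1))
    return outputs, all_weights

# Toy input: 3 positions with 2-dimensional vectors (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_attention(x, x, x)
```

Each row of `weights` sums to 1 and is zero for every later position, which is what keeps a position from seeing the tokens it will be asked to predict.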

The Pretraining Explained

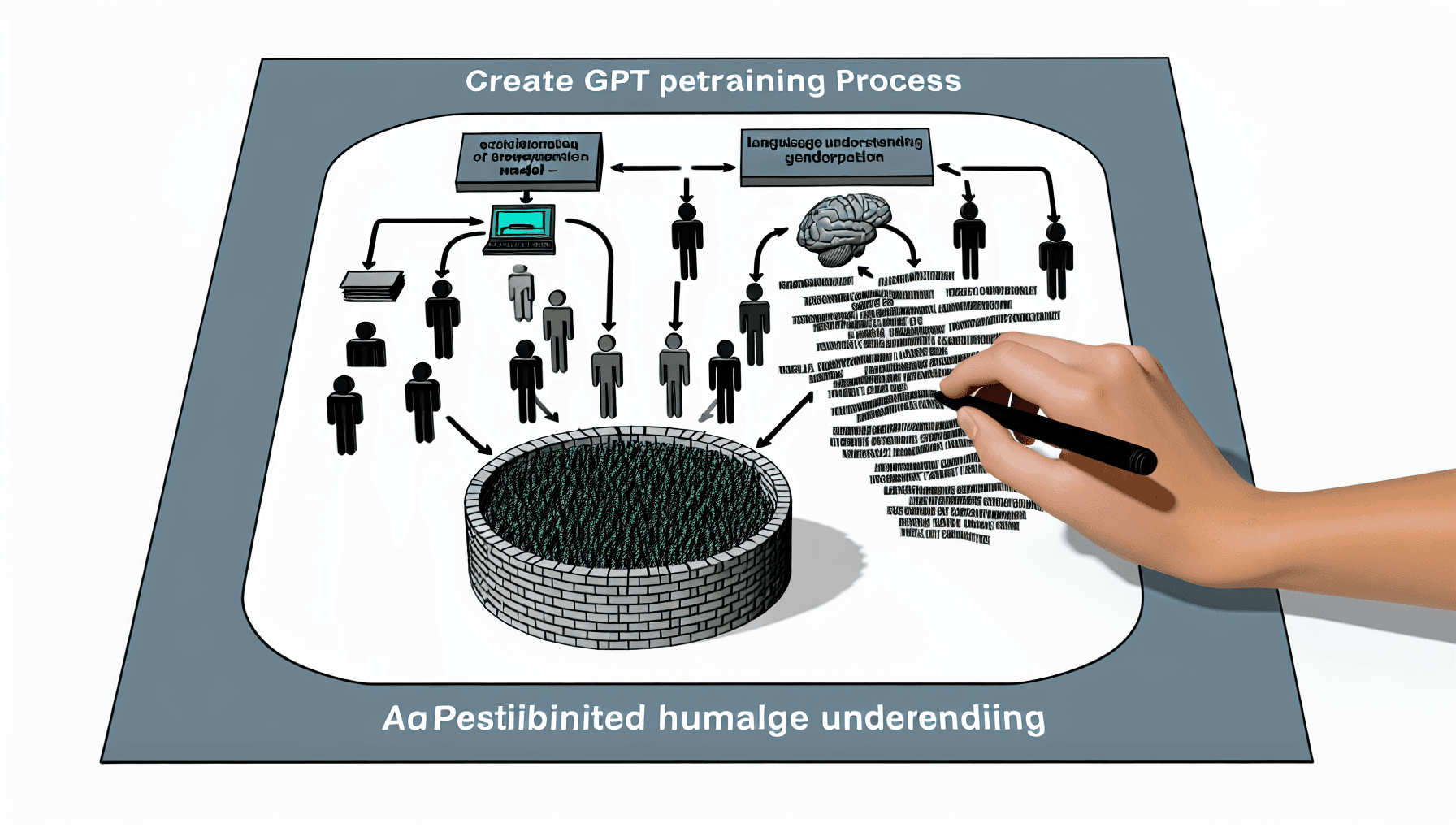

Before diving into the specifics of how the GPT models are pretrained, it’s essential to understand what ‘pretraining’ means in the context of machine learning. Pretraining involves training a machine learning model on a large dataset using a general task that does not require human-annotated labels. This step helps the model to learn a broad understanding of the language, which can be refined later through fine-tuning on specific tasks.

GPT models are pretrained with a self-supervised technique known as ‘auto-regressive language modeling’. The labels come from the text itself: the model is trained to predict the next token (a word or a piece of a word) given all the tokens before it, and the training objective is the cross-entropy between its predicted distribution and the token that actually follows. This process requires a vast amount of text data and computational power. The benefit is that a single, simple objective pushes the model to pick up grammar, facts, and style from raw text without any manual annotation.
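As a rough illustration of that objective, the sketch below turns a token sequence into (context, target) pairs and computes the average negative log-likelihood. The helper names and the `prob_fn` callable are hypothetical, and whitespace splitting stands in for a real subword tokenizer.

```python
import math

def next_token_pairs(tokens):
    """Return (context, target) pairs: every prefix predicts the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def average_nll(tokens, prob_fn):
    """Mean negative log-likelihood of each token given its prefix.

    prob_fn(context, target) is a hypothetical callable that returns the
    model's probability for `target` after `context`.
    """
    pairs = next_token_pairs(tokens)
    return -sum(math.log(prob_fn(ctx, tgt)) for ctx, tgt in pairs) / len(pairs)

# Whitespace splitting is a stand-in for a real subword tokenizer.
tokens = "the cat sat on the mat".split()
pairs = next_token_pairs(tokens)

# Baseline "model": a uniform distribution over the distinct words seen here.
vocab = sorted(set(tokens))

def uniform(context, target):
    return 1.0 / len(vocab)

loss = average_nll(tokens, uniform)  # equals log(len(vocab)) for a uniform model
```

A trained model should score well below this uniform baseline. Pretraining amounts to lowering this number over a very large corpus.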

Steps in the GPT Pretraining Process

- Data Collection and Preparation: The first step in the pretraining process involves collecting a diverse dataset. This dataset should be large and cover a wide variety of topics and writing styles, which reduces (but does not eliminate) bias towards any particular subject. The data then needs to be cleaned and prepared for training, which typically involves removing non-text elements, corrupted segments, and duplicated text, and then converting the text into tokens.

- Choosing the Right Model Architecture: Once the data is ready, the next step is to select an appropriate model architecture. For GPT, this involves setting up a decoder-only transformer and choosing the number of layers, the number of attention heads, the hidden size, and the context length, which together determine the total parameter count.

- Training: With the architecture set, the actual training begins. Batches of tokenized text are fed into the model, the next-token prediction loss is computed, and backpropagation with a gradient-based optimizer (such as Adam) adjusts the model’s parameters (its weights and biases) to reduce that loss. This process is computationally intensive and can take days, weeks, or longer, depending on the size of the model and the computational resources available.

- Evaluation and Iteration: During and after training, the model’s performance is evaluated on held-out text, most commonly with validation loss or perplexity, and often with downstream benchmarks as well. If the performance is not satisfactory, adjustments are made to the data, the architecture, or the training setup, and the model is trained further. This iterative process continues until the model reaches the desired level of quality.
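The four steps above can be sketched end to end at toy scale. The example below is an illustration under strong assumptions, not how GPT itself is trained: a bigram model (one table of logits) stands in for the transformer, full-batch gradient descent stands in for Adam, the corpus is two made-up sentences, and the loss is measured on the training text because there is too little data to hold any out. All function names are hypothetical.

```python
import math
import re

def clean(text):
    """Strip markup-like tags and collapse whitespace (a stand-in for real cleaning)."""
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip().lower()

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def mean_loss(logits, pairs):
    """Average cross-entropy of next-token predictions over (prev, next) id pairs."""
    return -sum(math.log(softmax(logits[p])[n]) for p, n in pairs) / len(pairs)

def train(pairs, vocab_size, epochs=200, lr=0.5):
    """Fit a bigram model, logits[prev][next], by full-batch gradient descent."""
    logits = [[0.0] * vocab_size for _ in range(vocab_size)]
    for _ in range(epochs):
        grads = [[0.0] * vocab_size for _ in range(vocab_size)]
        for p, n in pairs:
            probs = softmax(logits[p])
            for k in range(vocab_size):
                # Gradient of cross-entropy w.r.t. logit k: prob_k - 1[k == target].
                grads[p][k] += (probs[k] - (1.0 if k == n else 0.0)) / len(pairs)
        for i in range(vocab_size):
            for k in range(vocab_size):
                logits[i][k] -= lr * grads[i][k]
    return logits

# 1. Data collection and preparation (made-up corpus with HTML-like residue).
raw = "<p>the cat sat on the mat.</p>  <p>the dog sat on the rug.</p>"
words = clean(raw).split()
vocab = {w: i for i, w in enumerate(sorted(set(words)))}
ids = [vocab[w] for w in words]
pairs = list(zip(ids, ids[1:]))

# 2. "Architecture": a vocab_size x vocab_size logit table, all zeros at the start.
initial_loss = mean_loss([[0.0] * len(vocab) for _ in vocab], pairs)

# 3. Training.
logits = train(pairs, len(vocab))

# 4. Evaluation (on the training pairs here; a real run would use held-out text).
final_loss = mean_loss(logits, pairs)
perplexity = math.exp(final_loss)
```

Perplexity is the exponential of the average loss, so a drop from `initial_loss` to `final_loss` means the model has narrowed down which tokens tend to follow which.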

Importance of Hyperparameter Tuning

Hyperparameter tuning is crucial in the GPT pretraining process. It involves adjusting the settings that govern the model and its training, such as the number of layers, the number of attention heads, the learning rate (and its schedule), and the batch size. These choices can significantly affect how effectively and efficiently the model learns. Because a single full-scale run is so expensive, hyperparameters are often explored on smaller models first and then carried over to the large one.
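To show how these settings fit together, here is a small sketch of a configuration object, a rough parameter-count estimate, and a learning-rate schedule with warmup and cosine decay. The class and function names are hypothetical. The architectural defaults mirror the publicly reported smallest GPT-2 configuration, while the learning-rate values are illustrative assumptions and do not come from any specific training run.

```python
import math
from dataclasses import dataclass

@dataclass
class GPTConfig:
    n_layer: int = 12
    n_head: int = 12
    d_model: int = 768
    vocab_size: int = 50257
    context_len: int = 1024
    peak_lr: float = 6e-4        # illustrative value
    warmup_steps: int = 2000     # illustrative value
    total_steps: int = 100_000   # illustrative value

def approx_params(cfg):
    """Rough parameter count: 12 * d_model^2 weights per block plus embeddings.

    Ignores biases and layer-norm parameters, and assumes a 4x MLP
    expansion with shared input and output token embeddings.
    """
    per_block = 12 * cfg.d_model ** 2   # 4*d^2 for attention + 8*d^2 for the MLP
    embeddings = (cfg.vocab_size + cfg.context_len) * cfg.d_model
    return cfg.n_layer * per_block + embeddings

def learning_rate(step, cfg):
    """Linear warmup to peak_lr, then cosine decay to zero at total_steps."""
    if step < cfg.warmup_steps:
        return cfg.peak_lr * (step + 1) / cfg.warmup_steps
    progress = (step - cfg.warmup_steps) / max(1, cfg.total_steps - cfg.warmup_steps)
    return 0.5 * cfg.peak_lr * (1.0 + math.cos(math.pi * min(1.0, progress)))

cfg = GPTConfig()
n_params = approx_params(cfg)   # about 124 million with these defaults
```

Note that the number of attention heads does not change the estimate: heads split `d_model` into smaller pieces and leave the total number of weights unchanged.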

Challenges in Pretraining GPT Models

Despite the advanced capabilities of GPT models, their pretraining is not without challenges. The primary issue is the requirement for substantial computational resources, which can be expensive and inaccessible for many researchers and organizations. Additionally, large models can overfit, especially when the same data is seen many times: the model memorizes patterns specific to the training data and does not generalize well to new, unseen data. A widening gap between training loss and validation loss is the usual warning sign.
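Both challenges can be put into rough numbers. The sketch below uses a widely quoted rule of thumb of about 6 floating-point operations per parameter per training token to estimate compute, and a simple patience-based check on validation loss to flag overfitting. The function names, the hardware figures, and the 40% utilization are assumptions for illustration only.

```python
def training_flops(n_params, n_tokens):
    """Rule-of-thumb compute: about 6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens

def training_days(n_params, n_tokens, n_devices, flops_per_device, utilization=0.4):
    """Wall-clock estimate in days; utilization is an assumed fraction of peak throughput."""
    effective = n_devices * flops_per_device * utilization
    seconds = training_flops(n_params, n_tokens) / effective
    return seconds / 86_400

def should_stop(val_losses, patience=3):
    """True once validation loss has not improved for `patience` evaluations in a row."""
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    return min(val_losses[-patience:]) >= best_before

# Hypothetical run: 125M parameters, 10B tokens, 8 devices at 100 TFLOP/s peak each.
flops = training_flops(125_000_000, 10_000_000_000)
days = training_days(125_000_000, 10_000_000_000, n_devices=8, flops_per_device=100e12)

# Validation loss that bottoms out and then rises suggests overfitting.
stop_now = should_stop([3.0, 2.5, 2.4, 2.45, 2.5, 2.6])
```

Because the estimate scales with the product of parameters and tokens, moving from this small example to models with billions of parameters and trillions of tokens raises the cost by several orders of magnitude.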

Conclusion

The GPT pretraining process is a complex but fascinating journey that plays a crucial role in building powerful language models. By understanding the nuances of this process, researchers and practitioners can better leverage these models for a variety of applications in natural language processing and beyond. With continued advancements and innovations, the future of GPT and similar models looks promising, offering vast potential for both academic and commercial endeavors in AI.


Thank You for Reading this Blog and See You Soon! 🙏 👋
