Tiny PCs, Big AI: NVIDIA Blackwell SFF GPUs Bring Data Center-Class Acceleration to Compact Workstations

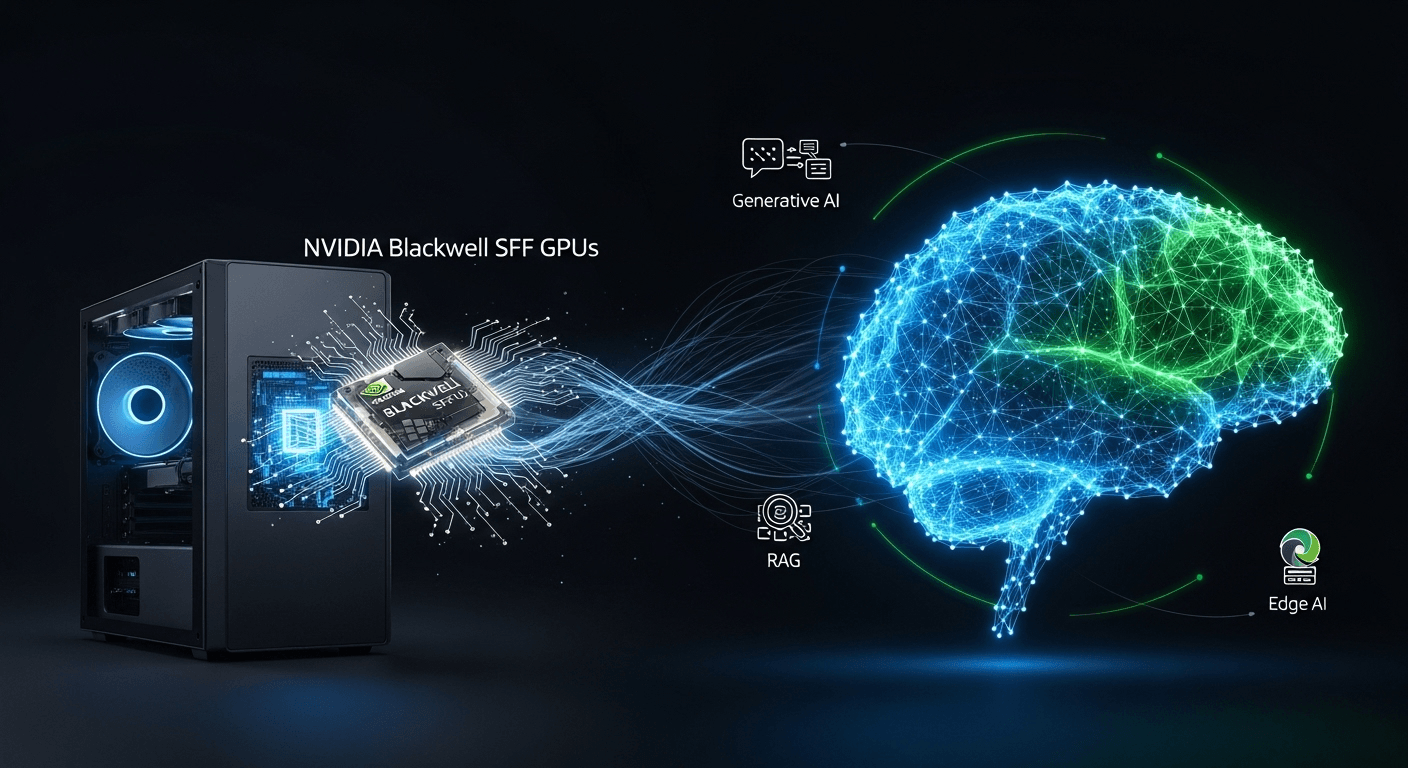

AI is no longer confined to cloud data centers. With NVIDIA Blackwell small form factor (SFF) GPUs, AI acceleration now fits in energy-efficient workstations that can sit under a desk, in a lab, or at the network edge. This article covers what the shift means, why it matters, and how to choose a compact configuration for modern AI workloads.

Why Small Form Factor GPUs Matter Now

As AI projects become more demanding, teams increasingly need rapid iteration, local data privacy, and predictable costs. That makes on-premises and edge systems appealing for teams building specialized models, fine-tuning large language models (LLMs), implementing retrieval-augmented generation (RAG), or running computer vision in environments like factories and hospitals. Until recently, these tasks required bulky towers or servers. NVIDIA Blackwell SFF GPUs change that by bringing high-performance AI acceleration to compact workstations with modest power and cooling requirements.

NVIDIA’s Blackwell architecture succeeds the Hopper generation, with performance and efficiency improvements aimed at generative AI and large-scale inference. It introduces an updated transformer engine, new low-precision numerical formats, and platform-wide reliability features that help AI capabilities move from server racks to desktops. NVIDIA’s announcement of SFF Blackwell GPUs and partner systems, and the architecture overview on the official NVIDIA blog, are listed under Sources.

What Is NVIDIA Blackwell in Simple Terms?

Blackwell is NVIDIA’s GPU architecture designed for the growing demands of generative AI. It emphasizes speed, memory efficiency, and the ability to run large transformer models effectively. Three points summarize why it matters:

- Efficient Math for AI: The new transformer engine and support for low-precision formats are optimized to accelerate LLMs and diffusion models while maintaining output quality. This efficiency enables SFF GPUs to deliver more performance per watt for training small models, fine-tuning, and high-throughput inference. Source

- Scalability Across Sizes: From data center chips like the GB200 Grace Blackwell to desktop-class GPUs, the platform ensures consistent tools and performance, allowing developers to prototype locally while deploying at scale. Source

- Enhanced Reliability and Security: Blackwell incorporates hardware and software features to improve uptime and data integrity for always-on AI systems. Coverage
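To make the low-precision point concrete, here is a rough back-of-the-envelope sketch of how precision affects the memory needed just to hold model weights. It assumes weights dominate memory and uses the simple rule parameters × bits per parameter ÷ 8. The 8-billion-parameter model is a hypothetical example, and the estimate ignores activations, KV cache, and framework overhead, so real usage will be higher.

```python
# Rough weight-memory estimate: parameters x bits per parameter / 8.
# Illustrative only: ignores activations, KV cache, and runtime overhead.

BITS_PER_PARAM = {"fp32": 32, "fp16": 16, "fp8": 8, "fp4": 4}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    bits = BITS_PER_PARAM[precision]
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    params = 8e9  # hypothetical 8-billion-parameter model
    for precision in BITS_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(params, precision):.1f} GB")
```

Halving the bits per parameter halves the weight footprint, which is why lower-precision formats matter so much on cards with limited VRAM.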

What Makes a GPU Small Form Factor?

In this context, a small form factor typically refers to a short-length, single-slot or slim dual-slot PCIe card that can fit into compact desktops, mini towers, rackmount systems with size constraints, or embedded PCs mounted on walls. These GPUs are designed for lower acoustic and thermal footprints while still supporting workstation-class drivers and software.

When paired with an appropriate CPU, memory, and storage, an SFF GPU can transform an 8 to 15-liter chassis into a powerful AI development and inference system. For many teams, this range strikes a perfect balance between the limitations of laptops and the noise and power consumption of servers.

Use Cases for Blackwell SFF GPUs

Despite their compact size, Blackwell SFF GPUs are built for serious applications. Common use cases include:

- Prototyping and Fine-tuning: Experiment with LLMs, vision transformers, and diffusion models locally, enabling rapid iterations on prompts, adapters, and lightweight fine-tuning without waiting for cloud processing times.

- High-throughput Inference: Deploy chatbots, RAG pipelines, or multimodal assistants in departments requiring data localization.

- Edge AI: Implement defect detection in manufacturing lines, triage processes in clinical work, or smart retail analytics, where low latency and privacy are paramount.

- Digital Twins and Simulation: Integrate physics and AI for layout planning, robotics, and operations using NVIDIA tools like Omniverse and Isaac. Omniverse | Isaac Sim

Software Stack: From CUDA to NIM

Hardware is just one part of the equation. NVIDIA’s robust software ecosystem accelerates the journey from idea to deployment. On Blackwell SFF GPUs, you can utilize:

- CUDA and cuDNN: The core compute platform and deep learning library for accelerating AI and HPC workloads on NVIDIA GPUs. CUDA

- TensorRT and Triton: Tools designed for optimizing and serving high-performance inference workloads. TensorRT | Triton

- NVIDIA NIM: Microservices offering optimized inference pipelines and models behind standard APIs for seamless switching between local GPUs and cloud environments. Overview

- RTX Acceleration: For visualization, rendering, and mixed AI-graphics workloads typical in product design and digital twins. RTX

Since these tools are consistent across NVIDIA GPUs, you can prototype on a compact workstation and scale it to the cloud or a data center with minimal code modifications. Source
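As one illustration of switching between a local GPU and a remote deployment, the sketch below builds the same chat request against two base URLs. It assumes the serving endpoint exposes an OpenAI-style `/v1/chat/completions` route, which NIM LLM microservices are documented to offer; the hosts, port, and model name are hypothetical placeholders, not values from NVIDIA's documentation.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(
    base_url: str,
    model: str,
    prompt: str,
    api_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style chat completions route.

    Assumption: the server accepts the OpenAI chat schema at
    /v1/chat/completions. Only base_url changes between environments.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Hypothetical endpoints: a local workstation and a remote deployment.
local_req = build_chat_request("http://localhost:8000", "example-model", "Hello")
remote_req = build_chat_request(
    "https://inference.example.com", "example-model", "Hello", api_key="YOUR_KEY"
)
# To send a request: urllib.request.urlopen(local_req, timeout=30)
```

Keeping the base URL as the only environment-specific value is what lets the calling code stay unchanged when a workload moves from the desk to the data center.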

SFF Workstations vs. Laptops vs. Servers

Which platform is best for AI tasks? Here’s a simple guide to help you decide:

- Laptops: Ideal for mobility and demonstrations but limited in sustained performance and VRAM compared to desktops.

- SFF Workstations: A balanced option with more capability than a laptop while being quieter and more space-efficient than a server. Perfect for prototyping, fine-tuning, and maintaining steady inference loads.

- Servers: Best suited for large-scale training, multi-GPU scaling, and running high-throughput services 24/7.

Blackwell SFF GPUs are specifically designed for this middle tier, where agility, cost-effectiveness, and data locality are critical.

Buyer’s Guide: How to Configure a Compact AI Workstation

To maximize the potential of a Blackwell SFF GPU, consider the entire system, not just the GPU:

- CPU: Opt for a modern multi-core processor to prevent bottlenecks during data preprocessing and RAG retrieval.

- Memory: A minimum of 32 GB is advisable for AI development; 64 GB or more is preferable for multi-model tasks.

- Storage: Choose fast NVMe SSDs and consider having a dedicated scratch drive for datasets and checkpoints.

- Power and Cooling: Ensure your power supply unit (PSU) can handle peak demands with some overhead. Although SFF cards are designed for lower power usage, effective airflow remains crucial.

- Chassis and Acoustics: Select cases with filtered intakes and clear airflow paths. Quiet operation is a significant advantage for SFF setups in office and lab environments.

- Networking: Use 2.5 GbE or 10 GbE for efficient dataset synchronization and model artifact transfers. Wi-Fi 6E can work for smaller teams, but wired connections are more reliable.

- OS and Drivers: Regularly update NVIDIA drivers, CUDA, and containers. Consider using containerized environments with Docker and the NVIDIA Container Toolkit.
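For the power budget in particular, a quick calculation helps when comparing chassis and PSU options. The sketch below sums peak component draw and adds headroom. The 25% headroom default and the wattages in the example are placeholder assumptions for illustration, not specifications for any Blackwell SFF card; substitute the figures from your own component datasheets.

```python
from typing import Mapping


def recommended_psu_watts(
    component_peak_watts: Mapping[str, float],
    headroom: float = 0.25,
) -> float:
    """Sum peak draw across components and add fractional headroom.

    headroom=0.25 means 25% above the summed peak (an assumed rule of thumb).
    """
    if headroom < 0:
        raise ValueError("headroom must be non-negative")
    total_peak = sum(component_peak_watts.values())
    return total_peak * (1.0 + headroom)


if __name__ == "__main__":
    # Placeholder wattages; replace with datasheet values for your parts.
    build = {"gpu": 70.0, "cpu": 125.0, "drives_fans_board": 60.0}
    print(f"Suggested PSU rating: {recommended_psu_watts(build):.0f} W")
```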

Real-World Examples

Here are specific scenarios where small, quiet AI workstations excel:

- Healthcare Imaging Labs: On-premises inference for de-identification, triage, or workflow quality assurance without transferring sensitive scans to the cloud.

- Manufacturing QA: Implementing real-time vision models at workstations, integrated with local PLCs or robots for instant decision-making.

- Cybersecurity Teams: Utilizing embeddings, anomaly detection, and LLM-assisted triage with private logs that cannot leave the secure network.

- Product Design Studios: Accelerating generative CAD, texture synthesis, and photorealistic rendering using a single compact GPU.

- Retail and Logistics: Conducting footfall analytics, shelf monitoring, or route optimization while ensuring data residency.

Total Cost of Ownership and Sustainability

SFF workstations can offer strong price-performance once you account for:

- Reduced Energy and Cooling Costs: A single SFF GPU typically draws far less power than a multi-GPU server and is easier to cool.

- Stable Capacity: Avoid cloud queues or unexpected egress fees. You maintain control over utilization and scheduling.

- Extended Usable Life: Workstation-class drivers and ISV certifications generally enhance the longevity of the system. ISV certifications

In an age where sustainability and data privacy are top priorities for organizations, this right-sized computing approach often offers a compelling business case.

Ecosystem and Partner Systems

NVIDIA collaborates with major OEMs and system integrators to deliver ready-to-use compact workstations featuring Blackwell SFF GPUs, complete with professional drivers and compatibility with leading AI software stacks. Configurations vary by region and vendor, but the underlying principle is the same: data center-class AI features in a desktop-friendly format. See NVIDIA’s official news release on Blackwell SFF GPUs and the latest partner systems.

From Prototype to Production: A Practical Workflow

- Start Local: Initiate a project on your SFF workstation using CUDA, PyTorch, and TensorRT. Adopt a containerized approach from the outset.

- Optimize: Profile your model and transition to more efficient precision levels as needed. Utilize TensorRT for accelerated inference.

- Package: Create a reproducible container image and publish your model using NVIDIA NIM or Triton inference server APIs.

- Scale: As demand increases, deploy the same container to larger on-site servers or a managed GPU cloud, while retaining observability and cost management.

- Secure: Implement role-based access controls, secrets management, and data loss prevention from the outset, especially for RAG and fine-tuning workflows.
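The Optimize step starts with measurement. Below is a minimal, framework-agnostic latency harness, offered as a sketch rather than a substitute for a real profiler. It assumes the callable you pass blocks until the work is finished; GPU frameworks often launch work asynchronously, so the callable should wait for completion before returning. The `fake_inference` function is a hypothetical stand-in for a model call.

```python
import statistics
import time
from typing import Callable, Dict


def profile_latency(
    fn: Callable[[], object],
    warmup: int = 5,
    runs: int = 50,
) -> Dict[str, float]:
    """Time repeated calls to fn and report mean, p50, and p95 in milliseconds."""
    # Warm-up calls absorb one-time costs such as lazy initialization.
    for _ in range(warmup):
        fn()

    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()  # must block until the work is actually complete
        samples_ms.append((time.perf_counter() - start) * 1000.0)

    samples_ms.sort()
    p95_index = min(len(samples_ms) - 1, int(0.95 * len(samples_ms)))
    return {
        "mean_ms": statistics.mean(samples_ms),
        "p50_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
    }


def fake_inference() -> int:
    """Hypothetical stand-in for a model call."""
    return sum(i * i for i in range(10_000))


if __name__ == "__main__":
    stats = profile_latency(fake_inference, warmup=3, runs=20)
    print({name: round(value, 3) for name, value in stats.items()})
```

Running the same harness before and after a precision or engine change gives a like-for-like comparison on the target workstation.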

How Blackwell Advances Generative AI

Both independent analyses and NVIDIA’s documentation highlight two major trends accelerated by Blackwell: higher throughput for transformer workloads and enhanced efficiency at lower precision levels. This enables quicker fine-tuning and more cost-effective inference, particularly for enterprise copilots, content creation, code assistance, and applications that integrate text, images, audio, and structured data. The Verge | AnandTech

For compact workstations, the advantage is the ability to do more work locally within a tight power and thermal budget. This translates to shorter feedback loops for developers and stronger privacy for sensitive data deployments.

Security and Privacy Benefits of Local AI

By keeping AI workloads on-premises or at the edge, organizations can minimize data movement risks. This approach allows comprehensive auditing of access, prevents the transmission of sensitive information to external services, and facilitates compliance with regional data residency regulations. NVIDIA’s enterprise software and partner ecosystem provide robust support for authenticated access, encryption in transit, and secure drivers that enterprises rely on. NVIDIA security resources

Frequently Asked Questions

What is special about NVIDIA Blackwell SFF GPUs compared to previous models?

They incorporate the latest advancements of the Blackwell architecture in compact, lower-power cards tailored for small workstations, offering improvements in transformer performance, generative AI efficiency, and access to the latest software stack in a smaller, quieter form factor. Source

Can a compact workstation handle LLM fine-tuning, or is it limited to inference tasks?

It depends on the model size and dataset. Many teams fine-tune smaller or adapter-based models locally, then move larger jobs to more powerful systems. SFF GPUs are well suited to quick iterations and to production inference that must remain on-premises.

How do Blackwell SFF GPUs compare to laptop GPUs for AI tasks?

While high-end laptops are versatile, SFF desktops provide sustained performance, greater VRAM capacity, better thermal management, and upgradeability, making them more suitable for ongoing AI applications.

Which software frameworks are supported?

The supported stack typically includes CUDA, cuDNN, PyTorch, TensorFlow, TensorRT, Triton Inference Server, Omniverse, and NVIDIA NIM microservices, backed by enterprise-ready drivers and ISV certifications. Developer resources

Are OEM systems available, or do I need to build my own?

Several OEMs and system integrators provide compact workstations featuring professional NVIDIA GPUs. NVIDIA’s announcement highlights various partner systems designed for SFF deployments. Official announcement

Key Takeaways

- Blackwell SFF GPUs enable significant AI acceleration in compact, quiet, and energy-efficient workstations.

- NVIDIA’s consistent software stack facilitates a smooth transition from local prototypes to full production deployments.

- For teams prioritizing privacy, cost predictability, and rapid iterations, SFF workstations present a smart compromise between laptops and servers.

AI is becoming smaller, faster, and more accessible, moving closer to where your data resides. With Blackwell SFF GPUs, you no longer need a dedicated server room to create and deploy advanced AI solutions.

Sources

- NVIDIA announces Blackwell SFF GPUs and partner systems

- NVIDIA Blackwell architecture overview

- NVIDIA unveils the Blackwell platform

- The Verge coverage of Blackwell announcement

- AnandTech deep dive into Blackwell architecture

- NVIDIA NIM microservices overview

- CUDA Toolkit

- NVIDIA Triton Inference Server

- NVIDIA ISV certifications

- NVIDIA Omniverse

- NVIDIA Isaac Sim

- NVIDIA AI developer resources
