Three Challenges Today’s AI Faces – And How You Can Address Them

Three Challenges Today’s AI Faces – And How You Can Address Them

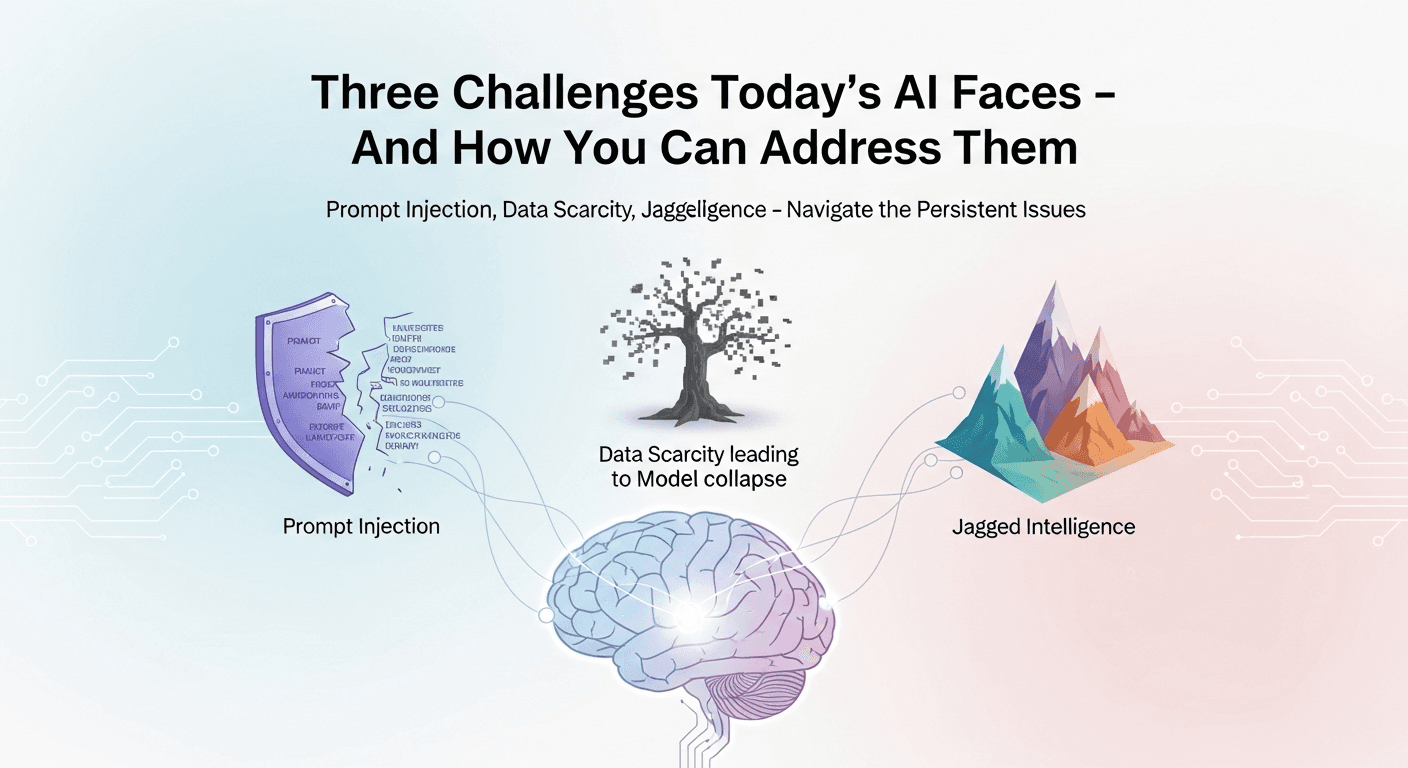

If you’ve interacted with modern AI models, you’ve likely encountered both their impressive capabilities and their limitations. They excel at drafting, translating, summarizing, and navigating complex prompts. Yet, they still produce inaccuracies, can be easily misled, and struggle in lengthy tasks. While it’s common to hope that advancements in computing power, larger datasets, and refined prompts will resolve these issues, the reality is that three significant challenges are deeply entrenched in current large language models (LLMs).

These challenges are not typographical errors; they are foundational issues that persist across various applications, vendors, and benchmarks. Addressing them is not as simple as applying a software patch. Instead, they require a deeper understanding of AI architecture.

In this article, we will explore these three persistent problems, discuss why they’re challenging to resolve, share insights from recent research, and provide practical strategies for working around them. This discussion builds on Sabine Hossenfelder’s October 19, 2025, blog post, which identifies unresolvable issues within today’s AI models, supplemented by recent studies and industry insights.

Key Takeaways

- Challenge 1: Instruction-data confusion makes models vulnerable to prompt injections and jailbreaks, as detailed in the OWASP LLM Top 10.

- Challenge 2: Data scarcity and synthetic-data feedback loops lead to model degradation. As the availability of high-quality data diminishes, models that rely on their outputs are at risk.

- Challenge 3: Irregular intelligence and weak generalization ensure inconsistent performance in real-world applications. While benchmark scores may improve, reliability often falls short in everyday tasks.

Now, let’s dive deeper into each issue.

Challenge 1: Models Struggle to Distinguish Instructions from Data

Large language models are designed to predict the next sequence of words based on a given input that may include user instructions, web pages, emails, PDFs, or tool outputs. To these models, everything is simply text, leading to significant security vulnerabilities. Attackers can embed misleading instructions within the input data, which the model might then erroneously execute. This phenomenon is known as prompt injection.

Globally, security experts classify prompt injection as a crucial risk. The OWASP LLM Applications Top 10 identifies it as the primary threat, highlighting how strategically crafted inputs can hijack systems, leak sensitive information, or provoke unintended actions.

Extensive research has demonstrated that state-of-the-art models can be easily fooled by human-written prompts designed to elicit unintended responses. Projects like Tensor Trust have documented over 126,000 prompt injections, showcasing their effectiveness across various models.

Emerging variants of prompt injections include:

– Imprompter-style payloads: Seemingly nonsensical strings that instruct models to share personal information, achieving success rates near 80%.

– System prompt poisoning: Infiltrates hidden developer instructions, silently altering all subsequent interactions within the model.

– Backdoor-powered prompt injection: Plants triggers during model fine-tuning that can later override the intended instruction hierarchy.

Why This Issue Is Difficult to Fix

- Objective Alignment: As a next-token predictor, the model has no inherent way to differentiate between “instruction” and “content.” If it can read it, it can be manipulated to treat it as a command. While defensive strategies can mitigate this risk, they don’t eliminate the underlying ambiguity.

- Limitations of Defense: Techniques like multi-agent protection and instruction referencing can minimize potential attacks. However, new attack vectors often arise, creating a cyclical battle between vulnerability and defense.

Conclusion: Until models can inherently separate commands from data and enforce strict boundaries, prompt injection will remain a significant hazard. Consider it akin to SQL injection in the context of LLMs; instead of searching for a universal solution, design your systems to accommodate and safeguard against risks.

Challenge 2: Data Scarcity and Feedback-Induced Collapse

The drive to scale models has fueled many recent advancements in AI. However, scaling compute must naturally align with expanding datasets. Estimates indicate that the global supply of high-quality public text could be fully utilized in AI training runs between 2026 and 2032. As this limit approaches, reliance on synthetic data may ensue, leading to deterioration.

Model collapse occurs when systems are trained predominantly on their generated outputs. Rare data patterns fade, and, over time, results devolve into repetitive or nonsensical text. Research indicates that relying solely on synthetic data is a guaranteed pathway to collapse, necessitating careful mixing with human-generated content.

This issue is not just theoretical; tech analysts have already pointed out signs of decline as the industry increases its reliance on synthetic data. Concerns about model collapse are growing, with projections indicating that the optimal use of public text will come to an end around 2028.

Why This Issue Is Difficult to Fix

- Physical and Statistical Limits: There’s a finite amount of high-quality human-generated text. Once saturated, adding computational power alone won’t yield substantial improvements.

- Coordination Challenges: While watermarking and proper data curation can help mitigate the risks of synthetic data, consistent implementation across the industry is complex. The value of any remaining clean data declines as sources become more competitive.

Takeaway: The future will likely lean towards diversifying data collection methods, focusing on physical domain-specific sources, and developing hybrid systems that validate information rather than merely memorizing it.

Challenge 3: Jagged Intelligence and Inconsistent Generalization

Modern AI models can achieve high scores on rigorous benchmarks yet still falter in straightforward, everyday tasks. This phenomenon is termed “jagged intelligence,” where peaks of impressive performance coexist with surprising flaws. For instance, Salesforce reports that state-of-the-art models excel in isolated tasks but struggle in multi-step processes, often compromising essential information or breaching policies.

This inconsistency is evident in foundational video models, which, despite high accuracy in some trials, perform poorly in tasks that require an understanding of causality or spatial relationships. Analysts have highlighted that benchmark success doesn’t correlate with reliable generalization across varied scenarios.

Why This Issue Is Difficult to Fix

- Mismatch in Objectives: While next-token prediction represents a sophisticated compression of knowledge, it does not inherently compel models to construct verifiable, causal models of their environment. This mismatch results in confident yet fragile outputs, especially in unfamiliar contexts.

- Lack of Reliable Metrics: Current evaluation methods emphasize average scores over minimum performance in variable conditions. When enterprises monitor reliability in longer workflows, significant vulnerabilities reveal themselves.

Summary: Although current models showcase remarkable generalization within known parameters, they can struggle with routine yet complex tasks. Without architectural adjustments that promote planning, tool utilization under constraints, and verification steps, this jagged performance will continue.

Practical Strategies for Today

While these challenges are deeply rooted, there are actionable measures you can take. Embrace the concept of “risk-managed AI” rather than viewing it as a “set it and forget it” technology.

- Design for Injection Resistance

- Isolate untrusted data from instructions. Ensure retrieval results are handled distinctly and require explicit references to original instructions before executing actions. OWASP guidance supports fostering layered defenses.

- Utilize capability sandboxes to limit model functions and mandate approvals for sensitive activities, incorporating role-based access.

-

Adopt a multifaceted defense approach. By combining static checks, red teams, content firewalls, and agent oversight, you can prepare for emerging threats while remaining adaptable to new challenges.

-

Hedge Against Data Scarcity and Collapse

- Focus on acquiring high-quality, rights-cleared human data in your specific areas. Treat this as a valuable resource.

- Carefully balance the use of synthetic data, applying research-backed ratios and continuously monitoring for shifts in performance. Instrument your training methods to catch early signs of degradation.

-

Explore diverse data collection methodologies like voice, images, and sensor data beyond traditional text sources.

-

Engineer for Reliability

- Assess workflows based on end-to-end success rather than isolated tasks. Monitor minimum performance levels to identify where models may struggle, especially in multi-step processes.

- Implement hybrid systems that combine LLMs with retrieval mechanisms, structured memory, and deterministic plans that evaluate every decision along the way. Treat the LLM as a collaborator, with separate components checking facts and enforcing guidelines.

- Ensure human oversight in scenarios with significant stakes, establishing review procedures based on risk levels.

Future of AI

These challenges are deemed “unfixable” with the current generation of models because they stem from their fundamental training processes and data inputs. However, promising research avenues might alter this landscape:

– Developing architectures that differentiate parsing, planning, and execution could address instruction-data confusion.

– Systems that learn from real interactions, rather than relying solely on static data, may enhance model reliability and causal understanding.

– Improved industry coordination on watermarking and data provenance could help mitigate feedback loops, though achieving consensus remains a challenge.

Conclusion

While current frontier AI demonstrates remarkable capabilities, three core challenges persist: instruction-data confusion leading to prompt injection, limitations in data quality driving model collapse, and jagged intelligence causing inconsistent reliability. These issues are not mere glitches; they emerge from the foundational design and training of existing models. By recognizing them as design constraints, we can effectively harness AI’s power responsibly.

As you move through late 2025, it’s essential to acknowledge these limitations. Plan for both containment and verification. Invest in collecting high-quality data, and prioritize measuring reliability in your practical workflows. Shifting your focus from simply achieving benchmark scores to building trust within AI systems will be the key to success.

FAQs

1. Can prompt injection be fixed with better prompts or more fine-tuning?

While improved prompts may reduce the success rate of attacks, they won’t eliminate the core ambiguity where models can treat instructions and data as indistinct text. Use layered defenses and require human approvals for sensitive operations.

2. Will increasing data and computational power resolve these issues?

More compute helps until you reach the limits of available data. Research indicates that solely relying on synthetic data inevitably leads to collapse. The solution lies in diversifying your data sources and grounded learning methods.

3. Do agents and tools stop hallucinations and brittleness?

While tools do help reduce errors, they don’t change the model’s fundamental objectives nor guarantee reliability in extended workflows. Enterprises frequently observe sharp declines in performance during multi-step tasks without solid safeguards.

4. Are video and multimodal models the answer to these challenges?

They indeed expand potential applications, but current evaluations indicate inconsistent performance in tasks requiring spatial reasoning and causal coherence. Reliability must improve significantly before they can function as general-purpose solutions.

5. What immediate actions should teams take?

Treat AI as a powerful tool: sandbox it, verify its outputs, and thoroughly evaluate it. Prioritize high-quality human data, assess prompt injection risks, and review entire workflows instead of focusing solely on individual tasks. Follow OWASP’s LLM guidelines to effectively manage risks.

Thank You for Reading this Blog and See You Soon! 🙏 👋

Let's connect 🚀

Latest Insights

Deep dives into AI, Engineering, and the Future of Tech.

I Tried 5 AI Browsers So You Don’t Have To: Here’s What Actually Works in 2025

I explored 5 AI browsers—Chrome Gemini, Edge Copilot, ChatGPT Atlas, Comet, and Dia—to find out what works. Here are insights, advantages, and safety recommendations.

Read Article