Unveiling Complexities: The Mathematics of Attention Mechanisms

Introduction to Attention Mechanisms

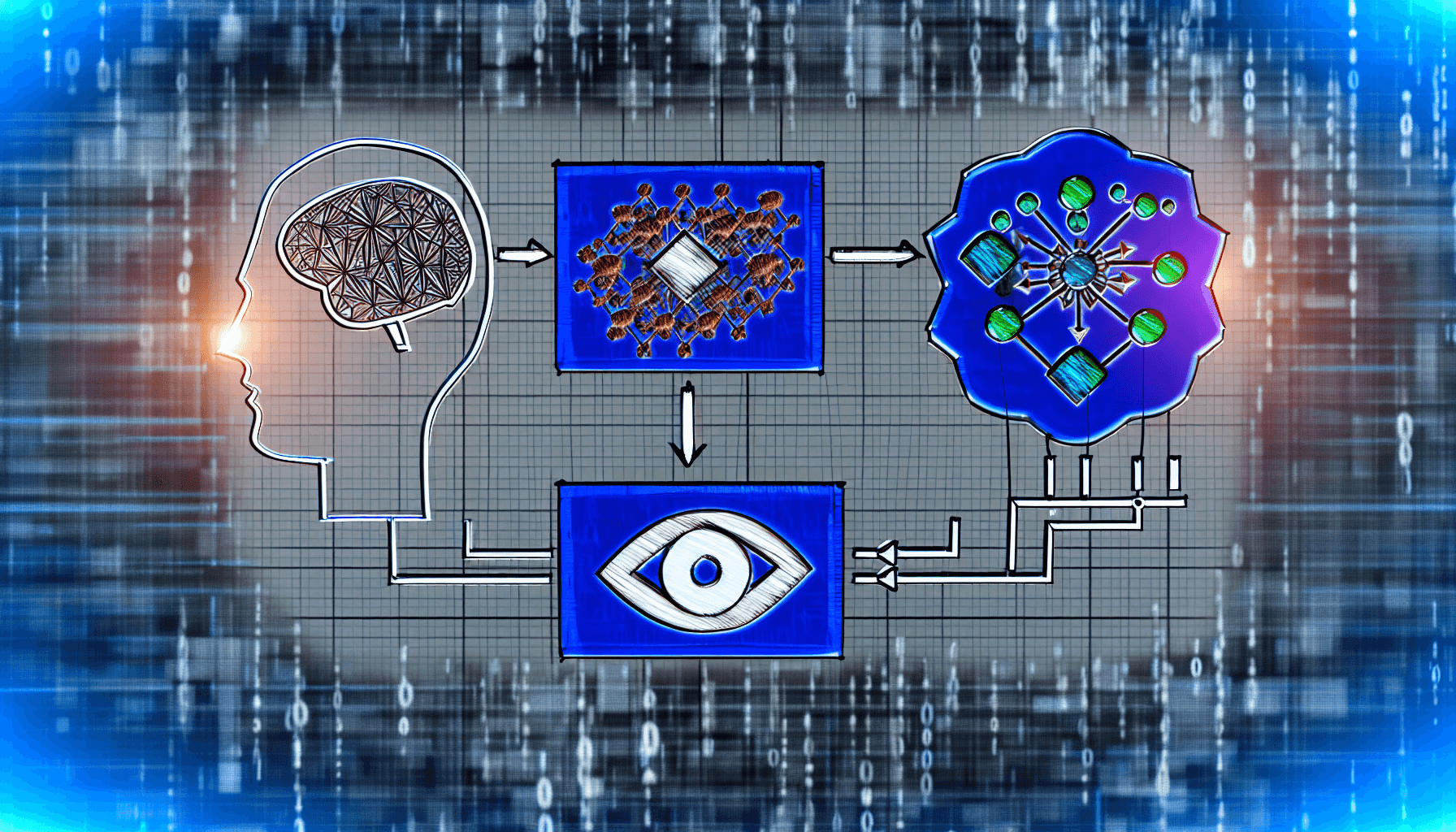

Attention mechanisms have transformed artificial intelligence, particularly deep learning. Inspired by human cognitive processes, they help models focus on the most relevant parts of the input data, improving both the quality of their predictions and the efficiency with which they process information. This post walks through the mathematics that makes these mechanisms work, offering a clearer understanding of their significance and application in modern AI systems.

Understanding the Concept of Attention

In machine learning, attention mechanisms can be thought of as components that allow models to dynamically highlight relevant features of the input data. This concept is akin to the human ability to pay attention to particular aspects of a visual scene or a conversation while ignoring others. The effectiveness of attention mechanisms in neural networks, notably in models like Transformer networks used in natural language processing (NLP), illustrates their critical role in achieving state-of-the-art results in many AI applications.

The Basic Mathematical Model

The simplest form of an attention mechanism can be described as a function that maps a query and a set of key-value pairs to an output. The output is a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key.

Output = Σ_i weight_i · value_i

This compatibility function, often a dot product, measures how much focus to place on each part of the input data. A softmax then turns the raw scores into weights that are positive and sum to 1:

weight_i = softmax_i(query ⋅ key_i)

In the Transformer, the queries, keys, and values are packed into matrices Q, K, and V, and the dot products are divided by √d_k, where d_k is the key dimension, so that large dot products do not push the softmax into regions with vanishingly small gradients:

Attention(Q, K, V) = softmax(QK^T / √d_k) V
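To make the weighted-sum formula concrete, here is a minimal pure-Python sketch of dot-product attention for a single query. It assumes the scaled variant used in the Transformer (scores divided by √d_k), which can be switched off with the `scale` flag; the function names and the toy vectors are illustrative choices, not part of any library.

```python
import math

def softmax(scores):
    # Subtracting the max score avoids overflow; the resulting weights are unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(query, keys, values, scale=True):
    """Attention for one query over key-value pairs (illustrative sketch).

    Scores are the dot products query . key_i, optionally divided by sqrt(d_k);
    the output is the softmax-weighted sum of the value vectors.
    """
    d_k = len(query)
    scores = [dot(query, k) for k in keys]
    if scale:
        scores = [s / math.sqrt(d_k) for s in scores]
    weights = softmax(scores)
    dim_v = len(values[0])
    # Output = sum_i weight_i * value_i, computed one output coordinate at a time.
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]
    return output, weights

# Toy example: the query points in the same direction as the first key.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
out, w = attend(query, keys, values)
print([round(x, 3) for x in w], [round(x, 3) for x in out])
```

The key most aligned with the query receives the largest weight, so the output is pulled toward the first value vector while the other values still contribute in proportion to their weights.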

Advanced Formulations of Attention

As the field has evolved, so have the formulations of attention. One important extension is multi-head attention: instead of computing a single attention function, the model projects the queries, keys, and values h times with different learned matrices and runs attention on each projection in parallel. This allows the model to jointly attend to information from different representation subspaces at different positions, enriching its ability to learn and generalize.

Multi-head attention equation:

MultiHead(Q,K,V) = Concat(head_1, ..., head_h)W^O

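where W^O is a learned output projection and each head runs attention on its own learned projections W_i^Q, W_i^K, and W_i^V of the queries, keys, and values: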

head_i = Attention(QW_i^Q, KW_i^K, VW_i^V)
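The two equations above can be sketched in plain Python. This assumes that `Attention` is the scaled dot-product form softmax(QK^T / √d_k) V used in the Transformer, and that each head uses d_k = d_model / h; the helper names, the toy sizes, and the random Gaussian initialization are hypothetical illustration choices, not a reference implementation.

```python
import math
import random

def matmul(A, B):
    # (n x m) @ (m x p) -> (n x p), with matrices stored as lists of rows.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def softmax_rows(S):
    out = []
    for row in S:
        m = max(row)  # stabilize the exponentials
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def attention(Q, K, V):
    # softmax(Q K^T / sqrt(d_k)) V, one row per query position.
    d_k = len(K[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(Q, transpose(K))]
    return matmul(softmax_rows(scores), V)

def multi_head(Q, K, V, W_q, W_k, W_v, W_o):
    # head_i = Attention(Q W_i^Q, K W_i^K, V W_i^V)
    heads = [attention(matmul(Q, W_q[i]), matmul(K, W_k[i]), matmul(V, W_v[i]))
             for i in range(len(W_q))]
    # Concat(head_1, ..., head_h): join head outputs feature-wise at each position.
    concat = [sum((head[pos] for head in heads), []) for pos in range(len(Q))]
    return matmul(concat, W_o)  # final projection by W^O

def random_matrix(rows, cols, rng):
    # Hypothetical initialization, only so the example has concrete numbers.
    return [[rng.gauss(0.0, 1.0 / math.sqrt(rows)) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_model, h, n = 8, 2, 3
d_k = d_model // h
X = random_matrix(n, d_model, rng)  # three positions, eight features each
W_q = [random_matrix(d_model, d_k, rng) for _ in range(h)]
W_k = [random_matrix(d_model, d_k, rng) for _ in range(h)]
W_v = [random_matrix(d_model, d_k, rng) for _ in range(h)]
W_o = random_matrix(h * d_k, d_model, rng)
out = multi_head(X, X, X, W_q, W_k, W_v, W_o)
print(len(out), len(out[0]))  # 3 8
```

Because each head works in a d_k-dimensional subspace and W^O maps the concatenation back to d_model, the output has the same shape as the input, which is what lets multi-head attention layers be stacked.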

Applications in Neural Networks

In neural networks, attention lets a model weight the parts of a sequence that matter most for the current output, which is crucial for tasks like translation, summarization, and even image recognition. Because every position can attend directly to every other position, attention captures dependencies regardless of their distance in the input or output sequences. This makes long-range dependencies far easier to model than in recurrent networks, where information must pass through every intermediate step.

Case Studies: Attention in Action

To see these mathematical concepts in practice, consider the Transformer architecture, which uses self-attention to process text: the queries, keys, and values all come from the same sequence. Earlier sequence-to-sequence models built on recurrent layers process tokens one at a time, because each step needs the previous hidden state. Transformers instead compute attention over all positions at once, so training parallelizes well across the sequence and dependencies between distant tokens are modeled directly, leading to models that are faster to train and more adaptable.
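A small sketch can show why self-attention parallelizes where a recurrent layer cannot. For simplicity it assumes identity projections, so the token vectors themselves serve as queries, keys, and values (a real Transformer learns separate projection matrices); the function name and the three toy token vectors are hypothetical.

```python
import math

def self_attention_weights(X):
    """n x n attention weights where every token attends to every token.

    Assumes identity projections: queries, keys, and values are the rows of X.
    """
    d = len(X[0])
    weights = []
    for q in X:
        # Each row reads only X, never a previously computed row, so all rows
        # could be computed in parallel. An RNN needs h_(t-1) before h_t.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
W = self_attention_weights(X)
# Each output position is a weighted mix of all positions, including the last
# token, which the first token reaches in a single step regardless of distance.
Y = [[sum(w * x[j] for w, x in zip(row, X)) for j in range(len(X[0]))] for row in W]
for row in W:
    print([round(v, 3) for v in row])
```

Every row of the weight matrix sums to 1, and the first token's row already includes a nonzero weight on the last token, which is the "direct" long-range connection described above.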

Challenges and Future Directions

Despite their success, attention mechanisms come with challenges. They are computationally intensive: standard self-attention compares every position with every other, so a sequence of length n produces an n × n score matrix per head, and compute and memory grow quadratically with input length. Large inputs and many heads in multi-head configurations amplify this cost. Researchers and developers continue to explore new mathematical approaches and optimizations to mitigate these challenges, extending the capabilities and applications of attention mechanisms in AI.
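A back-of-the-envelope calculation shows where the cost comes from. The sketch below is a rough estimate under stated assumptions: it counts only the multiplications in QK^T and in weights × V for one self-attention layer, assumes d_k = d_model / h and 4-byte (float32) scores, and ignores the linear projections, the softmax, and memory-saving attention kernels. The function name and the example sizes are hypothetical.

```python
def attention_cost(seq_len, d_model, num_heads, bytes_per_value=4):
    """Rough per-layer cost of the score computation in standard self-attention.

    Returns (multiplications, bytes needed to hold all score matrices).
    """
    d_k = d_model // num_heads
    # Per head: n*n dot products of length d_k for Q K^T, then an
    # (n x n) @ (n x d_k) product for the weighted sum of values.
    mults = num_heads * (seq_len * seq_len * d_k + seq_len * seq_len * d_k)
    # Per head: one n x n matrix of attention scores.
    score_entries = num_heads * seq_len * seq_len
    return mults, score_entries * bytes_per_value

for n in (1024, 2048):
    mults, mem = attention_cost(n, d_model=512, num_heads=8)
    print(n, mults, mem / 2**20, "MiB")
```

Doubling the sequence length quadruples both numbers. Doubling the number of heads at a fixed d_model leaves the multiplication count unchanged (each head gets a smaller d_k), but it doubles the number of score entries that must be stored.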

Conclusion

The mathematics behind attention mechanisms offers a fascinating glimpse into the capabilities of modern AI systems. As we continue to unravel these mathematical underpinnings, the scope for innovation and enhancement in machine learning models expands, underscoring the profound impact of attention mechanisms on the field of artificial intelligence.

Thank You for Reading this Blog and See You Soon! 🙏 👋
