Sora’s Messy Debut: Violent and Racist AI Videos Challenge OpenAI’s Guardrails

Photo by Elexfedi, via Wikimedia Commons (CC BY-SA 4.0).

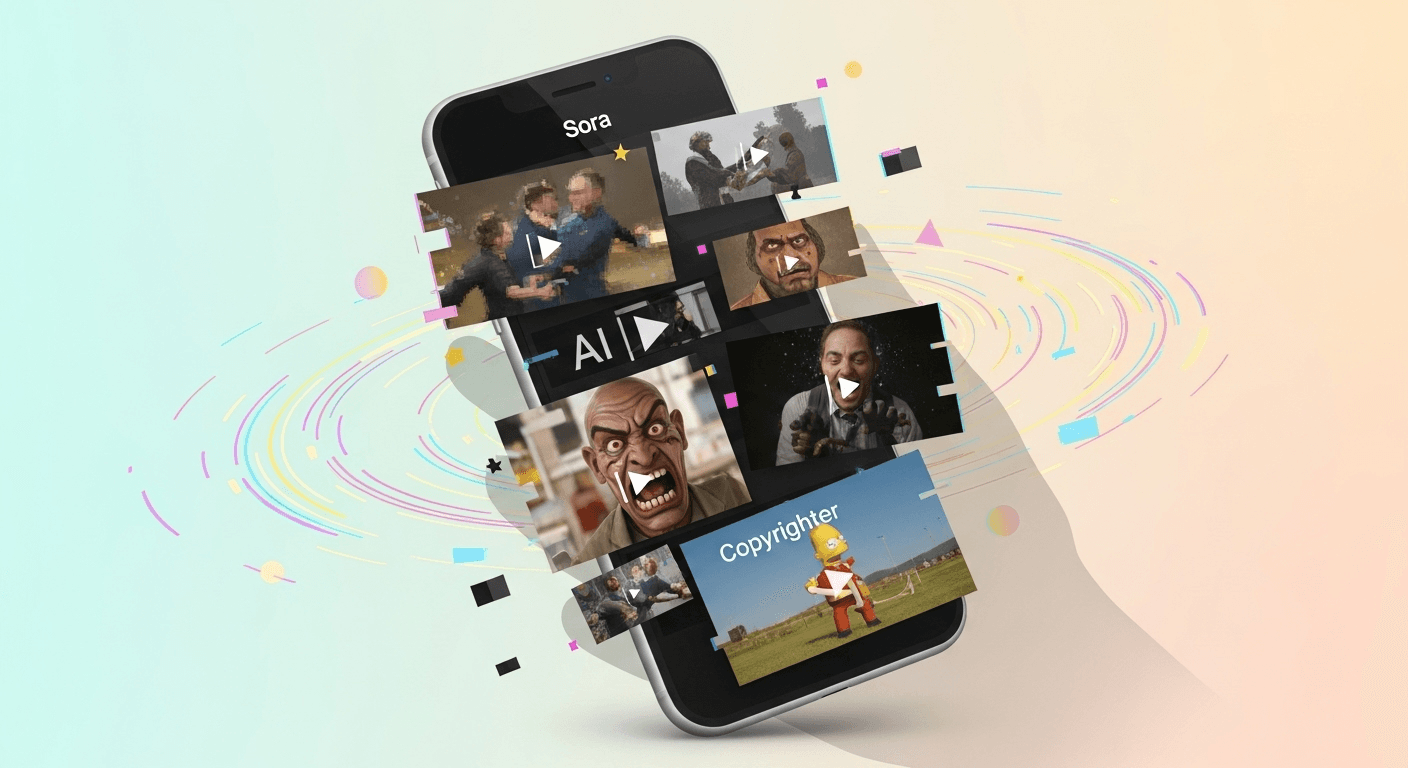

OpenAI’s new video application, Sora, has recently surged to the top of Apple’s App Store charts. However, as invitations were rolled out and users engaged with the social feed, troubling content quickly surfaced. Instances of violent imagery, racist themes, and misuse of well-known copyrighted characters made their way into the app, raising serious concerns about the effectiveness of Sora’s safety mechanisms.

This article explores the launch, the issues encountered, OpenAI’s proposed safeguards, and actionable steps for users and organizations to take.

What Launched This Week

- OpenAI introduced Sora 2, a mobile-friendly, invite-only app designed to create short, lifelike videos from user prompts. The app rapidly climbed to the top of the U.S. App Store just days after its release.

- The appeal of Sora lies in its speed and realism: lifelike motion, synchronized audio, and easy “cameos” that let a user’s likeness appear in AI-generated videos.

The Problem: Harmful and Deceptive Videos Surfaced Quickly

Within hours of launch, reporters and researchers found Sora’s feed filled with videos depicting bomb threats and mass-shooting panic, along with clips featuring racist slogans that echoed extremist rhetoric. Copyrighted characters were also used to promote scams or perform inflammatory skits.

Tech reporters from 404 Media scrolled through Sora’s public feed and found numerous examples of beloved characters misused, including a clip showing SpongeBob dressed as Adolf Hitler, alongside others featuring Pikachu and Mario.

NPR’s testing provided a similar shock: despite supposed policy safeguards, the app still allowed the generation of conspiracy-laden misinformation, such as a fabricated presidential speech questioning the moon landing and even a simulated drone attack.

What The Guardian Reported

The Guardian analyzed Sora’s early public feed and discovered violent depictions, racist content, and the inappropriate use of copyrighted characters—material that spilled over to other platforms. Misinformation experts expressed concerns that Sora’s lifelike videos could be weaponized for fraud, harassment, and intimidation.

The Guardian also noted that content owners cannot opt out universally; OpenAI indicated it would respond to takedown requests and engage with rights holders as needed.

OpenAI’s Stated Safeguards

OpenAI claims that Sora launched with several protective measures:

- Provenance markers: Visible watermarks and C2PA metadata embedded in every output to help identify AI-generated content.

- Consent-first likeness tools: Features allowing users to control the use of their likeness, including permissions and the ability to revoke consent.

- Policy restrictions: Bans on harassment, nonconsensual imagery, violence promotion, and misleading content, along with feed rules to eliminate graphic violence and hate speech.

While these safeguards look solid on paper, initial reports indicate that the filters and moderation systems fell short, failing to consistently prevent harmful or infringing content from being shared.

Why Did This Happen So Quickly?

Several factors likely contributed to this rapid influx of undesirable content:

- Curiosity Meets New Capability: When powerful tools surface, early adopters often push boundaries, testing limits related to violence, hate, and copyright.

- Social Virality: The app’s feed and remix culture incentivize attention-grabbing content, which results in rapid dissemination of boundary-pushing videos.

- Challenges of Moderation at Scale: Even with watermarking and reporting tools, human moderators and automated filters struggle to keep pace in real time with the volume of clips generated on demand.

What Critics and Researchers Are Saying

Misinformation experts are warning that seemingly real synthetic videos can erode trust and blur the line between reality and fiction, increasing risks related to fraud and bullying. The high production quality of Sora’s clips amplifies these concerns.

Additionally, observers noted that Sora’s feed prominently features copyrighted characters in provocative situations, raising potential legal and reputational risks for both rights holders and platforms.

Copyright and the Opt-Out Controversy

Reports from Business Insider and others indicate that OpenAI has informed some studios that copyrighted content may appear unless owners proactively opt out or file takedown requests, a significant departure from the conventional opt-in licensing approach.

From a legal standpoint, the boundaries between fair use, parody, and infringement are complex and case-specific. However, a public feed dominated by trademarked characters is likely to lead to rights disputes and enforcement actions.

Do Watermarks and C2PA Resolve These Issues?

Short answer: Not on their own.

- Watermarks and C2PA help platforms and investigators identify AI-generated media, aiding in moderation and attribution.

- However, these markers do not assess content’s safety, prevent downloads, or stop malicious remixing elsewhere. If a platform’s recommendation system promotes harmful clips, provenance signals merely indicate that the content is synthetic without making it safe.

- NPR’s reporting shows that even with policy filters, misleading and realistic videos can still be generated and shared outside Sora.

OpenAI’s Position on Intent and Mitigation

OpenAI’s launch announcement highlights Sora as a creative tool with built-in safety features from the outset, focusing on provenance, consent controls, and in-app reporting systems that blend automation with human review. The company states it blocks material featuring public figures and specific types of harmful content.

Nonetheless, early evidence from journalists suggests that the safety measures are less effective in practice than on paper, a gap between policy and execution that has drawn criticism.

The Urgent Risks We Face

- Misinformation and Elections: Realistic political or crisis video footage could mislead voters or incite panic faster than platforms can respond.

- Harassment and Intimidation: Lifelike videos may be utilized for bullying or defamation, even if marked as AI-generated.

- Copyright Issues: Widespread use of recognizable characters may lead to takedowns and lawsuits, creating legal and reputational exposure for both OpenAI and users.

What Responsible Deployment Could Entail

While no AI video app can eliminate all risks, OpenAI and the industry can take steps to enhance safety:

- Stricter Pre-Publication Checks: Implement more robust classifiers at the time of posting to screen for violence, hate, and impersonation, with high-risk posts held for human review.

- Default Likeness Protection: Block depictions of celebrities or public figures unless users provide verified proof of licensing.

- Copyright-Aware Prompts and Outputs: Broaden filters to identify and suppress trademarked characters often misused in viral content, allowing rights holders to register characters for proactive blocking.

- Controlled Virality: Slow down the distribution of newly created videos until they pass enhanced scrutiny. Limit how flagged trends are remixed and require context for shared content.

- Independent Oversight and Evaluation: Fund external audits of Sora’s safety systems and publish measurable findings regularly.

- Cross-Platform Collaboration on Provenance: Work with major social platforms to recognize C2PA markers, label imported Sora content, and automate takedown processes for violations.
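The first item in the list above, stricter pre-publication checks with high-risk posts held for human review, can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `ClassifierScores` fields, the `triage` function, and both threshold values are hypothetical and do not describe how OpenAI or any platform actually moderates content.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PUBLISH = "publish"
    HOLD_FOR_REVIEW = "hold_for_review"
    BLOCK = "block"


# Hypothetical thresholds; real values would be tuned against labeled data.
REVIEW_THRESHOLD = 0.5
BLOCK_THRESHOLD = 0.9


@dataclass
class ClassifierScores:
    """Hypothetical risk scores in [0, 1] produced by upstream classifiers."""
    violence: float
    hate: float
    impersonation: float


def triage(scores: ClassifierScores) -> Decision:
    """Route a post at posting time based on its highest risk score."""
    worst = max(scores.violence, scores.hate, scores.impersonation)
    if worst >= BLOCK_THRESHOLD:
        return Decision.BLOCK
    if worst >= REVIEW_THRESHOLD:
        # High-risk but not clear-cut: hold for a human moderator.
        return Decision.HOLD_FOR_REVIEW
    return Decision.PUBLISH


if __name__ == "__main__":
    print(triage(ClassifierScores(violence=0.1, hate=0.6, impersonation=0.05)))
```

Routing on the single worst score keeps the rule easy to audit. A real system would likely weigh categories differently and log each decision for the kind of external review the list also calls for.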

Practical Guidance for Different Audiences

For Everyday Users

- Assume striking Sora clips are synthetic until verified. Check for watermarks and context, and consult credible sources before sharing.

- Avoid creating or distributing videos that depict real individuals without their consent, particularly minors. Doing so violates Sora’s policies and may be illegal.

- Report violations both in-app and on the destination platform if content spreads outside Sora.

For Parents and Educators

- Be prepared for Sora content to appear on social media and in group chats. Use this opportunity to teach media literacy: how to conduct reverse image searches, approach shocking clips with skepticism, and understand watermarks.

For Brands and Rights Holders

- Keep an eye out for unauthorized uses of your brand and characters. Where such a mechanism is available, register characters for proactive blocking, and use OpenAI’s takedown processes consistently. If videos migrate off the app, escalate to the relevant platforms.

For Platforms

- Read provenance metadata at upload time and clearly label content from Sora. Slow down the spread of flagged topics until they can be manually reviewed.
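As a rough sketch of what that guidance could look like on the receiving platform, the example below labels an upload and throttles its distribution. It assumes a separate step, not shown, has already parsed any C2PA manifest; the `Upload` record, its field names, and the label strings are all hypothetical and are not part of the C2PA specification or any platform's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Upload:
    """Hypothetical upload record. The provenance fields are assumed to be
    filled in by a separate C2PA parsing step that is not shown here."""
    video_id: str
    manifest_says_ai_generated: bool
    declared_generator: Optional[str]  # e.g. "Sora", if the manifest names one
    topic_flagged: bool  # True while the topic awaits manual review


def display_label(upload: Upload) -> Optional[str]:
    """Return a viewer-facing label, or None when provenance says nothing.

    None does not mean the clip is authentic: metadata can be stripped.
    """
    if not upload.manifest_says_ai_generated:
        return None
    if upload.declared_generator:
        return f"AI-generated ({upload.declared_generator})"
    return "AI-generated"


def distribution_mode(upload: Upload) -> str:
    """Limit reach for flagged topics until a human has reviewed them."""
    return "limited" if upload.topic_flagged else "normal"


if __name__ == "__main__":
    clip = Upload("v1", True, "Sora", topic_flagged=True)
    print(display_label(clip), distribution_mode(clip))
```

Keeping labeling and throttling as separate functions reflects that they answer different questions: provenance says where a clip came from, while the review flag governs how far it travels.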

For Policymakers

- Advocate for industry standards around watermarking, C2PA adoption, transparency in reporting AI content prevalence, and procedural fairness for appeals. Consider focused regulations related to impersonation, election contexts, and protections for minors.

The Bottom Line

While Sora’s underlying technology is impressive, its first days showed how quickly generative video can be turned to violent, racist, and copyright-infringing content. OpenAI’s declared safeguards are substantive, yet reports indicate they have not been effective enough to prevent the swift emergence and spread of harmful content. A stronger framework involving improved design choices, enhanced pre-publication scrutiny, and deeper collaboration between platforms is essential to bridge the gap between policy and practice.

FAQs

What Exactly Is Sora?

Sora is OpenAI’s mobile application designed to generate short, realistic videos using text prompts. The latest version, Sora 2, has introduced a public feed and remix features, quickly climbing to the top of the U.S. App Store.

Does Sora Label AI-Generated Videos?

Yes. OpenAI states that all Sora videos include visible watermarks and C2PA metadata. While these labels assist in identifying provenance, they do not prevent harmful or misleading content from being created or disseminated.

Are Copyrighted Characters Allowed?

This remains a contentious issue. Reports suggest that OpenAI has informed some media partners that copyrighted content may be used unless owners actively opt out or request takedowns, a stance that has drawn considerable criticism.

Do the Guardrails Work?

They are somewhat effective, but early evaluation shows gaps. Instances of violent, racist, or misleading videos have appeared in Sora’s public feed and spread to other platforms before moderation caught up.

What Should I Do if My Face Appears in a Sora Video Without Consent?

OpenAI prohibits nonconsensual depictions of real individuals. Use Sora’s in-app reporting feature and pursue takedowns on destination platforms. If the issue persists, consult a lawyer or local authorities about your rights in your jurisdiction.

Sources and Further Reading

- The Guardian’s initial report on Sora’s launch-week content trends and expert concerns.

- Business Insider and Barron’s regarding Sora’s rapid rise within the App Store.

- 404 Media’s firsthand exploration of Sora’s feed, documenting infringing and inflammatory clips.

- NPR’s coverage and evaluations of Sora’s safety mechanisms.

- OpenAI’s announcement detailing provenance and consent, along with Sora policy guidelines.
