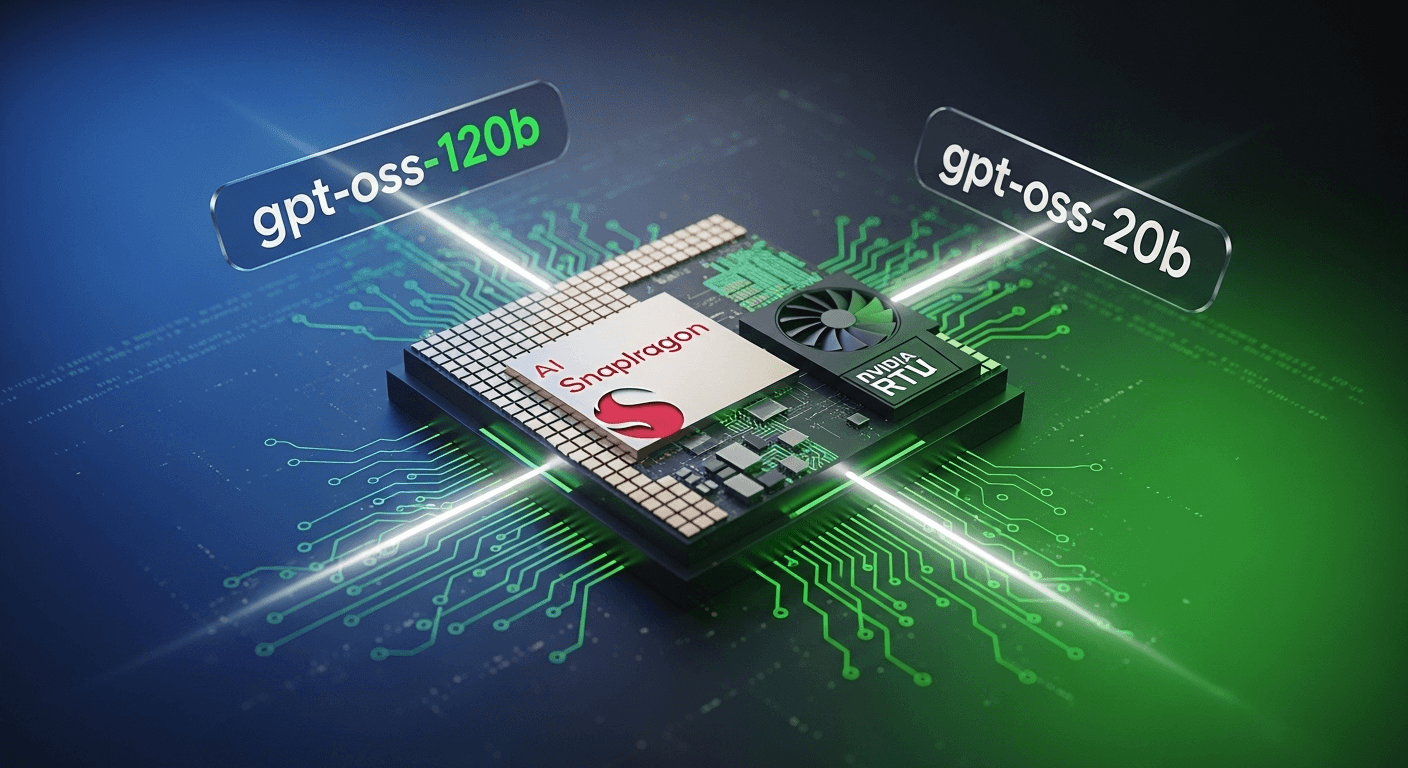

OpenAI Introduces Local AI with gpt-oss-120b and gpt-oss-20b Models for Snapdragon and RTX PCs

OpenAI is bringing capable models closer to users. Its latest offerings, gpt-oss-120b and gpt-oss-20b, are designed to run on modern PCs equipped with Qualcomm Snapdragon chips and NVIDIA RTX graphics cards. These models are not GPT-5, but they mark a significant shift toward faster, more private, on-device AI experiences.

Why This Matters

Running sophisticated language models locally was once an uphill battle, often limited to specialized environments. However, with advancements in mainstream hardware and optimized runtimes, it’s becoming increasingly achievable. Local AI offers several advantages:

- Lower Latency: Responses arrive without a network round trip to the cloud.

- Enhanced Privacy: Sensitive information remains on your device.

- Cost Efficiency: Reduced reliance on cloud infrastructure can lower expenses for frequently used workflows.

- Reliable Offline Functionality: Useful in low-connectivity or travel scenarios.

OpenAI’s focus on bringing gpt-oss models to Snapdragon PCs and NVIDIA RTX GPUs illustrates how quickly local AI is evolving across Windows devices.

What OpenAI Unveiled

As reported by Windows Central, OpenAI has announced the gpt-oss-120b and gpt-oss-20b models, designed for local inference on Windows laptops powered by Qualcomm Snapdragon chips, as well as on desktops with NVIDIA RTX graphics cards (Windows Central). Rather than striving for a distant goal like GPT-5, this announcement emphasizes practical, efficient, on-device performance.

While detailed technical documentation was not included in Windows Central’s coverage, the key takeaway is evident: OpenAI is prioritizing models that are ready for local deployment, aligning with current trends in Windows hardware.

The Hardware Landscape: Snapdragon PCs and RTX GPUs

Snapdragon PCs: Tailored for On-Device AI

Microsoft and Qualcomm are leading the charge for “AI PCs” powered by Snapdragon X chips, which come equipped with dedicated Neural Processing Units (NPUs). These NPUs handle AI workloads efficiently, allowing larger models to operate within the power limits of laptops. Qualcomm has repeatedly demonstrated on-device generative AI, including large language models and diffusion models, on Snapdragon platforms (Qualcomm). Microsoft, meanwhile, is integrating AI more deeply into Windows through Copilot experiences and a new category of Copilot+ PCs (Microsoft).

By creating local-friendly GPT versions for Snapdragon PCs, OpenAI aligns with this trend, providing developers and users with responsive AI tools that don’t rely on cloud computing for every interaction.

NVIDIA RTX: Making Local LLMs Practical on Desktops

NVIDIA RTX GPUs and the TensorRT-LLM stack have made local LLM inference significantly faster and more memory-efficient. NVIDIA has showcased optimized pipelines that allow popular open models to run on consumer GPUs with 8 GB to 24 GB of VRAM, depending on the model’s size and quantization (NVIDIA TensorRT-LLM). RTX “AI PCs” target local AI tasks and applications that benefit from GPU acceleration (NVIDIA).

By targeting RTX hardware, OpenAI meets many developers and tech enthusiasts on the machines they already use, making it easier to create, test, and deploy local-first AI applications.

What to Expect from gpt-oss-120b and gpt-oss-20b

While OpenAI hasn’t released full specifications for these models, the names suggest their parameter counts (roughly 120 billion and 20 billion, respectively). Here’s what that typically entails:

- gpt-oss-20b: A mid-sized model suitable for high-end laptops or desktops featuring RTX 4070/4080-class GPUs, particularly when using 4-bit or 8-bit quantization. On Snapdragon PCs, it may employ hybrid CPU, GPU, and NPU execution alongside aggressive optimizations.

- gpt-oss-120b: A larger model designed for desktops or workstations equipped with powerful RTX GPUs and ample VRAM. Expect heavy use of 4-bit quantization, along with streaming generation and carefully planned batching.
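As a rough illustration of the sizing above, the sketch below estimates how much memory the weights alone would need at different quantization levels. It assumes the parameter counts implied by the model names (20 billion and 120 billion), and the function name `weight_memory_gib` is hypothetical. It ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB.

    Assumption: every parameter is stored at the same bit width.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


# Parameter counts are inferred from the model names, not from a spec sheet.
for name, params in [("gpt-oss-20b", 20), ("gpt-oss-120b", 120)]:
    for bits in (16, 8, 4):
        gib = weight_memory_gib(params, bits)
        print(f"{name} @ {bits}-bit: ~{gib:.1f} GiB for weights")
```

Under these assumptions, a 20-billion-parameter model at 4 bits needs a little over 9 GiB for weights, while a 120-billion-parameter model at 4 bits still exceeds the 24 GB found on high-end consumer cards, which is why the larger model is framed as a workstation-class option.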

Generally, locally operated models may sacrifice some peak capabilities for enhanced privacy and reduced latency. The upside is immediate interactivity and control, though complex reasoning tasks might still be better suited for cloud-scale models.

How “Local GPT” Impacts Everyday Workflows

Consider how local AI can enhance everyday tasks that are sensitive to latency or privacy:

- Writing and Editing: Drafting emails, documents, and summaries without sending data to the cloud.

- Code Assistance: Quick inline completions and refactors in your IDE, keeping your code private.

- Customer Support Triage: On-device processing enables redaction and preliminary responses before engaging the cloud.

- Productivity Copilots: Task scheduling, file searches, and note summarization with local files.

- Creative Workflows: Storyboarding, brainstorming, and local image generation.

With models like gpt-oss-20b and gpt-oss-120b, these activities aim to feel responsive and confidential, especially on hardware specifically optimized for AI performance.
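The redaction step mentioned under customer support triage can be sketched without any model at all. The example below is a minimal illustration, assuming that masking email addresses and phone numbers is sufficient. The patterns and the `redact` helper are hypothetical and far from exhaustive, and a real deployment would need a vetted PII detection approach.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask emails and phone numbers before text leaves the device."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


ticket = "Contact me at jane.doe@example.com or +1 (555) 010-2345 about order 7781."
print(redact(ticket))
```

Only the redacted string would then be passed to a cloud service, while the original stays on the device.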

Performance Factors and Setup Considerations

Factors Influencing Local LLM Speed

Several aspects can affect the efficiency of running a local model:

- Quantization: Using 4-bit or 8-bit quantization reduces memory usage and can improve processing speed, usually at the cost of a modest loss in quality.

- Context Length: Longer prompts and histories require more computation per generated token and more memory for the attention cache.

- Batching and Streaming: Streaming outputs can improve perceived speed, while batching enhances overall throughput.

- Thermal Management and Power: Laptops may throttle performance during extended heavy workloads.

- Kernel Optimizations: Libraries such as TensorRT-LLM, ONNX Runtime, and DirectML supply the optimized kernels that largely determine how well a model uses the hardware.
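To illustrate the context-length factor, the sketch below estimates the size of a standard key/value attention cache, which grows linearly with the number of tokens held in context. The architecture values in `HYPOTHETICAL_MODEL` are made up for illustration and are not the gpt-oss configuration. Models that use sliding-window or other sparse attention schemes would cache less.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Size of a full-attention KV cache for one sequence.

    The factor of 2 accounts for storing both keys and values.
    bytes_per_value=2 assumes 16-bit cache entries.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical architecture, chosen only to make the arithmetic concrete.
HYPOTHETICAL_MODEL = dict(n_layers=32, n_kv_heads=8, head_dim=128)

for seq_len in (2_048, 8_192, 32_768):
    mib = kv_cache_bytes(seq_len, **HYPOTHETICAL_MODEL) / 2**20
    print(f"{seq_len:>6} tokens -> {mib:,.0f} MiB of KV cache")
```

With these assumed values, each token adds 128 KiB to the cache, so a 32,768-token context consumes about 4 GiB on top of the weights.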

Preparing an RTX Desktop

If you plan to run models on an NVIDIA RTX GPU, a typical setup involves these steps:

- Ensure that you have the latest NVIDIA Game Ready or Studio drivers.

- Install CUDA and cuDNN as necessary for your chosen runtime.

- Set up TensorRT-LLM or another inference framework that supports it (NVIDIA TensorRT-LLM).

- Use quantized weights so the model fits within your GPU’s VRAM.
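Before choosing a quantization level, it helps to know how much VRAM is actually available. The sketch below queries `nvidia-smi`, which ships with the NVIDIA driver, for each GPU's name and total memory. The helper names `parse_gpu_list` and `query_gpus` are hypothetical, and the script returns an empty list if the tool is not installed.

```python
import shutil
import subprocess


def parse_gpu_list(csv_text: str) -> list[tuple[str, int]]:
    """Parse 'name, memory_in_MiB' lines into (name, MiB) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so GPU names containing commas survive.
        name, mem_mib = (part.strip() for part in line.rsplit(",", 1))
        gpus.append((name, int(mem_mib)))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Return installed NVIDIA GPUs, or an empty list if none are visible."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    return parse_gpu_list(result.stdout) if result.returncode == 0 else []


for gpu_name, total_mib in query_gpus():
    print(f"{gpu_name}: {total_mib / 1024:.1f} GiB VRAM")
```

Comparing the reported figure against the estimated weight size gives a quick first check on whether a given model and quantization level is worth attempting on that card.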

Preparing a Snapdragon PC

On Windows on Arm laptops, anticipate that developer tools will rely on:

- ONNX Runtime and DirectML for GPU/NPU acceleration on inference tasks.

- Qualcomm’s AI Engine and SDKs available through the Qualcomm AI Hub (Qualcomm AI Hub).

- Windows 11 features optimized for the Arm64 architecture and AI tasks (Microsoft Docs).
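As a minimal sketch of how an application might choose an accelerator with ONNX Runtime, the example below checks which execution providers the installed build exposes and picks the first one from a preference list. The preference order and the `pick_provider` helper are assumptions for illustration. Whether gpt-oss will ship in a form that ONNX Runtime can load is not confirmed by the source.

```python
# Preference order is an assumption: Qualcomm NPU first, then DirectML, then CPU.
PREFERRED = ["QNNExecutionProvider", "DmlExecutionProvider", "CPUExecutionProvider"]


def pick_provider(available, preferred=PREFERRED):
    """Return the first preferred provider that the runtime reports, else None."""
    for provider in preferred:
        if provider in available:
            return provider
    return None


try:
    import onnxruntime as ort
    available = ort.get_available_providers()
except ImportError:
    # onnxruntime is not installed; nothing to select from.
    available = []

print("Available providers:", available)
print("Selected provider:", pick_provider(available))
```

The selected name could then be passed in the `providers` list when creating an `onnxruntime.InferenceSession` for a compatible model file.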

Exact installation procedures will vary based on how OpenAI packages gpt-oss models and the runtime bindings they support upon release.

Comparing gpt-oss Models with Other Local-First Solutions

OpenAI is not alone in this move toward capable local models. Here’s how it fits into the broader landscape:

- Meta Llama 3/3.1: Open-weight models ranging from 8B to 405B parameters, widely used for local and server inference (Meta).

- Microsoft Phi-3: Compact, high-quality models optimized for edge and mobile scenarios (Microsoft).

- Mistral Models: High-performance open-weight models and tools sought after by local inference users (Mistral).

- NVIDIA NIM and RTX AI: Frameworks and tools for implementing models across client and server GPUs (NVIDIA NIM).

OpenAI’s entry into this domain with gpt-oss models suggests a dual strategy: maintain flagship models in the cloud for optimal performance while offering tuned, local-first versions for increased speed, privacy, and user control.

Licensing, Distribution, and Safety

The Windows Central report does not outline details concerning licensing, distribution channels, or policy constraints. These aspects are essential for developers looking to integrate these models into commercial software. Until OpenAI releases model cards and licenses, it’s prudent to adopt common due diligence practices:

- Verify usage rights and distribution terms before deploying products.

- Review safety protocols and establish guardrails suited to your use case.

- Stay informed on updates, as local models can benefit from regular optimization releases.

If OpenAI follows the established practices of local inference ecosystems, expect quantized versions, sample applications, and runtime-specific guidance to be provided.

Practical Examples and Early Ideas

- Private Meeting Notes: Transcribe and summarize locally, sharing selectively with the cloud.

- Local Coding Assistant: A lightweight assistant that retains proprietary code on your device.

- On-Device Retrieval-Augmented Generation: Access information from local files, knowledge bases, and emails without relying on network access.

- Travel Mode: Use a smaller local model for quick tasks when offline, reverting to a cloud model when online.
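The retrieval half of on-device retrieval-augmented generation can be sketched with the standard library alone. The example below ranks local notes against a question using bag-of-words cosine similarity and assembles a prompt for whatever local model is available. The note contents, file names, and helper functions are all hypothetical, and a production system would more likely use embedding-based search.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k document names that share terms with the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), name)
              for name, text in docs.items()]
    scored.sort(reverse=True)
    return [name for score, name in scored[:k] if score > 0]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Assemble retrieved notes and the question into one prompt string."""
    context = "\n".join(f"[{n}] {docs[n]}" for n in retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical local notes standing in for files on disk.
NOTES = {
    "standup.txt": "Team agreed to ship the billing fix on Friday.",
    "travel.txt": "Flight to Lisbon departs Tuesday at 9am.",
    "ideas.txt": "Brainstorm: offline mode for the mobile app.",
}

print(build_prompt("When do we ship the billing fix?", NOTES, k=1))
```

Because both the search and the prompt assembly run locally, no file contents need to leave the machine unless the user chooses to send the final prompt elsewhere.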

Key Takeaways

- OpenAI’s gpt-oss-120b and gpt-oss-20b models are designed for local inference on Snapdragon PCs and NVIDIA RTX GPUs (Windows Central).

- These models are not GPT-5. They are practical solutions aimed at improving latency, privacy, and control for everyday tasks.

- The existing hardware ecosystem is ready: Qualcomm’s Snapdragon X platform and NVIDIA’s RTX GPUs can accelerate local LLMs.

- Developers should be attentive to licensing, packaging, and tooling details as OpenAI releases model documentation.

FAQs

Are gpt-oss-120b and gpt-oss-20b Fully Offline?

Yes, these models are designed for local inference, allowing them to function without an internet connection after installation. However, applications might still offer cloud integration for larger tasks or updates.

Do I Need an RTX 4090 to Use These Models?

No, various RTX GPUs can run local LLMs effectively when configured with the appropriate quantization and settings. Performance will vary based on VRAM availability, power, and runtime optimizations.

Will These Models Be Accessible Through an API?

Local models typically come as downloadable weights and runtimes, whereas cloud models are accessed via APIs. Keep an eye out for OpenAI’s model cards and documentation for distribution options.

Can Snapdragon Laptops Handle a 20B Parameter Model?

With aggressive optimization and quantization, mid-sized models can run effectively on modern NPUs and GPUs, often through hybrid execution across CPU, GPU, and NPU. Desktops with discrete GPUs will generally respond faster, but laptops can still deliver useful on-device performance.

How Do These Models Compare to GPT-4 or GPT-4o?

Cloud models like GPT-4 and GPT-4o excel in handling complex reasoning and multimodal tasks. In contrast, the gpt-oss models emphasize local speed and privacy, trading some advanced capabilities for better on-device control.

Sources

- Windows Central – OpenAI launches two GPT models designed to run locally on Snapdragon PCs and NVIDIA RTX GPUs

- NVIDIA – TensorRT-LLM for high-performance local LLM inference on RTX GPUs

- NVIDIA – What is an RTX AI PC?

- Qualcomm – Snapdragon X Elite and Plus: building the future of AI PCs

- Qualcomm AI Hub – developer resources for on-device AI

- Microsoft – Introducing Copilot+ PCs

- Meta – Llama 3.1 announcement

- Microsoft – Phi-3 small language models

- Mistral AI – News and model updates
