Inside NVIDIA Blackwell: How Rack-Scale GPUs Revolutionize Extreme-Scale AI Inference

Artificial intelligence (AI) is moving out of experimental phases and into applications that millions of people rely on daily. Underpinning them is infrastructure that turns raw compute, data, and networking into what users actually want: fast, accurate answers. NVIDIA’s Blackwell platform is built for this challenge, turning data centers into AI powerhouses capable of high-volume reasoning.

This article breaks down concepts from NVIDIA’s original explanation of Blackwell and extreme-scale inference, translating complex engineering language into straightforward explanations while keeping the essential technical details. We check major claims against official resources and independent benchmarks, and add context on what is new, why it matters, and what comes next.

Why is Inference the Toughest Task in Computing Today?

Training gives a model its intelligence. Inference puts that model to work: generating responses and distributing requests across many processors while keeping costs low and latency minimal. As models grow from hundreds of billions to trillions of parameters and user demand soars, every generated token and every millisecond of latency affects both the user experience and the cost of each query. That is why NVIDIA regards inference as the most demanding problem in computing today.

Independent benchmarks support this view. MLPerf Inference v5.0 tightened latency constraints to reflect real-world use (roughly 20 to 50 tokens per second for responsive interaction) and added larger language models (LLMs) such as Llama 3.1 405B. Notably, NVIDIA submitted results for its first rack-scale Blackwell system, the GB200 NVL72, a significant step toward platforms designed explicitly for large-scale inference.
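A tokens-per-second target is easier to reason about as a per-token time budget, often called time per output token (TPOT). The minimal sketch below assumes a steady decode rate and ignores queueing and network delay; the 0.5 s time to first token and the 500-token answer are illustrative values, not MLPerf parameters.

```python
def tpot_ms(tokens_per_second: float) -> float:
    """Time per output token, in milliseconds, implied by a steady decode rate."""
    return 1000.0 / tokens_per_second


def response_latency_s(ttft_s: float, tokens_per_second: float, output_tokens: int) -> float:
    """End-to-end latency: time to first token plus the remaining tokens.

    Assumes the decode rate stays constant for the whole response (a simplification).
    """
    return ttft_s + (output_tokens - 1) * tpot_ms(tokens_per_second) / 1000.0


# The 20-50 tokens/s band from the text, expressed as per-token budgets.
for rate in (20, 50):
    print(f"{rate} tok/s -> {tpot_ms(rate):.0f} ms per token")

# Illustrative request: 0.5 s to first token, then a 500-token answer at 25 tok/s.
print(f"response latency: {response_latency_s(0.5, 25, 500):.2f} s")
```

At 25 tokens per second, a 500-token answer takes about 20 seconds end to end, which is why both the per-token rate and the time to first token matter.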

Scale-Out vs. Scale-Up: Why Prioritize Making a Bigger Computer

There are two approaches to enhancing performance:

- Scale-Out: Adding more nodes and linking them through a data center network.

- Scale-Up: Building a single, larger node that operates like one unified processor.

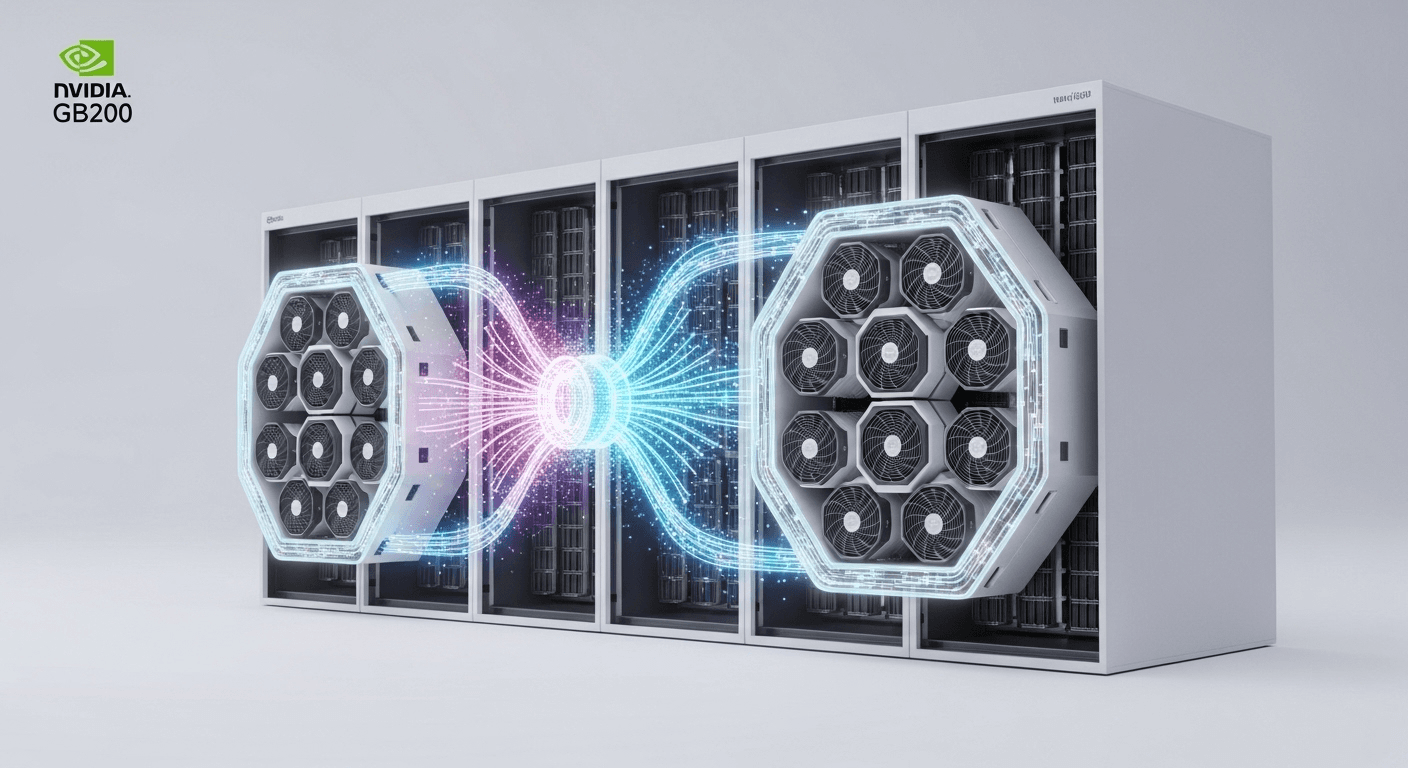

Both strategies are essential, but in the Blackwell era scale-up is the foundation for scale-out. The rationale is straightforward: when moving data between GPUs dominates processing time, the best fix is to keep that traffic on an ultra-fast local network. That is the role of the GB200 NVL72, a 72-GPU NVLink domain that behaves like one enormous GPU before any traffic touches your data center network.

Introducing the Platform: Grace Blackwell and the GB200 NVL72

Think of Blackwell not just as a chip but as a platform that unites silicon, systems, and software:

- Grace Blackwell Superchip: Comprises two Blackwell GPUs and one Grace CPU interconnected by NVLink-C2C for efficient memory sharing with minimal overhead.

- GB200 NVL72: Houses 36 Grace CPUs and 72 Blackwell GPUs in a single, liquid-cooled rack, functioning as a massive GPU for inference and training.

The NVL72 architecture uses fifth-generation NVLink and NVLink Switch to provide 130 TB/s of low-latency GPU-to-GPU bandwidth across the rack. Each GPU gets 1.8 TB/s of NVLink bandwidth, so the kind of high-speed GPU-to-GPU communication normally confined to a single server extends across the entire rack.
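The rack-level figure follows directly from the per-GPU one. A quick check, assuming (as is usual for NVLink figures) that 1.8 TB/s is the total of both directions, so each direction carries 0.9 TB/s:

```python
GPUS_PER_RACK = 72
NVLINK_TB_S_PER_GPU = 1.8  # fifth-generation NVLink, both directions combined


def aggregate_nvlink_tb_s(gpus: int, per_gpu_tb_s: float) -> float:
    """Sum of per-GPU NVLink bandwidth across the NVLink domain."""
    return gpus * per_gpu_tb_s


total = aggregate_nvlink_tb_s(GPUS_PER_RACK, NVLINK_TB_S_PER_GPU)
per_direction = NVLINK_TB_S_PER_GPU / 2  # assumes the 1.8 TB/s is bidirectional

print(f"rack aggregate: {total:.1f} TB/s")  # 129.6, quoted as ~130 TB/s
print(f"per GPU, each direction: {per_direction:.1f} TB/s")
```

The 130 TB/s headline is simply 72 × 1.8 TB/s, rounded.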

NVIDIA claims this approach significantly speeds up real-time LLM inference: up to 30 times faster inference for trillion-parameter models and up to 25 times better energy efficiency compared with H100-based systems under specified test conditions. Be aware: these figures are NVIDIA-reported and tied to specific configurations and latency targets, so performance may differ in other scenarios.

Inside the Rack: The NVLink Switch Spine

At the core of the NVL72 is a carefully designed NVLink Switch spine that connects 72 GPUs across 18 compute trays. NVIDIA cites more than 5,000 high-performance copper cables, roughly two miles of wiring, engineered to carry an aggregate 130 TB/s across the rack. The payoff is that all 72 GPUs can communicate as if they sat on a single board, which is vital when a model’s work is split across many GPUs.

This is why Blackwell’s “make a bigger computer” philosophy matters for inference: when communication within the rack is fast enough, expert routing, attention, and KV-cache traffic can stay local instead of spilling onto slower, more congested data center networks.
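To see why keeping expert routing local matters, consider how much data a single mixture-of-experts (MoE) layer moves. The back-of-envelope sketch below assumes every token’s activation is sent to its top-k experts on other GPUs and returned for the combine step; the batch size, top-k, hidden size, and FP8 activations are hypothetical values chosen for illustration, not any specific model’s configuration.

```python
def moe_all_to_all_bytes(tokens: int, top_k: int, hidden_size: int,
                         bytes_per_elem: float, round_trip: bool = True) -> float:
    """Bytes exchanged by one MoE layer's all-to-all.

    Worst case: each of a token's top_k experts sits on a different GPU than the
    token. With round_trip=True the expert outputs are sent back (combine step).
    """
    one_way = tokens * top_k * hidden_size * bytes_per_elem
    return 2 * one_way if round_trip else one_way


# Hypothetical decode step: 4,096 tokens in flight, top-8 routing,
# hidden size 7,168, FP8 activations (1 byte per element).
per_layer = moe_all_to_all_bytes(tokens=4096, top_k=8, hidden_size=7168, bytes_per_elem=1)
print(f"{per_layer / 1e9:.2f} GB per MoE layer per step")
```

That traffic repeats for every MoE layer on every decode step, so the bandwidth and latency of the link carrying it directly bound token throughput.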

Scaling from One Rack to an AI Factory

With a rack that functions as a single massive GPU, the next step is to join thousands of these racks into one cohesive system. NVIDIA’s data center networking offers two options:

- Spectrum-X Ethernet: Provides lossless, AI-optimized Ethernet fabric.

- Quantum-X800 InfiniBand: Suited for the most demanding HPC/AI scale-outs.

Each GPU in an NVL72 connects to the scale-out network through its own 400 Gb/s ConnectX-7 NIC, while BlueField-3 DPUs offload storage, security, and networking functions so GPUs can concentrate on AI work. The goal is to remove bottlenecks at every level so that AI factories scale predictably.
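The gap between the scale-up and scale-out tiers is easy to quantify. The sketch below compares the time to move a payload over NVLink and over a 400 Gb/s NIC, per direction, assuming the 1.8 TB/s NVLink figure is bidirectional (0.9 TB/s each way) and ignoring link latency and protocol overhead; the 1 GB payload is illustrative.

```python
NVLINK_BYTES_PER_S = 0.9e12  # 1.8 TB/s per GPU, halved to one direction
NIC_BYTES_PER_S = 400e9 / 8  # 400 Gb/s NIC = 50 GB/s per direction


def transfer_ms(payload_bytes: float, bytes_per_s: float) -> float:
    """Ideal transfer time at full link rate (no latency or protocol overhead)."""
    return payload_bytes / bytes_per_s * 1e3


payload = 1e9  # 1 GB, e.g. a batch of expert activations or KV-cache blocks
print(f"NVLink:   {transfer_ms(payload, NVLINK_BYTES_PER_S):.2f} ms")
print(f"400G NIC: {transfer_ms(payload, NIC_BYTES_PER_S):.2f} ms")
print(f"ratio:    {NVLINK_BYTES_PER_S / NIC_BYTES_PER_S:.0f}x")
```

An 18x per-GPU bandwidth gap (per direction) is why the design keeps the chattiest traffic inside the NVLink domain and reserves the scale-out fabric for traffic between racks.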

Software as the Key Differentiator: Dynamo, TensorRT-LLM, and NVFP4

While hardware provides the raw capability, software determines how efficiently it is used. NVIDIA’s inference-at-scale stack has three core components:

- Dynamo: Positioned as the operating system for AI factories, Dynamo orchestrates distributed inference, routing work across thousands of GPUs to maximize throughput and minimize cost. Its techniques include disaggregated serving (running the prefill and decode phases on separate GPUs), KV-cache-aware request routing, and autoscaling; NVIDIA reports substantial performance gains from these scheduling improvements.

- TensorRT-LLM and Associated Libraries: Optimized libraries and runtimes that extract maximum performance from each GPU by fusing kernels, overlapping computation with communication, and managing paged KV caches efficiently.

- NVFP4: A 4-bit floating-point format introduced with Blackwell that reduces memory and bandwidth requirements while preserving accuracy when paired with the platform’s upgraded Transformer Engine and calibration processes. It is central to Blackwell’s claimed efficiency and throughput gains.

Together, these tools target the two main drivers of inference cost: the overhead of moving data between experts and model partitions, and the compute required for attention and decoding.
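To make the NVFP4 idea concrete: values are stored as 4-bit floats (E2M1: one sign, two exponent, and one mantissa bit), and every block of 16 values shares a scale factor, so each block uses the full 4-bit range for its own magnitude. The pure-Python sketch below simulates that quantize/dequantize round trip. It is a simplification, not NVIDIA’s implementation: it keeps the block scale in full precision, whereas NVFP4 stores it as FP8 (E4M3) alongside a per-tensor FP32 scale, and it omits calibration.

```python
# Magnitudes representable by a 4-bit E2M1 float.
E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK_SIZE = 16  # one shared scale per 16 values


def quantize_block(values):
    """Return (scale, codes): codes are E2M1 values, value ~= scale * code."""
    amax = max(abs(v) for v in values)
    # Map the block's largest magnitude onto the largest FP4 value (6.0).
    # Simplification: the scale stays in full precision, not FP8.
    scale = amax / E2M1_GRID[-1] if amax > 0 else 1.0
    codes = []
    for v in values:
        # Round |v| / scale to the nearest representable magnitude, keep the sign.
        mag = min(E2M1_GRID, key=lambda g: abs(abs(v) / scale - g))
        codes.append(-mag if v < 0 else mag)
    return scale, codes


def fake_quantize(values):
    """Quantize and immediately dequantize, block by block."""
    out = []
    for start in range(0, len(values), BLOCK_SIZE):
        scale, codes = quantize_block(values[start:start + BLOCK_SIZE])
        out.extend(scale * c for c in codes)
    return out


weights = [0.1 * i - 0.8 for i in range(16)]  # illustrative values in [-0.8, 0.7]
restored = fake_quantize(weights)
worst = max(abs(a - b) for a, b in zip(weights, restored))
print(f"max abs error: {worst:.4f} (block scale {0.8 / 6:.4f})")
```

Storage works out to 4 bits per value plus 8 bits of scale per 16 values, about 4.5 bits per value versus 8 for FP8. The per-block scale is what keeps rounding error proportional to each block’s own magnitude rather than to the largest value in the whole tensor.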

Insights from Independent Benchmarks

In MLPerf Inference v5.0, NVIDIA submitted results for the GB200 NVL72 that set records across LLM and other tasks, and 15 partners submitted their own results on the NVIDIA platform. Because MLPerf keeps evolving to reflect real user expectations, and v5.0 added larger LLMs and stricter latency limits, rack-scale results carry real weight: they measure not just raw FLOPS but how quickly a system generates tokens under load.

Recent media coverage has highlighted generational gains for Blackwell-powered systems and for the newer Blackwell Ultra GB300 platform, which further improves inference throughput and efficiency, particularly for complex reasoning models like DeepSeek-R1. Still, check vendor claims against published MLPerf results and partner submissions before drawing comparisons.

A Concrete Example: DeepSeek-R1 and Test-Time Scaling

Reasoning models such as DeepSeek-R1, a 671-billion-parameter mixture-of-experts (MoE) model, show why routing and communication efficiency matter. NVIDIA demonstrated DeepSeek-R1 generating up to 3,872 tokens per second on a single 8x H200 server using FP8 and NVLink/NVLink Switch, a result that depends on keeping expert communication on a high-bandwidth, low-latency network. Blackwell adds FP4 (NVFP4) support and a 72-GPU NVLink domain designed for inference-first scaling, which should help test-time scaling: spending more compute on each answer to improve its accuracy.
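Some quick arithmetic shows why lower precision matters for a model this size. The sketch below estimates weight memory for 671 billion parameters at FP8 and at an NVFP4-style 4.5 bits per parameter (4-bit values plus one 8-bit scale per 16 values), and spreads the quoted 3,872 tokens per second across the server’s eight GPUs. It assumes every weight is stored at the same precision and ignores the KV cache, activations, and the per-tensor scale, so treat the memory figures as rough lower bounds.

```python
TOTAL_PARAMS = 671e9   # DeepSeek-R1 parameter count, from the text
HGX_H200_TOK_S = 3872  # NVIDIA-reported throughput on one 8x H200 server
GPUS_PER_SERVER = 8


def weight_gb(params: float, bits_per_param: float) -> float:
    """Weight storage in GB (1e9 bytes), assuming uniform precision."""
    return params * bits_per_param / 8 / 1e9


fp8_gb = weight_gb(TOTAL_PARAMS, 8)
# Assumed NVFP4 layout: 4-bit values plus one 8-bit scale per 16 values.
nvfp4_gb = weight_gb(TOTAL_PARAMS, 4 + 8 / 16)
per_gpu = HGX_H200_TOK_S / GPUS_PER_SERVER

print(f"FP8 weights:   {fp8_gb:.0f} GB")
print(f"NVFP4 weights: {nvfp4_gb:.0f} GB")
print(f"throughput per GPU: {per_gpu:.0f} tok/s")
```

Roughly halving the bits per weight roughly halves the memory and bandwidth needed to stream weights during decode, leaving more room for KV cache on each GPU.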

How Blackwell Alters the Cost Structure

Why is Blackwell often referred to as an AI factory engine rather than merely a chip? Because the cost of tokens generated and the total cost of ownership (TCO) depend on the entire stack:

- Compute Density and Energy Efficiency: Achieved through liquid-cooled, rack-scale designs.

- Intra-rack Bandwidth: Ensures expert routing and attention remain localized.

- Distributed Scheduling: Ensures GPUs are utilized efficiently by directing work to the most suitable resources.

- Low-Precision Formats and Optimized Kernels: Drive up token generation per watt.
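These factors meet in a single number: cost per million tokens. The minimal sketch below combines energy and amortized hardware cost; the 120 kW rack power, electricity price, hourly hardware cost, and throughput are hypothetical inputs for illustration, not NVIDIA figures or measurements.

```python
def cost_per_million_tokens(tokens_per_s: float, power_kw: float,
                            usd_per_kwh: float, hardware_usd_per_hour: float) -> float:
    """Energy plus amortized hardware cost to generate one million tokens."""
    hours = 1e6 / tokens_per_s / 3600.0
    energy_usd = power_kw * hours * usd_per_kwh
    return energy_usd + hardware_usd_per_hour * hours


def tokens_per_joule(tokens_per_s: float, power_kw: float) -> float:
    """Tokens per watt-second, i.e. tokens per joule."""
    return tokens_per_s / (power_kw * 1000.0)


# Hypothetical rack: 120 kW, $0.08/kWh, $50/hour amortized hardware.
base = cost_per_million_tokens(100_000, 120, 0.08, 50)
# Same rack and power, but software raises throughput 2x.
faster = cost_per_million_tokens(200_000, 120, 0.08, 50)
print(f"${base:.4f} vs ${faster:.4f} per million tokens")
print(f"{tokens_per_joule(100_000, 120):.2f} vs {tokens_per_joule(200_000, 120):.2f} tokens/J")
```

Because power and hardware costs accrue per hour, doubling throughput at the same power halves the cost per token; that is the lever that compute density, bandwidth, scheduling, and low precision all pull on.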

NVIDIA’s analysis suggests that, from Hopper to Blackwell, full-stack improvements can substantially increase AI reasoning output within the same energy budget, with further gains expected as Dynamo and NVFP4 optimizations mature. Independent validation is still needed, but the direction is clear: more output for the same or less power, which fits hyperscalers’ expansion plans.

Building Blocks and Specifications at a Glance

If you’re assessing Blackwell for production inference, consider the following key highlights:

- NVL72 Scale-Up Domain: 72 GPUs across 18 compute trays that behave like one large GPU, with 130 TB/s of aggregate NVLink Switch bandwidth and 1.8 TB/s per GPU.

- Grace Blackwell Superchip: Two Blackwell GPUs and one Grace CPU interconnected via NVLink-C2C for efficient memory sharing and rapid CPU-GPU communication.

- Memory Capacity: Up to 13.4 TB of HBM3e in an NVL72 rack, designed to handle memory-bound LLM inference and accommodate larger KV caches.

- Networking for Scale-Out: Each GPU supports 400 Gbps connectivity, utilizing either Spectrum-X Ethernet or Quantum-X800 InfiniBand at the cluster level, with BlueField-3 DPUs for infrastructure offload.

- Software Suite: Dynamo for orchestration and autoscaling, TensorRT-LLM for optimized inference, and NVFP4 for low precision that preserves accuracy.
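Memory capacity turns into concurrent users through the KV cache, which stores keys and values for every layer and every token in flight. A minimal sizing sketch follows, assuming standard grouped-query attention; the 80 layers, 8 KV heads, head dimension of 128, FP8 cache, 32K-token context, and 4 TB cache budget are all hypothetical, and the estimate ignores paging overhead and any cache compression a specific model may use.

```python
def kv_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                       bytes_per_elem: float) -> float:
    """KV-cache bytes for one token: keys and values (factor 2) in every layer."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem


def max_concurrent_sequences(budget_bytes: float, seq_len: int,
                             per_token_bytes: float) -> int:
    """How many full-length sequences fit in a KV-cache memory budget."""
    return int(budget_bytes // (seq_len * per_token_bytes))


# Hypothetical model shape and budget, for illustration only.
per_token = kv_bytes_per_token(layers=80, kv_heads=8, head_dim=128, bytes_per_elem=1)
per_sequence_gb = per_token * 32_768 / 1e9
fits = max_concurrent_sequences(4e12, 32_768, per_token)

print(f"{per_token / 1024:.0f} KiB per token, {per_sequence_gb:.2f} GB per 32K-token sequence")
print(f"{fits} concurrent 32K-token sequences in a 4 TB budget")
```

Doubling the context length halves the number of concurrent sequences, which is why KV-cache growth belongs in capacity planning alongside model size.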

Transitioning from Plan to Plant: Creating AI Factories with Digital Twins

As AI factories grow toward gigawatt scale, planning the physical layout matters as much as choosing chips. NVIDIA’s Omniverse Blueprint combines power, cooling, networking, and compute into a single OpenUSD-based simulation environment, developed with partners such as Schneider Electric, Vertiv, and Cadence. The aim is to catch problems early, optimize layouts, and validate performance before construction begins.

What’s Next: Blackwell Ultra and Beyond

At GTC 2025, NVIDIA previewed Blackwell Ultra as the next step for both training and inference, especially for reasoning and agentic workflows. Reports indicated that systems would launch in the second half of 2025, with further architectural advances planned for 2026 and beyond. Organizations doing capacity planning should expect continued improvements in attention performance, memory bandwidth, and software that makes cross-rack operation smoother.

Practical Considerations: Questions to Address Before Purchase

- What are your tokens-per-second and latency targets at P50 and P99? Use MLPerf latency constraints as a starting reference.

- How large are your models and KV caches today, and how fast will they grow? Size memory and NVLink domains accordingly.

- Do your workloads gain from test-time scaling? If so, prioritize intra-rack bandwidth and software that can allocate additional compute resources as needed.

- Will you serve mixture-of-experts (MoE) models? If so, plan for high-bandwidth, low-latency expert communication and consider a rack-scale approach first.

- What is your transition plan from a single rack to an AI factory? Early mapping of network fabrics (Spectrum-X or Quantum-X800), DPUs, and orchestration is essential.
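Once you have targets, check them against measured latency distributions rather than averages. The sketch below computes nearest-rank percentiles from a list of per-token latencies; the sample data and the 40 ms and 100 ms targets are hypothetical.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_targets(samples, p50_target_ms, p99_target_ms):
    """True when both the median and the tail stay within their budgets."""
    return (percentile(samples, 50) <= p50_target_ms
            and percentile(samples, 99) <= p99_target_ms)


# Hypothetical per-token latencies (ms): mostly fast, with a few slow outliers.
tpot_samples = [30.0] * 97 + [45.0, 80.0, 120.0]
print(percentile(tpot_samples, 50), percentile(tpot_samples, 99))
print(meets_targets(tpot_samples, p50_target_ms=40, p99_target_ms=100))
```

The mean of these samples is about 32 ms, which hides the 80 ms tail: a 50 ms P99 target would fail even though the average looks comfortable.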

Key Takeaways

- Inference now dominates the design of AI infrastructure, and Blackwell was engineered specifically for this purpose.

- Scale-up has become the new foundation for scale-out: NVL72 creates a unified 72-GPU domain before your traffic reaches the data center network.

- The NVLink Switch spine provides 130 TB/s of intra-rack bandwidth, a critical element for MoE routing, attention, and KV-cache scaling.

- Software matters as much as hardware: Dynamo, TensorRT-LLM, and NVFP4 turn hardware capability into tokens per second while lowering the cost per token.

- Independent benchmarks like MLPerf v5.0 validate advancements and set more stringent latency standards that align with real-world expectations.

FAQs

1) What differentiates GB200 NVL72 from a traditional GPU server?

A conventional 8-GPU server links its GPUs with NVLink inside the box, but any model spread across more than eight GPUs must communicate over the data center network. The GB200 NVL72 instead creates a 72-GPU NVLink domain within a single rack, with up to 130 TB/s of low-latency bandwidth, so the entire rack operates like one large GPU. This sharply reduces communication overhead for large LLMs and MoE models.

2) Is Blackwell exclusively for inference, or does it also aid in training?

Both. NVIDIA cites up to 4x faster training than H100 for large models at scale, but the platform and its software are especially optimized for real-time LLM inference and AI reasoning. Always check configuration details and latency assumptions when comparing performance figures.

3) How does Dynamo enhance performance?

Dynamo treats a large cluster as a single pool of compute and memory. It splits inference into smaller pieces of work, for example by running the prefill and decode phases on different GPUs, and routes each piece to the best-suited GPUs in real time, raising utilization and throughput without raising costs. Think of it as autoscaling combined with topology-aware scheduling, designed specifically for AI factories.
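One ingredient of this kind of scheduling is KV-cache-aware routing: sending a request to a GPU that already holds the cache for its prompt prefix, unless that GPU is too busy. The sketch below illustrates the idea only; the `Worker` class, block size, and scoring rule are hypothetical and are not Dynamo’s API or algorithm.

```python
from dataclasses import dataclass, field

BLOCK_TOKENS = 4  # hypothetical cache-block size, kept tiny for readability


@dataclass
class Worker:
    """Hypothetical stand-in for one GPU worker and the cache blocks it holds."""
    name: str
    active_requests: int = 0
    cached_blocks: set = field(default_factory=set)


def prefix_block_ids(tokens):
    """One id per full block, each covering the entire prefix up to that block."""
    return [hash(tuple(tokens[:end]))
            for end in range(BLOCK_TOKENS, len(tokens) + 1, BLOCK_TOKENS)]


def cached_prefix_len(worker, block_ids):
    """Number of leading blocks this worker already holds."""
    count = 0
    for block_id in block_ids:
        if block_id not in worker.cached_blocks:
            break
        count += 1
    return count


def route(workers, tokens, load_penalty=1.0):
    """Pick the worker with the best cache reuse minus a penalty for its load."""
    block_ids = prefix_block_ids(tokens)

    def score(w):
        return cached_prefix_len(w, block_ids) - load_penalty * w.active_requests

    best = max(workers, key=score)      # ties go to the first worker listed
    best.active_requests += 1           # a real router would decrement on completion
    best.cached_blocks.update(block_ids)
    return best


workers = [Worker("gpu-a"), Worker("gpu-b")]
system_prompt = list(range(8))  # shared 8-token prefix
print(route(workers, system_prompt + [100, 101, 102, 103]).name)  # gpu-a (tie, first wins)
print(route(workers, system_prompt + [200, 201, 202, 203]).name)  # gpu-a (2 cached blocks)
print(route(workers, list(range(500, 508))).name)                 # gpu-b (no reuse, less load)
```

The load penalty is the design knob: set it too low and one GPU hoards all traffic that shares a popular prefix; set it too high and the router ignores reusable cache and recomputes prefills.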

4) What role do DPUs serve in an AI factory?

BlueField-3 DPUs offload essential tasks such as networking, storage, security, and telemetry from GPUs and CPUs, allowing GPUs to focus on executing models. This offloading aids in maintaining predictable latencies as workloads scale.

5) How credible are vendor performance assertions?

Use MLPerf as the baseline and read partner submissions alongside vendor data. Pay attention to the fine print: model size, latency targets, precision, and batch size all matter. For reasoning-heavy workloads, look for results on MoE and long-context LLMs.

Conclusion

The success of AI products hinges on how fast, accurate, and affordable their responses are. Blackwell’s central idea is to re-engineer computing around that goal: scale up first with a rack that operates as one colossal GPU, then scale out across a fabric engineered for AI. Add software that unifies thousands of GPUs into a single system, and you have an AI factory, infrastructure built to produce intelligence at industrial scale.

Whether you are an AI strategist planning your capacity, a researcher pushing the boundaries of reasoning, or a developer launching a production-level LLM, the path is clear: inference is the area to focus on, and systems like Blackwell are reshaping the way we approach it.

Thank You for Reading this Blog and See You Soon! 🙏 👋
