Inside GPT: Simplified Architectures for Complex Tasks

Understanding the Foundations of GPT

Generative Pre-trained Transformers (GPT) represent a groundbreaking advancement in the field of artificial intelligence (AI). Developed by OpenAI, these models use deep learning to generate human-like text from the input they are given. The evolution from the original GPT to its latest iteration exemplifies the rapid progress in AI capabilities, primarily in natural language understanding and generation.

The Architecture Behind GPT

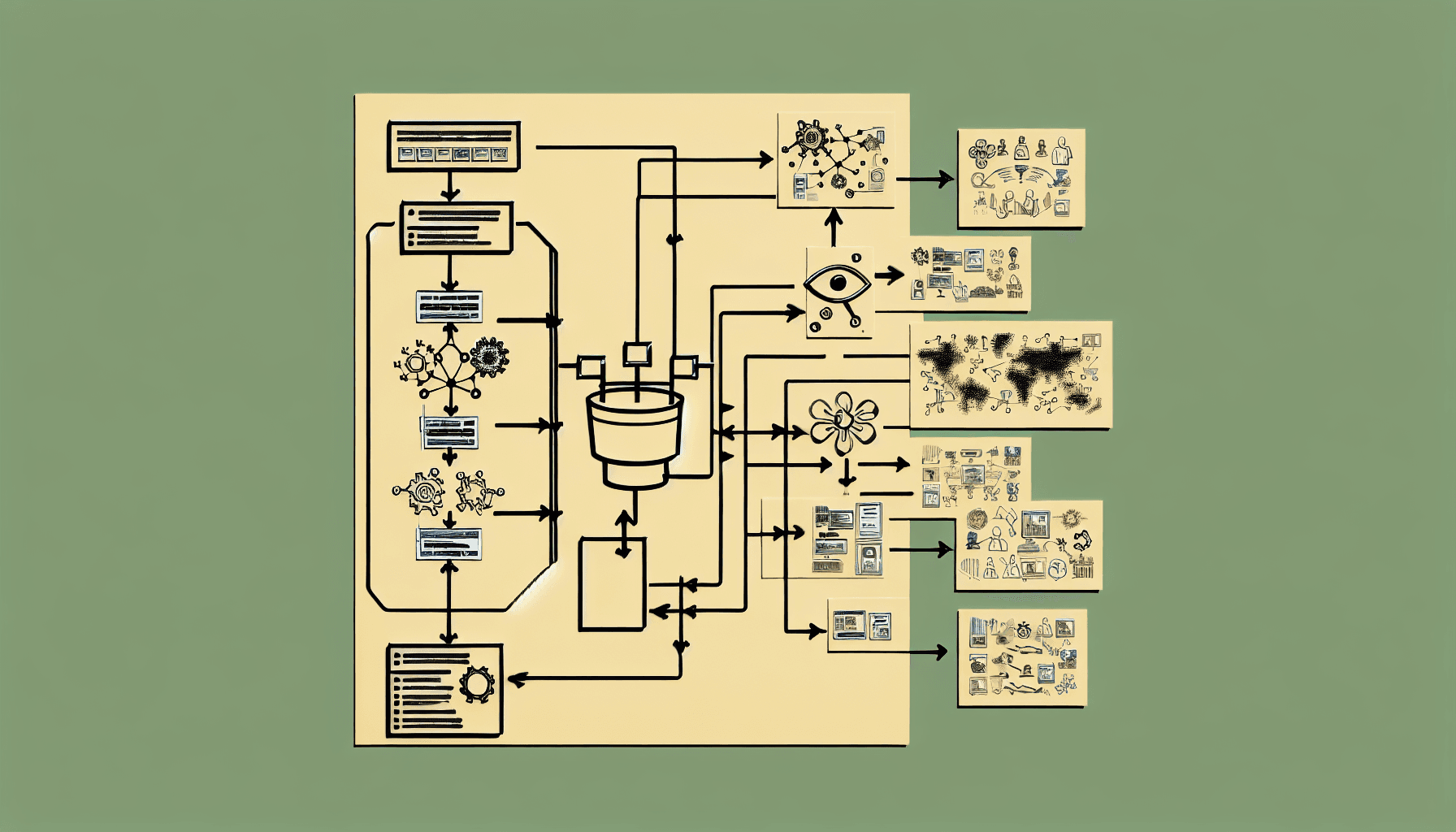

At its core, GPT is based on the transformer architecture, which marks a departure from earlier recurrent models such as RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks). The transformer uses a mechanism called self-attention, which lets every token in a sequence weigh every other token directly rather than passing information along step by step. Because training no longer has to process tokens one at a time, it parallelizes well on modern hardware and handles long-range dependencies in text more effectively. GPT still generates text one token at a time, with each new token attending only to the tokens before it.
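To make the attention idea concrete, here is a minimal sketch of scaled dot-product self-attention with a causal mask, written in plain Python so it runs without extra libraries. It is an illustration under simplifying assumptions, not how any production GPT is implemented: real models apply learned query, key, and value projections and use many attention heads, whereas this toy reuses the raw token vectors for all three roles. The names `softmax` and `causal_self_attention` are made up for this example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where token i only sees tokens 0..i.

    Each argument is a list of equal-length vectors, one per token.
    Returns (outputs, weights); weights[i][j] is how strongly token i
    attends to token j.
    """
    d_k = len(keys[0])
    n_tokens = len(queries)
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: score only the current and earlier positions.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        w = softmax(scores)
        # Output is the attention-weighted average of the visible values.
        out = [
            sum(w[j] * values[j][c] for j in range(i + 1))
            for c in range(len(values[0]))
        ]
        # Pad with zeros so every row has one weight per token.
        weights.append(w + [0.0] * (n_tokens - i - 1))
        outputs.append(out)
    return outputs, weights


# Toy input: three tokens with two-dimensional embeddings (assumed values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)

for i, row in enumerate(weights):
    print(f"token {i} attends with weights {[round(w, 3) for w in row]}")
```

Each row of `weights` sums to 1, and the zeros to the right of the diagonal show the causal mask at work: a token never attends to positions that come after it.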

Key Features That Set GPT Apart

GPT models stand out for their ability to generalize from limited data, often just a handful of examples supplied in the prompt, to adapt to a wide range of tasks without task-specific training, and to generate coherent, contextually relevant responses. This adaptability makes them particularly valuable for applications ranging from automated customer service to content creation and beyond.
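One common way this adaptation without task-specific training shows up in practice is few-shot prompting, where a few worked examples are placed in the prompt and the model is asked to continue the pattern. The sketch below only assembles such a prompt as a string; it does not call any model. The helper name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sample reviews are all assumptions chosen for illustration.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a few-shot prompt from a task description and examples.

    examples is a list of (input_text, expected_output) pairs.
    The prompt ends with an open "Output:" for the model to complete.
    """
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical sentiment task with two labeled examples.
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("Great battery life.", "positive"),
        ("Stopped working in a week.", "negative"),
    ],
    "The screen is sharp and bright.",
)
print(prompt)
```

Changing the task description and the examples is enough to point the same model at a different job, which is the sense in which no task-specific training is needed.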

Applications of GPT in Various Industries

The versatility of GPT models lends itself to numerous fields. In healthcare, for instance, GPT can assist in patient management by synthesizing and interpreting patient data to support personalized care recommendations. In the financial sector, these models enhance customer service by handling inquiries and helping process transactions quickly and accurately.

The Impact of GPT on Workflow Automation

GPT’s ability to streamline complex tasks has had a significant impact on workflow automation. By integrating GPT with existing software tools, businesses can automate administrative tasks such as scheduling, email management, and customer relationship management, leading to increased productivity and reduced operational costs.

Challenges and Considerations in GPT Deployment

Despite its impressive capabilities, deploying GPT models comes with challenges. These include handling the ethical implications of AI-generated content, managing the computational resources required, and ensuring the security of the models against potential misuse. Companies must address these issues to fully leverage GPT’s potential while maintaining ethical standards and security.

Future Developments in GPT Technology

The ongoing development of GPT models promises even more sophisticated AI tools in the future. Researchers are continuously working on improving the models’ efficiency, reducing their environmental impact, and enhancing their ability to understand and generate more nuanced human-like text. These advancements are set to open up new frontiers in AI applications.

Conclusion

The GPT architecture pairs a simple design with profound capabilities, and it represents a significant leap forward in AI technology. As these models continue to evolve, their influence across various sectors is expected to grow, driving further innovations and transforming how businesses and services operate in an increasingly digital world.

Thank You for Reading this Blog and See You Soon! 🙏 👋
