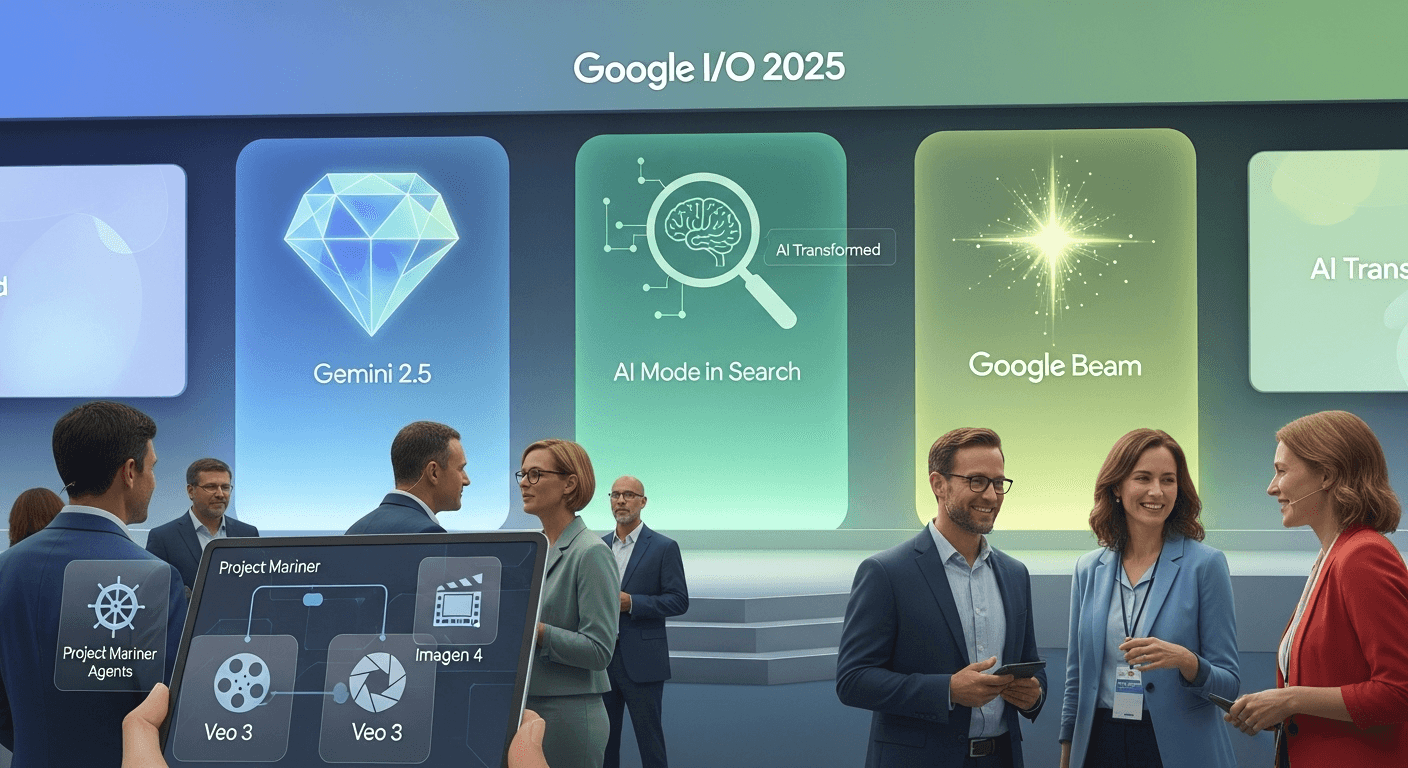

Inside Google I/O 2025: How Gemini, AI Mode, and Google Beam Transform Research into Real-World AI

Introduction

What happens when years of artificial intelligence (AI) research move out of the lab and into everyday applications? At Google I/O 2025, Sundar Pichai framed this moment as a significant shift: research becoming reality. The keynote showed Google’s Gemini models moving from benchmarks to consumer products, from demos to everyday tools, and from single features to agentic systems that can act on your behalf.

This guide summarizes the major announcements, adds context from credible sources, and highlights what matters for curious readers and professionals alike.

Rapid Model Advancements and the Power Behind Them

Google reports rapid progress across the Gemini family. As of the keynote on May 20, 2025, Gemini 2.5 Pro topped the LMArena community leaderboard and performed strongly across multiple evaluation categories. Treat any leaderboard position as a snapshot, though: Gemini 2.5 Pro led several arenas through May and June, but later updates showed standings shifting as new models arrived.

At the core of these advancements is Ironwood, Google’s seventh-generation TPU. A full 9,216-chip Ironwood pod delivers 42.5 exaflops of compute, and Google says Ironwood offers about 10 times the performance of its predecessor, enabling faster and more cost-effective model serving. Independent analyses of its scale and architecture have supported these claims.
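Dividing the pod-level figure by the chip count gives the implied peak per chip. This is simple arithmetic on Google’s reported numbers, not an independent measurement, and peak figures like this describe theoretical throughput rather than sustained performance on real workloads:

```latex
\frac{42.5 \times 10^{18}\ \text{FLOP/s}}{9{,}216\ \text{chips}}
  \approx 4.61 \times 10^{15}\ \text{FLOP/s per chip}
  \approx 4.6\ \text{petaflops per chip}
```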

The adoption statistics are equally striking. Google reported that the monthly tokens it processes across its products and APIs grew from 9.7 trillion a year earlier to over 480 trillion. More than 7 million developers are building with Gemini, and the Gemini app has over 400 million monthly active users. External reporting during I/O week corroborated these milestones.
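For scale, the reported token volumes imply roughly a 50-fold increase in a single year. Because 480 trillion is a lower bound ("over 480 trillion"), the true multiple is at least this large:

```latex
\frac{480 \times 10^{12}\ \text{tokens/month}}{9.7 \times 10^{12}\ \text{tokens/month}} \approx 49.5
```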

What does this mean for you? Expect better models, quicker responses, and a wider range of features across Google products, along with lower serving costs for developers if Google’s efficiency claims hold up.

Enhanced Human Connection: Google Beam

A few years ago, Google unveiled Project Starline, a groundbreaking demo that made video chats feel as if you were sitting across the table from someone. That concept has now evolved into Google Beam, an AI-first 3D video communication platform. Beam uses an array of six cameras and an AI volumetric video model to render a realistic 3D representation of the person you’re speaking with on a light-field display. HP is building the first Beam hardware for early adopters later in 2025; it is reportedly priced around $24,999 and works with Google Meet and Zoom.

But Beam is more than lifelike visuals. Google also presented near real-time speech translation in Google Meet that maintains the speaker’s voice, tone, and expressions. This feature, currently in beta for AI Pro and AI Ultra subscribers, initially supports English and Spanish, with plans for additional languages. Early users described an overdub that mimicked the speaker’s voice remarkably well, despite some initial hiccups.

Why it matters: If your team or family is spread across locations or speaks different languages, Beam’s 3D presence and Meet’s speech translation can make remote, cross-language conversations feel more natural than flat video with subtitles.

From Project Astra to Gemini Live and Search Live

Project Astra set out to build a universal AI assistant that understands the world around you. Many of its capabilities now live in Gemini Live: you can share your camera or phone screen and talk with Gemini about what it sees, whether you are troubleshooting a device or planning a route. Camera and screen sharing rolled out first on Android and began reaching iOS following I/O. Google and various outlets tracked these developments throughout spring and summer 2025.

The same idea appears in Search as Search Live, which enables real-time voice-and-camera conversations directly in the Google app in the U.S. Tap Live, point your camera, and talk with Search about what you see; you can ask follow-up questions and get links to learn more. Google began broad U.S. availability in late September 2025.

Why it matters: Because the assistant can draw on context from your surroundings and your screen, its help is more grounded and practical.

The Arrival of Agents: Project Mariner and Agent Mode

A significant conceptual shift this year is the rise of agents: systems that combine model intelligence with tools and the ability to take actions under your direction.

- Project Mariner is a research prototype that uses a browser like a human: reading webpage content, planning steps, clicking buttons, scrolling, filling in forms, and completing tasks end to end. Google has added multitasking (up to 10 tasks at once) and a method called ‘teach and repeat,’ in which you demonstrate a workflow once and the agent can replicate it in similar situations later. Limited access began in December 2024, access broadened in 2025, and Mariner’s capabilities are coming to the Gemini API.

- Agent Mode in the Gemini app will let subscribers delegate tasks such as apartment hunting. In demos, Agent Mode adjusted filters on sites like Zillow, used the Model Context Protocol (MCP) to reach external tools, and coordinated actions such as scheduling tours. Initial testing is underway, with wider access planned.
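Google hasn’t published how Mariner’s teach and repeat works internally. As a toy illustration of the general idea only (record a demonstration once, generalize the concrete values into placeholders, then replay with new values), here is a minimal Python sketch. `Step`, `generalize`, `replay`, and the example.com listing site are all hypothetical, and nothing here drives a real browser:

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class Step:
    """One browser action captured during a demonstration (hypothetical model)."""
    action: str      # e.g. "open", "type", "click"
    target: str      # URL or CSS selector observed during the demo
    value: str = ""  # text entered during the demo, if any


def generalize(demo: list[Step], examples: dict[str, str]) -> list[Step]:
    """Turn a concrete demo into a template by swapping example values for ${name} placeholders."""
    template = []
    for step in demo:
        target, value = step.target, step.value
        for name, example in examples.items():
            placeholder = f"${{{name}}}"
            target = target.replace(example, placeholder)
            value = value.replace(example, placeholder)
        template.append(Step(step.action, target, value))
    return template


def replay(template: list[Step], params: dict[str, str]) -> list[Step]:
    """Fill the placeholders with values for a new, similar task."""
    # safe_substitute leaves unrelated "$" characters (e.g. in selectors) untouched.
    return [
        Step(s.action,
             Template(s.target).safe_substitute(params),
             Template(s.value).safe_substitute(params))
        for s in template
    ]


# A recorded demo of a hypothetical listings search, plus the values to generalize.
demo = [
    Step("open", "https://example.com/listings?city=Austin"),
    Step("type", "#max-price", "2500"),
    Step("click", "#search"),
]
template = generalize(demo, {"city": "Austin", "budget": "2500"})
new_run = replay(template, {"city": "Denver", "budget": "3000"})

for step in new_run:
    print(step)
```

A real agent would also need to cope with pages that change between runs, which is where model-based perception matters far more than simple string substitution.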

To improve agent interoperability, Google introduced the Agent2Agent (A2A) protocol, which lets agents from different vendors communicate and collaborate. Google is also adding MCP compatibility to the Gemini API and SDK, connecting agents to a wide range of tools and services. A2A has since moved under open governance at the Linux Foundation, and a proposed Agent Payments Protocol (AP2) aims to standardize how agents authorize and complete transactions.
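Both MCP and A2A are built on JSON-RPC 2.0, so at the wire level an agent mostly exchanges small JSON envelopes. The sketch below builds one MCP `tools/call` request and one A2A `message/send` request as we understand the specs at the time of writing; A2A method and field names have changed between versions, so check the current specification before relying on them. The tool name `search_listings`, its arguments, and the task text are hypothetical, and no network calls are made:

```python
import itertools
import json
import uuid

_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: dict) -> dict:
    """Wrap a call in a JSON-RPC 2.0 envelope; MCP and A2A both build on JSON-RPC 2.0."""
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params}


def mcp_tool_call(tool: str, arguments: dict) -> dict:
    """MCP: ask a server to run a tool it previously advertised via tools/list."""
    return jsonrpc_request("tools/call", {"name": tool, "arguments": arguments})


def a2a_send_text(text: str) -> dict:
    """A2A: send a user text message to a remote agent.
    Method and field names follow recent spec versions and have changed before."""
    return jsonrpc_request("message/send", {
        "message": {
            "role": "user",
            "messageId": str(uuid.uuid4()),
            "parts": [{"kind": "text", "text": text}],
        }
    })


# Hypothetical tool name, arguments, and task, for illustration only.
tool_request = mcp_tool_call("search_listings", {"city": "Austin", "max_price": 2500})
agent_request = a2a_send_text("Schedule a tour for listing 42 on Saturday morning.")

print(json.dumps(tool_request, indent=2))
print(json.dumps(agent_request, indent=2))
```

The division of labor is the point: MCP connects one agent to its tools, while A2A connects one agent to another agent, which is why Google supports both.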

Why it matters: Agents can save significant time on complex tasks, and open protocols reduce vendor lock-in by facilitating collaboration between different agents.

AI Mode Transforms Search

AI Overviews, which summarize results at the top of Google Search, now reach more than 1.5 billion users a month and expanded globally earlier in 2025. At I/O, Google introduced AI Mode, a more conversational and capable Search experience. AI Mode uses a query fan-out technique: it breaks a complex query into subtopics, runs searches for them in parallel, and synthesizes an answer with links back to relevant sources. It is rolling out to users in the U.S., with continuing upgrades such as Gemini 2.5 Pro for subscribers and new deep research features.
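Google hasn’t published AI Mode’s internals, but the fan-out pattern itself is easy to sketch. In this minimal Python example, `search` is a hypothetical stand-in that returns fake results instead of querying an index, and `fan_out` uses a naive "query plus facet" split where a production system would likely use a model to pick subtopics. What the sketch does show faithfully is the shape: decompose, search concurrently, then synthesize with numbered citations:

```python
import asyncio


async def search(subquery: str) -> dict:
    """Hypothetical search backend; a real system would query an index or API here."""
    await asyncio.sleep(0.01)  # simulate network latency
    slug = subquery.lower().replace(" ", "-")
    return {"snippet": f"Top result for '{subquery}'", "url": f"https://example.com/{slug}"}


def fan_out(query: str, facets: list[str]) -> list[str]:
    """Naive decomposition: combine the query with each facet.
    A production system would likely use a model to choose the subtopics."""
    return [f"{query} {facet}" for facet in facets]


async def answer(query: str, facets: list[str]) -> str:
    subqueries = fan_out(query, facets)
    # Issue every sub-search concurrently; gather returns results in input order.
    results = await asyncio.gather(*(search(q) for q in subqueries))
    # Synthesize: one line per subtopic, each tagged with a numbered citation.
    findings = [f"- {r['snippet']} [{i}]" for i, r in enumerate(results, start=1)]
    sources = [f"[{i}] {r['url']}" for i, r in enumerate(results, start=1)]
    return "\n".join(findings + ["", "Sources:"] + sources)


report = asyncio.run(answer("commuter e-bike", ["battery range", "hill climbing", "maintenance cost"]))
print(report)
```

Running the sub-searches concurrently means total latency tracks the slowest single search rather than the sum of all of them, which is what makes fan-out practical for interactive use.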

Recent updates to Search have also added live, voice-first experiences and agent-driven features like AI-powered business calls that can contact local merchants on your behalf to check availability or pricing.

Why it matters: For those needing nuanced, multi-part answers, AI Mode aims to handle the heavy lifting, citing useful sources so you can verify and explore further.

Gemini 2.5: Speed, Reasoning, and Deep Think

Google continues to split the Gemini 2.5 lineup between models tuned for speed and cost and models tuned for in-depth reasoning:

- Gemini 2.5 Flash is optimized for fast, affordable responses, with improvements across reasoning, multimodality, code, and long context. It is popular with developers who need low-latency interactions at scale.

- Gemini 2.5 Pro is the advanced option. Google introduced Deep Think, an enhanced reasoning mode that considers multiple hypotheses before producing an answer. Deep Think launched with trusted testers and began rolling out to AI Ultra subscribers in August 2025. Google highlights strong results on math and coding benchmarks, although scores vary with evaluation methodology.

Developers also get thought summaries in the Gemini API and Vertex AI to help debug complex agent behavior, along with “thinking budgets” for trading off cost, latency, and quality.
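To make thinking budgets and thought summaries concrete, here is a minimal sketch of a Gemini API REST request body, based on our reading of the public `generateContent` docs (`thinkingConfig` with `thinkingBudget` and `includeThoughts`). It only builds the JSON and parses a canned response offline; verify field names and each model’s allowed budget range against current documentation before use. The prompt and sample response text are made up:

```python
import json

# REST endpoint shape for the Gemini API (verify against current docs).
MODEL = "gemini-2.5-flash"
URL = f"https://generativelanguage.googleapis.com/v1beta/models/{MODEL}:generateContent"


def build_request(prompt: str, thinking_budget: int, include_thoughts: bool = True) -> dict:
    """Request body that caps how many tokens the model may spend thinking."""
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {
                "thinkingBudget": thinking_budget,    # token cap for internal reasoning
                "includeThoughts": include_thoughts,  # ask for thought summaries
            }
        },
    }


def split_thoughts(response: dict) -> tuple[list[str], list[str]]:
    """Separate thought-summary parts (flagged "thought": true) from answer parts."""
    thoughts, final = [], []
    for part in response["candidates"][0]["content"]["parts"]:
        (thoughts if part.get("thought") else final).append(part.get("text", ""))
    return thoughts, final


body = build_request("Plan a 3-step migration from REST to gRPC.", thinking_budget=1024)
print(json.dumps(body, indent=2))

# A canned response in the shape the REST docs describe, so the parser runs offline.
sample = {"candidates": [{"content": {"parts": [
    {"text": "Considering dependencies between services...", "thought": True},
    {"text": "1. Define protobufs. 2. Dual-serve. 3. Cut over."},
]}}]}
thoughts, final_answer = split_thoughts(sample)
```

To send it for real, POST `body` as JSON to `URL` with your API key (the docs show an `x-goog-api-key` header). A lower budget generally trades some answer quality for lower latency and cost.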

Why it matters: You can match the model to the workload (Flash for high-volume, cost-sensitive tasks, Pro for complex reasoning) and control how much the model computes before responding.

Personalization in Gemini with Privacy Controls

A key theme of the keynote was deeper personalization with user consent. Google calls this personal context: with your permission, Gemini can draw on relevant signals across Google apps to offer tailored help. In Gmail, for example, personalized Smart Replies will reference your past emails and Drive files to draft responses that match your usual tone and content.

In 2025, the Gemini app added features that extend this personalization: an option to let Gemini learn from past conversations, and a Temporary Chats mode for one-off conversations that are not saved or used to personalize future responses. Google continues to refine its privacy controls as Gemini takes on more assistant-like capabilities on Android. As always, check the latest settings to manage data sharing and retention.

Why it matters: Personalization can enhance the utility of AI, provided there are clear controls over what data is used and when.

Generative Media: Veo 3, Imagen 4, and Flow

On the creative front, Google unveiled key upgrades:

- Veo 3 is Google’s latest video model and can now generate native audio, including sound effects, background noise, and dialogue, to accompany its visuals, a significant advance beyond silent video generation.

- Imagen 4 improves the speed and fidelity of image generation and renders readable text much better, an area where older models often struggled. It will be available across the Gemini app, Workspace apps, and Vertex AI.

- Flow is a new AI filmmaking tool built with Veo, Imagen, and Gemini. It offers camera controls, a scene builder for editing and extending shots, and asset management, all aimed at consistent, cinematic sequences rather than isolated clips. Flow is rolling out to paying subscribers and expanding to additional regions.

Why it matters: For those involved in content creation, marketing, education, or film, these tools can significantly expedite the transition from concept to storyboard to refined sequences, while maintaining consistency in characters and styles.

What This All Means

In summary, I/O 2025 emphasized making AI more personalized, proactive, and practical. Here’s what you can expect:

- Infrastructure: Ironwood pods provide the power needed for rapid, cost-effective inference.

- Models: Gemini 2.5 Flash and Pro give you flexibility between speed and reasoning, with Deep Think for challenging tasks.

- Agents: Project Mariner and Agent Mode begin to facilitate actions on your behalf, while open protocols like A2A and MCP aim to foster collaboration.

- Products: AI Mode in Search, Search Live, Gemini Live, Beam, and Meet translation integrate these capabilities into familiar environments.

The consistent theme is clear: research is evolving into practical applications that enhance everyday life.

Practical Examples to Try

- Use AI Mode to plan a complex purchase, asking detailed questions about budget, specifications, and trade-offs, and expect to receive citations and relevant links for verification.

- Use Gemini Live for troubleshooting. Share your screen so Gemini can compare product pages with you, or point your camera at a device and ask which adapter it needs.

- Draft smarter emails in Gmail. When available, enable personalized Smart Replies and watch as Gemini replicates your tone and context (always review before sending!).

- In Google Meet, turn on speech translation (in beta for AI Pro and AI Ultra subscribers) for English-Spanish conversations; try it in casual settings first.

- In Flow, add assets to build a scene, extend shots, and experiment with how different prompts affect camera movement.

FAQs

1. What is AI Mode in Google Search?

AI Mode offers a chat-like Search experience, designed to handle more complex queries. It employs a query fan-out strategy to explore subtopics and synthesizes answers with citations and links for further exploration. This feature is currently being rolled out in the U.S., with continuous enhancements, including Gemini 2.5 integration and advanced research tools for subscribers.

2. How is Project Mariner different from traditional assistants?

Mariner operates as an agent that can interact with a browser like a human, interpreting web elements and executing actions such as filling out forms and conducting searches. It can handle multiple tasks and learn workflows through a feature called teach and repeat.

3. What does A2A mean, and why is it important?

A2A, or Agent2Agent, is an open protocol that enables AI agents from different vendors to communicate and collaborate. This promotes interoperability and prevents vendor lock-in. Google also supports Model Context Protocol (MCP), allowing agents to access various tools and services.

4. What is Deep Think in Gemini 2.5 Pro?

Deep Think is an advanced reasoning mode designed for complex tasks such as mathematics and coding. It was initially available to select testers and began rolling out to AI Ultra subscribers in August 2025, with ongoing assessments for safety and effectiveness.

5. When can I try Google Beam?

HP’s first Beam device is expected for early customers in 2025, with enterprise availability to follow. Initial reports estimate the price at around $24,999 for HP’s Dimension system, which will integrate with Google Meet and Zoom.

Conclusion

Google I/O 2025 demonstrated the company’s rapid advancement across multiple areas: hardware, models, agents, and practical applications. Ironwood provides the necessary scale, while Gemini 2.5 offers flexibility between speed and reasoning. Protocols like A2A and MCP enable interconnected agents, and frontline experiences—including AI Mode, Search Live, Gemini Live, Beam, and Meet translation—make these innovations accessible and useful.

Whether you’re a curious reader, developer, or business leader, the message remains clear: AI is evolving from simple commands to proactive systems that perceive, reason, and act seamlessly in the flow of our lives and work.

Thank You for Reading this Blog and See You Soon! 🙏 👋
