How Tokenization Enhances Text Understanding in AI

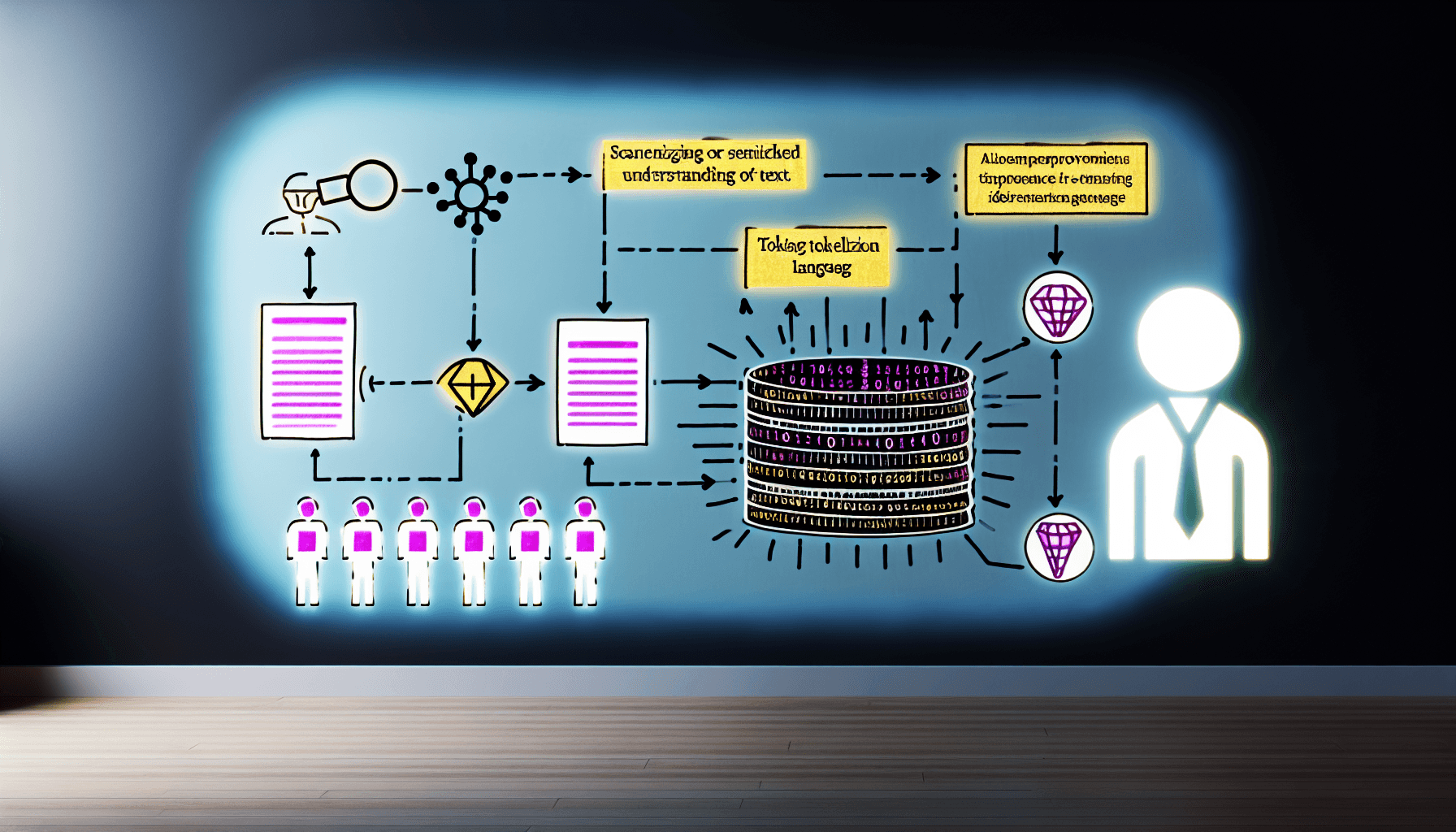

Introduction to Tokenization in Artificial Intelligence

Understanding human language is one of the harder problems in artificial intelligence (AI). An AI system has to interpret text, and often predict it, in a way that matches how people read it. Tokenization is the first step toward that goal. In this post, we look at how tokenization shapes text comprehension in AI applications.

Understanding the Basics of Tokenization

Tokenization is the process of converting a sequence of characters into smaller units, called tokens. This breakdown helps in parsing and understanding text by transforming unstructured data into a format that AI algorithms can more effectively process. Each token, which can be a word, a part of a word, or even punctuation, is a significant element in the analysis of text.
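
To make the idea concrete, here is a minimal sketch of that breakdown in Python. The `tokenize` helper and its regular expression are illustrative assumptions, not a standard API; production tokenizers handle many more cases.

```python
import re

def tokenize(text: str) -> list[str]:
    """Split text into word tokens and single punctuation tokens."""
    # \w+ matches a run of letters, digits, or underscores (a "word");
    # [^\w\s] matches one character that is neither a word character nor whitespace.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("AI reads text, one token at a time.")
print(tokens)
# ['AI', 'reads', 'text', ',', 'one', 'token', 'at', 'a', 'time', '.']
```

Note that the comma and the full stop come out as their own tokens, which is what lets later steps treat punctuation separately from words.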

The Significance of Token Types in AI

Different types of tokens serve different purposes in AI text understanding. Some tokens represent individual words, while others stand for larger phrases, numbers, punctuation, or specialized symbols that matter for semantic analysis. Developers building AI models to interpret human language accurately need to know which token types their tokenizer produces and what each one is for.
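
One way to picture token types is to label each token as it is extracted. The sketch below is an assumption-laden illustration: the three categories (`NUMBER`, `WORD`, `PUNCT`) and the `typed_tokens` helper are hypothetical names chosen for this example, and real systems usually define many more types.

```python
import re
from typing import NamedTuple

class Token(NamedTuple):
    kind: str   # hypothetical type label: "NUMBER", "WORD", or "PUNCT"
    text: str   # the exact characters matched

# Alternatives are tried left to right, so numbers are checked before words.
TOKEN_PATTERN = re.compile(
    r"(?P<NUMBER>\d+(?:\.\d+)?)"             # integers and simple decimals
    r"|(?P<WORD>[^\W\d_]+(?:'[^\W\d_]+)?)"   # letters, with an optional contraction
    r"|(?P<PUNCT>[^\w\s])"                   # any single punctuation character
)

def typed_tokens(text: str) -> list[Token]:
    """Return every token in the text together with its type label."""
    return [Token(m.lastgroup, m.group()) for m in TOKEN_PATTERN.finditer(text)]

for token in typed_tokens("It costs 3.50 dollars, doesn't it?"):
    print(token.kind, token.text)
```

Here `3.50` stays together as one `NUMBER` token and `doesn't` stays together as one `WORD` token, which a plain split on punctuation would break apart.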

Role of Tokenization in Natural Language Processing (NLP)

Natural Language Processing (NLP) is a subfield of AI that focuses on the interaction between computers and humans through natural language. The aim of NLP is to read, interpret, and make sense of human language in a way that is useful. Tokenization is a foundational step in NLP: it affects data preprocessing, language modeling, and ultimately the results of AI-driven applications such as chatbots and voice-operated devices.

Tokenization and Semantic Analysis

Tokenization contributes to semantic analysis by giving machines consistent units of text to work with. Once a sentence is split into tokens, a model can examine how each word or phrase is used in context. That, in turn, helps it discern the intent and sentiment of a text, which is essential for applications like sentiment analysis, market intelligence, and customer feedback processing.
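
As a rough illustration of how tokens feed sentiment analysis, the sketch below scores a sentence by looking up each token in two tiny word lists. The lists and the `sentiment_score` function are made up for this example; real sentiment systems rely on trained models rather than hand-written lexicons.

```python
import re

# Hypothetical mini-lexicons, for illustration only.
POSITIVE = {"great", "love", "helpful"}
NEGATIVE = {"slow", "broken", "terrible"}

def sentiment_score(text: str) -> int:
    """Count positive tokens minus negative tokens in the text."""
    # Lowercase first so "Great" and "great" become the same token.
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum((t in POSITIVE) - (t in NEGATIVE) for t in tokens)

print(sentiment_score("Great product, love it!"))  # 2
print(sentiment_score("The support team was helpful, but the app is slow and broken."))  # -1
```

Without the tokenization step, `helpful,` (with its trailing comma) would not match `helpful` in the lexicon, and the score would be wrong.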

Tokenization in Machine Learning Models

For machine learning models, good tokenization can improve both learning efficiency and accuracy. Standardizing the input means the same text always maps to the same tokens, so the model sees consistent patterns and can make better predictions from textual information. This benefits AI applications in sectors such as healthcare, finance, and customer service.
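
Standardizing input usually ends with turning tokens into integer IDs of a fixed length. The following sketch shows one common pattern under stated assumptions: the `<pad>` and `<unk>` special tokens, the function names, and the toy corpus are all illustrative choices, not a specific library's API.

```python
def build_vocab(corpus: list[list[str]]) -> dict[str, int]:
    """Assign each distinct token an integer ID, in order of first appearance."""
    vocab = {"<pad>": 0, "<unk>": 1}  # reserved IDs for padding and unknown tokens
    for tokens in corpus:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab

def encode(tokens: list[str], vocab: dict[str, int], max_len: int) -> list[int]:
    """Map tokens to IDs, truncating or padding to exactly max_len entries."""
    ids = [vocab.get(t, vocab["<unk>"]) for t in tokens[:max_len]]
    return ids + [vocab["<pad>"]] * (max_len - len(ids))

corpus = [["the", "patient", "is", "stable"], ["the", "invoice", "is", "paid"]]
vocab = build_vocab(corpus)
print(encode(["the", "patient", "is", "discharged"], vocab, max_len=6))
# [2, 3, 4, 1, 0, 0]
```

The unseen word `discharged` maps to the `<unk>` ID (1), and the two trailing zeros are padding, so every input reaches the model with the same shape.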

Tokenization Techniques and Their Impact

There are several tokenization techniques, each with its own effect on how an AI system processes text. The right choice depends on the requirements and constraints of the application. Common methods include whitespace tokenization, which splits on spaces; rule-based tokenization, which applies patterns to handle punctuation and similar cases; and subword tokenization, which breaks rare or unknown words into smaller known pieces. Each offers a different level of precision.
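
The three methods named above can be compared side by side in a short sketch. Everything here is a simplified assumption: the function names are hypothetical, the subword vocabulary is hand-picked, and the greedy longest-match splitter only resembles what production subword tokenizers do (they learn their vocabulary from data and mark word-internal pieces).

```python
import re

def whitespace_tokenize(text: str) -> list[str]:
    """Split on runs of whitespace only; punctuation stays attached to words."""
    return text.split()

def rule_based_tokenize(text: str) -> list[str]:
    """Apply a simple rule: words and punctuation marks become separate tokens."""
    return re.findall(r"\w+|[^\w\s]", text)

def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Greedily split one word into the longest pieces found in vocab."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it is in the vocabulary.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:          # no known piece starts here
            return ["<unk>"]
        pieces.append(word[start:end])
        start = end
    return pieces

text = "Don't panic, it works!"
print(whitespace_tokenize(text))   # ["Don't", 'panic,', 'it', 'works!']
print(rule_based_tokenize(text))   # ['Don', "'", 't', 'panic', ',', 'it', 'works', '!']

subword_vocab = {"token", "ization", "un", "believ", "able"}  # hand-picked for the demo
print(subword_tokenize("tokenization", subword_vocab))   # ['token', 'ization']
print(subword_tokenize("unbelievable", subword_vocab))   # ['un', 'believ', 'able']
```

The whitespace version leaves `panic,` and `works!` with punctuation attached, the rule-based version separates them, and the subword version can represent a word it has never seen whole as long as its pieces are known.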

Challenges in Tokenization

Despite its advantages, tokenization presents challenges such as dealing with multilingual text, understanding context, and managing variations in linguistic expressions. These obstacles necessitate continual advancements and refinements in tokenization algorithms to enhance AI’s linguistic comprehension.
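
Two of these challenges are easy to reproduce with Python's standard library. The sketch below is only an illustration: the `normalize` helper is a hypothetical name, and Unicode normalization plus case folding is one possible mitigation among several, not a complete answer to multilingual text.

```python
import unicodedata

def normalize(text: str) -> str:
    """Put text into one canonical Unicode form and fold case before tokenizing."""
    return unicodedata.normalize("NFC", text).casefold()

# Variation in expression: the same word can be stored as different code points.
composed = "caf\u00e9"      # 'é' as a single code point
decomposed = "cafe\u0301"   # 'e' followed by a combining acute accent
print(composed == decomposed)                        # False
print(normalize(composed) == normalize(decomposed))  # True

# Multilingual text: Chinese has no spaces between words,
# so whitespace tokenization returns the whole sentence as one token.
print(len("我喜欢自然语言处理".split()))  # 1
```

The first case shows why inputs are often normalized before tokenization, and the second shows why a method that works for English cannot simply be reused for every language.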

Advancements in Tokenization Technology

As AI technologies evolve, so do tokenization methods. Recent advancements include the development of more sophisticated algorithms that can handle complex linguistic phenomena with greater accuracy. These improvements are crucial for building next-generation AI systems that can understand and interact in human language more effectively.

Tokenization and The Future of AI

Looking ahead, tokenization is likely to play an even larger role in the development of AI. As research continues, tokenization strategies should keep improving, supporting more robust AI systems that can understand language with near-human accuracy.

Conclusion

Tokenization is a cornerstone of effective text analysis in AI, crucial for transforming raw text into intelligible data that AI systems can process and learn from. As AI continues to penetrate every aspect of modern life, tokenization will remain fundamental in enhancing the ability of machines to understand and interact with human language.

Thank You for Reading this Blog and See You Soon! 🙏 👋
