How Google Raced to Catch OpenAI: Inside a Turbulent Two-Year Pivot

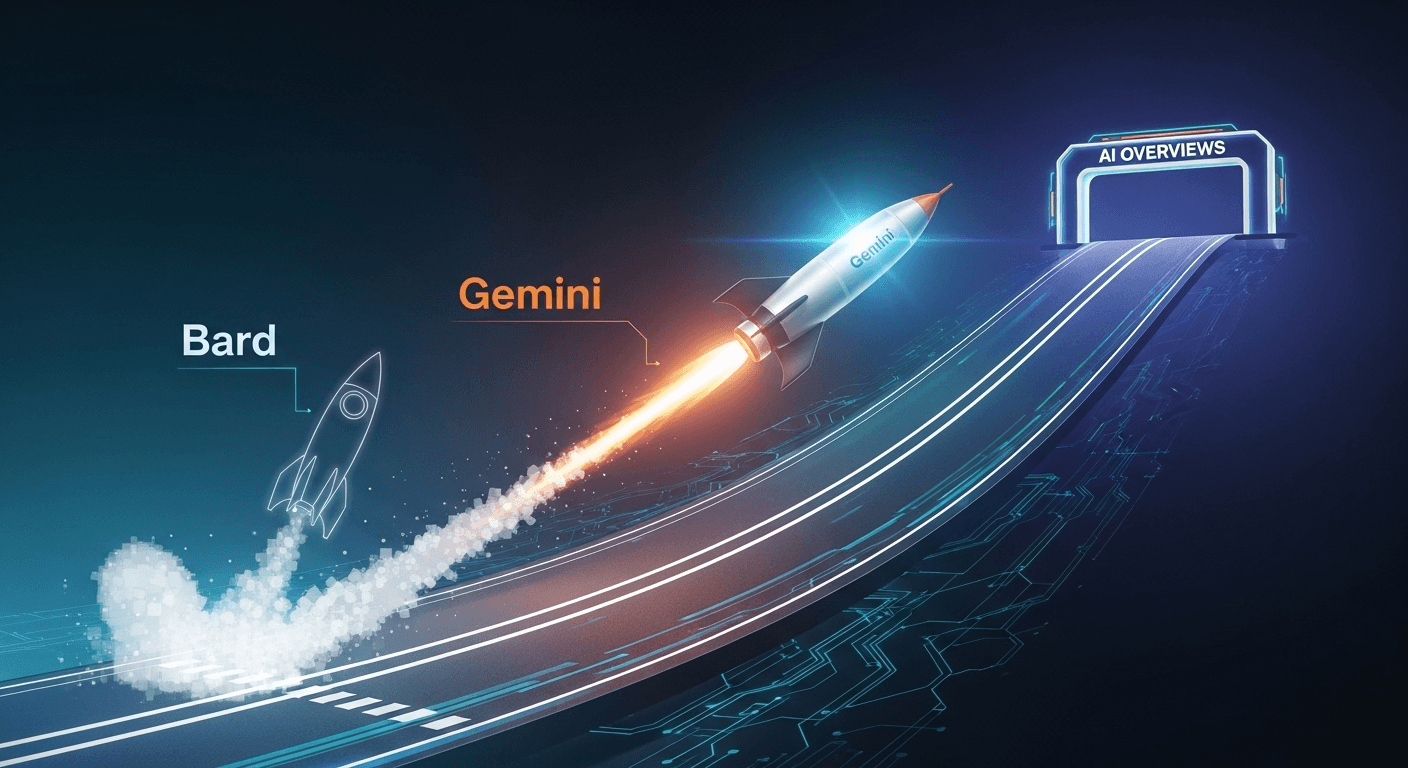

In late 2022, ChatGPT’s launch shook up the tech landscape and set off alarms at Google. What followed was a frenetic race: launching Bard, merging research teams into Google DeepMind, unveiling Gemini, and rethinking Search around AI. Along the way, Google faced public stumbles, stiff competition, and hard decisions about safety and scale. Here’s an overview of that story, its successes and setbacks, and what it implies for the future of AI at a company that helped pioneer it.

From Shock to Sprint: When ChatGPT Changed the Stakes

ChatGPT’s explosive launch in November 2022 brought generative AI to a global audience and put immense pressure on Google, whose researchers had laid much of the groundwork for transformer and large language model technology. Google rushed to ship a consumer chatbot built on its LaMDA model, announcing Bard in February 2023 and opening it to the public in March. The haste proved costly: in Bard’s launch promotion, the chatbot incorrectly claimed that the James Webb Space Telescope took the very first pictures of a planet outside our solar system, and Alphabet lost roughly $100 billion in market value the day the error surfaced [Reuters]. The incident showed how intensely the new era of AI would be scrutinized.

Despite the stumble, Google had little choice but to keep pushing. Microsoft had just integrated OpenAI’s models into Bing and Edge, giving it a foothold in AI-powered search [Microsoft]. In response, Google introduced the Search Generative Experience (SGE) in May 2023, blending LLM-generated answers with traditional search results [Google].

Reorganizing for Speed: Google Brain and DeepMind Become One

As the competition intensified, Google made a structural change that would shape its next moves. In April 2023, Sundar Pichai announced that Google Research’s Brain team and DeepMind would merge into a single unit, Google DeepMind, led by CEO Demis Hassabis. Jeff Dean became Chief Scientist, tasked with steering long-term research [Google]. The goal was straightforward: consolidate talent, reduce duplicated effort, and speed up the development of breakthrough models.

This reorganized team focused on unifying Google’s suite of models under a cohesive brand – Gemini.

Gemini Arrives: Unifying Google’s Model Strategy

In December 2023, Google introduced Gemini, a multimodal model family that can process text, images, audio, video, and code. It launched in three sizes: Ultra (the flagship), Pro (general purpose), and Nano (on-device). On Google’s own published benchmarks, Gemini Ultra matched or outperformed leading models in several areas, including coding and multimodal reasoning [Google DeepMind]. The message was clear: Google was back in the race.

The momentum continued in February 2024, when Bard was rebranded as Gemini. The same month, Google introduced Gemini 1.5, whose context window of up to 1 million tokens, initially offered to select developers in preview, makes it possible to analyze long documents, videos, and codebases in a single prompt [Google].

On-Device AI and Developer Reach

Google also pushed AI onto devices. Gemini Nano shipped on the Pixel 8 Pro, powering features such as Summarize in the Recorder app and Smart Reply in Gboard; because Nano runs on the device itself, these features can process data locally, positioning Android as a platform for privacy-conscious AI [Google]. Meanwhile, the Gemini API became available through Google AI Studio for developers and Vertex AI for enterprises [Google Cloud].

Hard Lessons in Public: Image Generation and AI Overviews

Momentum brought problems, too. In February 2024, Gemini’s image generation drew criticism for historically inaccurate depictions of people, and Google paused the generation of images of people [The Verge]. The company acknowledged the problem and committed to improving safeguards and quality before bringing the feature back [Google].

In May 2024, the wider rollout of AI Overviews in Search produced bizarre and impractical answers, such as suggesting glue to keep cheese on pizza and presenting satirical content as fact. Google maintained that AI Overviews improved user satisfaction overall, but it moved quickly to fix the failures, improving detection of nonsensical queries, limiting satirical and user-generated content in responses, and adding further safeguards [The Verge] [Google]. The episode highlighted a central challenge: scaling useful AI features while minimizing confidently wrong answers.

A Talent War and a Consumer AI Push

The competition extended to talent. In March 2024, Microsoft, OpenAI’s largest backer, hired Mustafa Suleyman, a DeepMind co-founder and CEO of Inflection AI, to lead a new consumer group called Microsoft AI. Karén Simonyan, Inflection’s chief scientist, joined him along with several Inflection colleagues [Microsoft]. The move put a DeepMind founder in charge of a rival’s consumer AI push, including Copilot.

At Google I/O 2024, the company previewed Project Astra, a multimodal assistant that sees the world through a phone camera and responds in real time. Astra’s low-latency, conversational design invited direct comparison with OpenAI’s GPT-4o, demonstrated the day before, which showcased natural, fast-responding voice and vision interaction [Google] [OpenAI].

Compute, Safety, and Scale: The Less Visible Work

Betting Big on Infrastructure

AI models are only as capable as the compute and data behind them. Google has invested heavily in custom chips and data centers, including TPU v5e and v5p for training and inference. It has also expanded Nvidia GPU offerings on Google Cloud, giving enterprises more flexible and cost-effective options for running generative AI [Google Cloud].

Safety, Alignment, and Provenance

Public missteps have sharpened Google’s focus on safety. The company has prioritized red-teaming and evaluation frameworks for generative models, along with watermarking and provenance tools such as SynthID, which embeds imperceptible watermarks in AI-generated content so it can be identified later [Google DeepMind]. Google has also published its AI Principles for responsible development and taken part in industry and policy discussions on model governance [Google].

Where Google Stands Now

Two years into this race, Google appears both humbled and reinvigorated. It has launched a unified model family, consolidated its research leadership, built AI into core products, and widened developer access. It has also learned hard lessons about deploying consumer AI quickly.

Measured against OpenAI, the gap shifts from release to release. OpenAI still leads on polished consumer experiences with ChatGPT and GPT-4o, while Google counters with Gemini’s long context windows, on-device AI on Android and Pixel, broad distribution through Search and Workspace, and large-scale infrastructure. Future success may depend less on raw benchmark scores and more on reliability, responsiveness, cost, and how seamlessly AI fits into daily work.

Financially, Google is starting to turn its AI investments into revenue through Cloud, advertising, and subscriptions. Management has repeatedly cited AI-enhanced Search, Workspace features, Vertex AI growth, and Android differentiation as drivers across Alphabet’s businesses [Alphabet IR]. At the same time, the company acknowledges that training new models and expanding infrastructure remain capital-intensive.

Key Moments in Google’s AI Catch-Up

- Nov 2022 – ChatGPT launches, accelerating consumer interest in generative AI.

- Feb 2023 – Bard debuts, immediately encountering scrutiny over a factual error [Reuters].

- Apr 2023 – Google merges Brain and DeepMind into Google DeepMind [Google].

- May 2023 – Search Generative Experience launches in Labs [Google].

- Dec 2023 – Gemini announced in Ultra, Pro, and Nano configurations [Google DeepMind].

- Feb 2024 – Bard rebrands to Gemini; Google introduces Gemini 1.5 with extended context windows [Google].

- Feb 2024 – Google pauses Gemini’s image generation following inaccuracies [The Verge].

- Mar 2024 – Microsoft hires DeepMind co-founder Mustafa Suleyman to lead Microsoft AI [Microsoft].

- May 2024 – AI Overviews roll out more widely; Google addresses reliability issues [Google].

- May 2024 – Project Astra previewed at I/O; OpenAI showcases GPT-4o [Google] [OpenAI].

What It Means for Users and Businesses

For users, the next wave of AI products should feel more helpful to the extent that it is fast, consistent, and well integrated. Expect assistive features across Search, Android, Pixel, and Workspace, with Gemini working in the background. For businesses, the shift centers on productivity and reliability: long-context models that can process entire code repositories, structured outputs that plug into workflows, and clear governance backed by provenance signals.

As the technology evolves, key questions remain: Can these systems be trusted? Are they auditable? Are costs predictable? Can they operate within local data-residency constraints? Google’s strategy combines broad consumer reach, developer platforms, and infrastructure at scale. Whether that is enough to catch or overtake OpenAI will depend on real-world execution as these systems scale.

Conclusion: A Long Race, Not a Single Sprint

Looking back on the past two years, Google’s sprint involved far more than launching a chatbot. It meant reorganizing talent, rethinking product strategy, and recommitting to delivering groundbreaking AI responsibly at global scale. Along the way, Google learned from missteps, made adjustments, and consolidated leadership under Google DeepMind, while building a unified model platform with safety and integration across its major products.

The rivalry with OpenAI will continue, pushing both sides forward. Users and enterprises are already benefiting from faster, more capable, and more accessible AI. The next chapters will likely be decided by who can deliver both speed and reliability, not raw intelligence alone.

FAQs

What is Gemini, and how is it different from Bard?

Gemini is Google’s family of multimodal AI models. Bard was Google’s original chatbot interface; in February 2024 it was renamed Gemini and upgraded to the latest Gemini models. The models handle text, images, audio, and code and come in several sizes, such as Ultra, Pro, and Nano, for different uses [Google DeepMind] [Google].

Why did Google merge Brain and DeepMind?

The merger aimed to speed up progress and reduce duplicated effort. Forming Google DeepMind in April 2023 brought leadership and resources for frontier model research and engineering under one roof, with Demis Hassabis as CEO and Jeff Dean as Chief Scientist [Google].

What went wrong with AI Overviews?

Early AI Overviews sometimes presented low-quality or satirical content as fact, producing misleading answers. Google improved its detection of nonsensical queries, limited satirical and user-generated content, and added safeguards while continuing the rollout [Google].

How does Google’s approach compare with OpenAI’s?

OpenAI focuses on creating polished, centralized experiences like ChatGPT and GPT-4o. In contrast, Google emphasizes a model platform (Gemini) that is integrated across Search, Android, Workspace, and Cloud. Both aim for multimodal, real-time interactions, but Google’s strengths lie in its distribution capabilities and on-device features [Google] [OpenAI].

What should enterprises watch next?

Enterprises should keep an eye on long-context reasoning capabilities, predictable latency and costs, robust safety measures, and smooth integration with existing data systems. Google is advancing in all these areas through Gemini 1.5, Vertex AI, and Cloud TPU and GPU offerings, alongside provenance tools like SynthID [Google Cloud] [Google DeepMind].

Sources

- Google shares fall after Bard demo error – Reuters

- Announcing Google DeepMind – Google

- Generative AI in Search (SGE) – Google

- New AI-powered Bing and Edge – Microsoft

- Introducing Gemini – Google DeepMind

- Gemini 1.5 and long context – Google

- Google pauses Gemini image generation – The Verge

- Improving Gemini’s image generation – Google

- Improving AI Overviews – Google

- AI Overviews backlash – The Verge

- Mustafa Suleyman joins Microsoft to lead Microsoft AI – Microsoft

- Google I/O 2024 highlights, including Project Astra – Google

- Introducing GPT-4o – OpenAI

- Cloud TPU v5e – Google Cloud

- Cloud TPU v5p – Google Cloud

- SynthID watermarking – Google DeepMind

- AI Principles – Google

- Alphabet Investor Relations – Alphabet

Thank You for Reading this Blog and See You Soon! 🙏 👋
