Google Introduces Labels for AI-Generated Images in Search: What You Need to Know

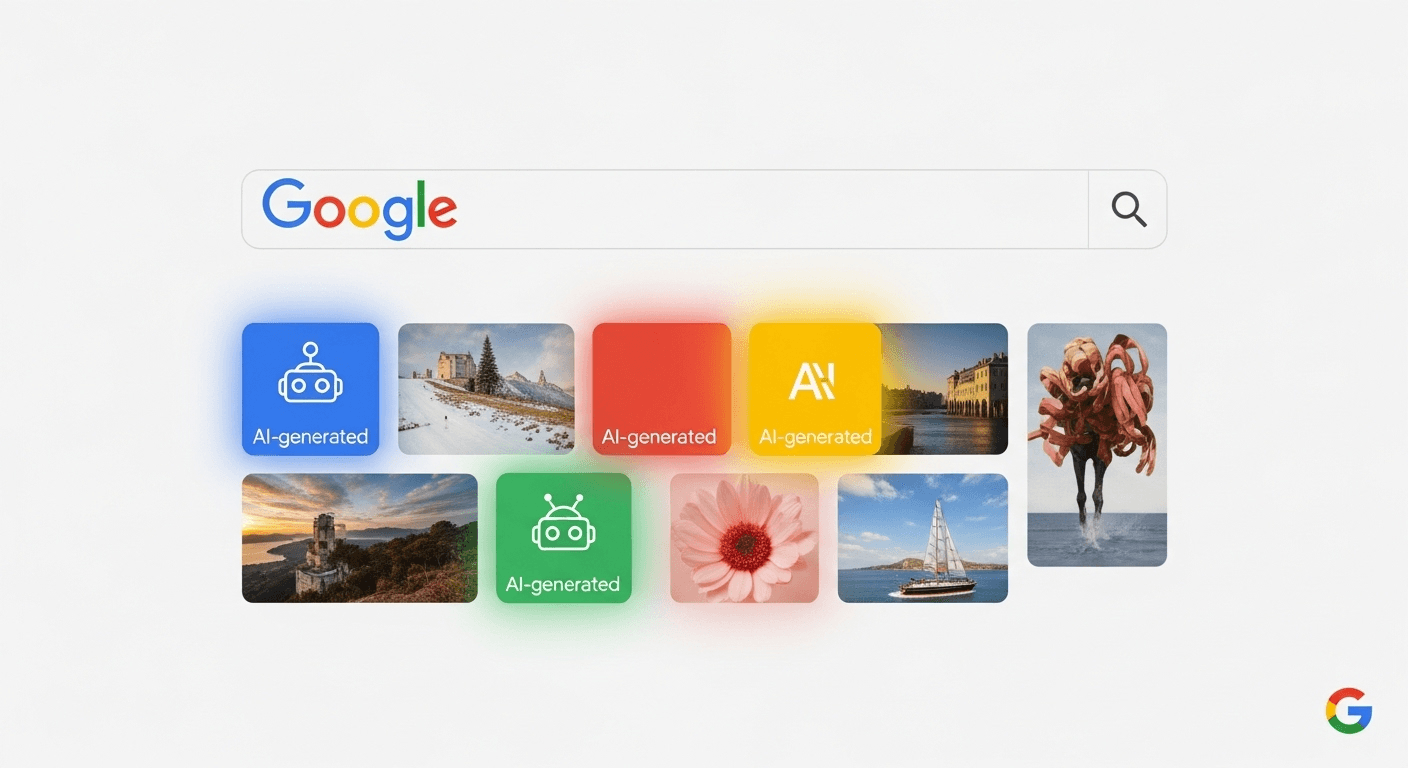

Google is rolling out new labels to identify AI-generated images in Search and Google Images. Here’s what this means for users, creators, and publishers, and how the labels work.

Why This Update Matters

AI image generation has opened up new creative possibilities, but it has also made it harder to tell what is real. Labels that show when an image was created or significantly altered by AI can help users judge credibility quickly, slow the spread of misinformation, and understand what they are looking at.

Google has been building toward this with features like “About this image,” support for industry metadata standards, and research into invisible watermarking. The new step adds a straightforward label to AI-generated images in search results when reliable signals are available. It is part of a broader push for content authenticity led by the Coalition for Content Provenance and Authenticity (C2PA) and the Content Authenticity Initiative (CAI).

What Is Changing

Google Search and Google Images will show labels such as “AI-generated” on image thumbnails, detail views, or both when Google can confirm that an image is synthetic or significantly modified by AI. It relies on trustworthy signals:

- Metadata provided by the image creator or publisher using standards like IPTC Photo Metadata and C2PA Content Credentials.

- Google’s own signals for images created by its tools, which embed disclosure by default.

- Context from “About this image,” which shows when an image was first indexed and how it has been described online.

Initially, expect to see labels on images produced by major AI platforms that already include provenance data, as well as on images generated by Google’s tools. Coverage will expand as more tools, platforms, and publishers adopt these standards and as Google improves its detection and labeling.

How Google’s Labeling Works

The labeling system relies on three key components:

1) Open Provenance Standards in Metadata

Modern image files can include embedded metadata that records how they were created and edited. Two standards are central:

- IPTC Photo Metadata – Commonly used in news and photography, this standard now includes a Digital Source Type field that indicates whether an image is an original photograph, a composite, or synthetic. See the IPTC Photo Metadata Standard for the full specification.

- C2PA Content Credentials – This industry framework cryptographically signs an asset’s provenance, showing which app or camera created it and what modifications followed. It is supported by major companies, including Adobe, Microsoft, OpenAI, and Google. See the C2PA and CAI websites for details.
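To make the Digital Source Type field concrete, here is a minimal Python sketch that pulls an XMP packet out of raw image bytes and reads `Iptc4xmpExt:DigitalSourceType`. It assumes the packet uses the conventional `x:xmpmeta` wrapper and is stored uncompressed in the file; the function names are illustrative, not part of any library. A production tool would use a dedicated metadata library and would also validate C2PA manifests, which this sketch does not attempt.

```python
import re
import xml.etree.ElementTree as ET
from typing import Optional

# XMP namespaces used below (IPTC Extension schema and RDF).
IPTC_EXT_NS = "http://iptc.org/std/Iptc4xmpExt/2008-02-29/"
RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"


def extract_xmp(data: bytes) -> Optional[str]:
    """Return the first <x:xmpmeta>...</x:xmpmeta> packet found in raw file bytes."""
    match = re.search(rb"<x:xmpmeta.*?</x:xmpmeta>", data, re.DOTALL)
    return match.group(0).decode("utf-8") if match else None


def digital_source_type(data: bytes) -> Optional[str]:
    """Return the Digital Source Type IRI if the XMP packet declares one."""
    xmp = extract_xmp(data)
    if xmp is None:
        return None
    root = ET.fromstring(xmp)
    # Form 1: the property is written as a child element of rdf:Description.
    elem = root.find(f".//{{{IPTC_EXT_NS}}}DigitalSourceType")
    if elem is not None and elem.text:
        return elem.text.strip()
    # Form 2: XMP shorthand, where the property is an attribute on rdf:Description.
    for desc in root.iter(f"{{{RDF_NS}}}Description"):
        value = desc.get(f"{{{IPTC_EXT_NS}}}DigitalSourceType")
        if value:
            return value.strip()
    return None
```

Calling `digital_source_type(open("photo.jpg", "rb").read())` on a hypothetical `photo.jpg` returns an IRI such as `http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia`, or `None` when the field is absent.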

Google Search uses these signals to display clear labels such as “AI-generated” or “Labeled by the creator as AI-generated” when applicable.

2) Invisible Watermarking (SynthID)

Some AI systems, including Google’s, embed invisible watermarks in the images they generate. Google DeepMind’s SynthID is designed so that its watermark survives common edits such as resizing or color adjustments. Because the watermark lives in the pixels rather than in removable metadata, it can help identify AI-generated images even after metadata has been stripped. See DeepMind’s SynthID overview for more information.

No watermark is infallible, however. Heavy editing can make detection harder, which is why Google combines watermark checks with metadata and other contextual information.
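This is not SynthID, whose image method is not reproduced here. It is a classic spread-spectrum toy that shows the general idea behind invisible watermarks: a key-derived ±1 pattern is added faintly to every pixel, and detection correlates the image with that pattern. The toy survives a uniform brightness change but is wiped out by a heavy blur, which mirrors the limitation described above. The image is random stand-in data, and the strength and threshold values are arbitrary assumptions.

```python
import random


def make_pattern(key: int, n: int) -> list[int]:
    """Secret +/-1 pattern derived from a key; only the key holder can regenerate it."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels: list[float], pattern: list[int], strength: float = 4.0) -> list[float]:
    """Add a faint copy of the pattern to every pixel."""
    return [p + strength * w for p, w in zip(pixels, pattern)]


def score(pixels: list[float], pattern: list[int]) -> float:
    """Correlation of mean-removed pixels with the pattern: near `strength` if marked, near 0 if not."""
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * w for p, w in zip(pixels, pattern)) / len(pixels)


def box_blur(pixels: list[float], radius: int = 4) -> list[float]:
    """A heavy edit: replace each pixel with the average of its neighbours."""
    out = []
    for i in range(len(pixels)):
        window = pixels[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


if __name__ == "__main__":
    rng = random.Random(1)
    original = [float(rng.randrange(256)) for _ in range(256 * 256)]  # flattened stand-in image
    pattern = make_pattern(key=42, n=len(original))
    marked = embed(original, pattern)
    threshold = 2.0  # halfway between "absent" (about 0) and "present" (about 4)
    for name, img in [("original", original),
                      ("marked", marked),
                      ("marked + brightened", [p + 30 for p in marked]),
                      ("marked + heavy blur", box_blur(marked))]:
        s = score(img, pattern)
        print(f"{name:22s} score={s:5.2f} detected={s > threshold}")
```

Mean removal makes the score immune to a brightness shift, while the blur averages each ±1 value with eight random neighbours and leaves only about a ninth of the signal, below the threshold.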

3) Context from “About this image”

“About this image” in Google Search shows when an image was first indexed, where else it has appeared, and how websites describe it. This context helps users spot recycled photos that are being misused, even when an image carries no AI metadata. You can open it directly from image results; Google Search Help documents the feature in more detail.
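The recycled-photo check this context enables comes down to one comparison: if an image was online before the event it supposedly shows, it cannot be a photo of that event. The sketch below encodes that reasoning over a hypothetical list of sightings. “About this image” is a feature in Search, not an API, so the `Sighting` records, helper names, and URLs here are made up for illustration.

```python
from datetime import date
from typing import NamedTuple


class Sighting(NamedTuple):
    """Hypothetical record: a page where the image appeared and when it was first seen there."""
    url: str
    first_seen: date


def earliest_sighting(sightings: list[Sighting]) -> Sighting:
    """Return the oldest known appearance of the image."""
    return min(sightings, key=lambda s: s.first_seen)


def looks_recycled(sightings: list[Sighting], claimed_event_date: date) -> bool:
    """True if the image was already online before the event it is claimed to show."""
    return earliest_sighting(sightings).first_seen < claimed_event_date


sightings = [
    Sighting("https://example.com/old-story", date(2019, 5, 2)),
    Sighting("https://example.org/viral-post", date(2024, 3, 1)),
]
print(looks_recycled(sightings, claimed_event_date=date(2024, 2, 28)))  # True
```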

Where You Will See the Labels

- Google Images – Labels will be visible on grid thumbnails and in the image viewer when there are reliable signals indicating AI generation.

- Standard Search – Labels will appear in image packs, knowledge panels, Perspectives, and other sections that include images.

- Lens and Mobile Platforms – Expect similar integration across various visual discovery experiences.

Label design may vary based on the platform and will likely evolve with user feedback and testing.

What Qualifies as “AI-Generated”?

There are various degrees of synthetic content, and the labels will reflect this nuance:

- Fully Synthetic – Images created entirely by a generative model from a text prompt or other input. These receive an AI-generated label when signals are present.

- Significantly Modified by AI – Real photos altered in ways that change their meaning or accuracy (for instance, swapped faces or inserted fake objects). These are also labeled when a reliable AI disclosure, such as metadata or a watermark, is present.

- Light Edits – Basic enhancements like exposure adjustments, cropping, or dust removal will not trigger a label.
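These tiers line up loosely with values from the IPTC Digital Source Type vocabulary. The mapping below is an illustrative assumption, not Google’s published policy, and it covers only three codes. Anything unknown or missing returns `None`, matching the rule that no label appears without a reliable signal.

```python
from enum import Enum
from typing import Optional

# Prefix shared by IPTC Digital Source Type NewsCodes IRIs.
NEWSCODES = "http://cv.iptc.org/newscodes/digitalsourcetype/"


class Tier(Enum):
    FULLY_SYNTHETIC = "fully synthetic"
    SIGNIFICANTLY_MODIFIED = "significantly modified by AI"
    NO_AI_LABEL = "no AI label"


# Hypothetical mapping from a few IPTC codes to the article's tiers.
TIER_BY_CODE = {
    "trainedAlgorithmicMedia": Tier.FULLY_SYNTHETIC,
    "compositeWithTrainedAlgorithmicMedia": Tier.SIGNIFICANTLY_MODIFIED,
    "digitalCapture": Tier.NO_AI_LABEL,
}


def tier_for(source_type_iri: Optional[str]) -> Optional[Tier]:
    """Return the tier for a known code, or None when there is no usable signal."""
    if not source_type_iri or not source_type_iri.startswith(NEWSCODES):
        return None
    return TIER_BY_CODE.get(source_type_iri[len(NEWSCODES):])
```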

The labels describe how an image was created or significantly edited, not the creator’s intent or whether the image is truthful.

Implications for Creators and Publishers

This update offers an opportunity for web publishers to strengthen trust and safeguard the provenance of their work.

Incorporate Provenance Metadata Before Publishing

- Use Tools That Support Content Credentials – Many creative applications can now attach C2PA Content Credentials at export. The CAI maintains an up-to-date list of supported tools and workflows.

- Set IPTC Fields – Make sure IPTC fields, including Digital Source Type, are filled in accurately. Most professional photo editing software can set them; the IPTC site has more details.

- Review Your Workflow – Some content management systems and CDNs strip metadata during upload or image optimization. Adjust their settings to preserve metadata, and use C2PA credentials, which are designed to be tamper-evident so that later changes can be detected.
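A quick way to audit a pipeline is to download an image as your CMS or CDN serves it and check whether its metadata segments survived. This Python sketch walks a JPEG’s marker segments and reports whether an XMP packet (APP1) and any APP11 segments are present; for JPEG files, the C2PA specification carries its JUMBF-wrapped manifests in APP11. An APP11 segment only hints that a credential may be there and says nothing about whether its signature is valid. The function names are hypothetical, and the parser does not handle rare standalone markers before the image data.

```python
import struct

# APP1 segments carrying XMP begin with this namespace identifier.
XMP_HEADER = b"http://ns.adobe.com/xap/1.0/\x00"


def list_app_segments(jpeg: bytes) -> list[tuple[int, bytes]]:
    """Return (marker, payload) for every APPn segment before the compressed image data."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    segments = []
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = jpeg[pos + 1]
        if marker == 0xFF:  # fill byte; the real marker follows
            pos += 1
            continue
        if marker == 0xDA:  # SOS: scan data starts here, metadata lives before it
            break
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])  # includes its own 2 bytes
        if length < 2:
            raise ValueError(f"bad segment length at offset {pos}")
        if 0xE0 <= marker <= 0xEF:  # APP0..APP15
            segments.append((marker, jpeg[pos + 4:pos + 2 + length]))
        pos += 2 + length
    return segments


def provenance_report(jpeg: bytes) -> dict[str, bool]:
    """Report which provenance-bearing segments are still present."""
    segs = list_app_segments(jpeg)
    return {
        "xmp": any(m == 0xE1 and p.startswith(XMP_HEADER) for m, p in segs),
        "app11_jumbf": any(m == 0xEB for m, _ in segs),
    }
```

Running `provenance_report` on the file you uploaded and on the copy your CDN serves shows whether a setting along the way is stripping metadata.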

Promote Clear Disclosure When Using AI

Transparency is essential for audience trust and brand integrity. Use descriptive captions and fill in any explicit AI-disclosure metadata fields your tools offer. Doing so makes it more likely that Google can label your content accurately, and many platforms are moving toward the same kind of disclosure.
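For AI-generated images, the explicit field is usually IPTC Digital Source Type. The sketch below builds a minimal XMP packet declaring `trainedAlgorithmicMedia` with Python’s standard library. Writing the packet into the image file is left to your editing software or a tool such as ExifTool, and the helper name `build_xmp` is hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {
    "x": "adobe:ns:meta/",
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "Iptc4xmpExt": "http://iptc.org/std/Iptc4xmpExt/2008-02-29/",
}
AI_GENERATED = "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"

# Make ElementTree emit the conventional XMP prefixes instead of ns0, ns1, ...
for prefix, uri in NS.items():
    ET.register_namespace(prefix, uri)


def build_xmp(source_type: str = AI_GENERATED) -> str:
    """Return an XMP packet body that declares the given Digital Source Type IRI."""
    meta = ET.Element(f"{{{NS['x']}}}xmpmeta")
    rdf = ET.SubElement(meta, f"{{{NS['rdf']}}}RDF")
    desc = ET.SubElement(rdf, f"{{{NS['rdf']}}}Description", {f"{{{NS['rdf']}}}about": ""})
    ET.SubElement(desc, f"{{{NS['Iptc4xmpExt']}}}DigitalSourceType").text = source_type
    return ET.tostring(meta, encoding="unicode")


print(build_xmp())
```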

Will Labels Impact Image SEO?

Google’s public guidance emphasizes helpful, original content. It does not prohibit AI-generated content as long as the content complies with spam policies and meets user needs (Google Search Guidance on AI-Generated Content). Google has not indicated that an AI-generated label by itself hurts image rankings; relevance, quality, descriptive alt text, structured data, and fast-loading pages remain the main factors in image SEO.

What This Means for Everyday Users

For the average user, this change means that some Search images will be clearly marked as AI-generated. Here are some quick tips for gaining further context:

- Look for the Label – If you see “AI-generated,” consider the image synthetic unless another reliable source confirms otherwise.

- Use “About this image” – Check when the image was first indexed, how other sites describe it, and whether trustworthy outlets have featured it.

- Perform a Reverse Image Search – This will help you find where else the image appears and identify earlier, unedited versions.

- Look for Visual Clues – Signs like inconsistent lighting or odd artifacts can point to AI-generated imagery, although the best AI models produce fewer of them with each generation.
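The reverse image search tip depends on matching near-duplicates. Google has not said how its own matching works, but perceptual hashing is one well-known family of techniques for finding resized or lightly edited copies. This average-hash (aHash) sketch assumes the image has already been shrunk to an 8×8 grayscale thumbnail, passed as nested lists; copies whose hashes differ in only a few bits are likely the same picture.

```python
def average_hash(gray: list[list[float]]) -> int:
    """aHash: one bit per thumbnail pixel, set when the pixel is brighter than the mean."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | int(p > mean)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


thumb = [[(r * 8 + c) * 3 for c in range(8)] for r in range(8)]  # stand-in 8x8 thumbnail
brighter = [[p + 20 for p in row] for row in thumb]
print(hamming(average_hash(thumb), average_hash(brighter)))  # 0
```

A uniformly brightened copy keeps every pixel on the same side of the mean, so its hash is identical, while an unrelated image typically differs in many bits.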

Limitations and Ongoing Challenges

- Metadata Can Be Stripped – Basic export settings or platform uploads can remove embedded metadata, including Content Credentials. This is why invisible watermarking, which lives in the pixels themselves, is an important complement to signed credentials.

- Watermarks Are Not Foolproof – SynthID and similar schemes are designed to survive common edits, but resisting deliberate removal attempts remains an active research problem.

- AI Editing Is a Spectrum – Drawing clear policy lines between acceptable retouching and misleading manipulation is nuanced and context-dependent.

- Potential for Errors – Any detection system can produce false positives and false negatives. Showing labels only when confidence is high reduces errors but cannot eliminate them.
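The error trade-off can be shown with a few made-up detector scores. Raising the threshold for showing a label removes some false positives but misses more AI images, and when scores overlap no threshold removes both kinds of error. The scores and the `error_counts` helper are invented for illustration.

```python
def error_counts(scored: list[tuple[float, bool]], threshold: float) -> tuple[int, int]:
    """Return (false_positives, false_negatives) when every image scoring >= threshold is labeled."""
    fp = sum(1 for s, is_ai in scored if s >= threshold and not is_ai)
    fn = sum(1 for s, is_ai in scored if s < threshold and is_ai)
    return fp, fn


# Made-up detector confidences: (score, image_is_actually_ai)
SCORES = [(0.97, True), (0.93, False), (0.91, True), (0.75, True),
          (0.60, False), (0.42, True), (0.30, False), (0.10, False)]

for t in (0.5, 0.9):
    print(t, error_counts(SCORES, t))
# 0.5 (2, 1)
# 0.9 (1, 2)
```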

The Broader Policy Context

This initiative aligns with a growing movement towards transparent AI content disclosure:

- U.S. Voluntary Commitments – In 2023, the White House announced voluntary commitments from major AI companies to develop watermarking and provenance tools (White House Fact Sheet on AI Voluntary Commitments).

- EU AI Act – The European Parliament approved the AI Act in 2024. It imposes transparency requirements on deepfakes and other AI-generated content, pushing platforms and creators toward clearer labeling (European Parliament – AI Act Overview and Approval).

- Industry Standards – C2PA and CAI standards are expanding, with camera manufacturers, creative tools, and platforms piloting Content Credentials that accompany images from creation to publication (C2PA; Content Authenticity Initiative).

Expect ongoing convergence in regulation, platform policy, and standards, making labeled and verifiable provenance a foundational aspect of digital media.

How to Prepare Now

For Brands and Publishers

- Define guidelines for how your teams will use AI imagery and require disclosure for synthetic or heavily modified visuals.

- Incorporate C2PA Content Credentials in your creative workflow, including for real photos, to validate authenticity and edits.

- Audit your CMS and CDN to ensure they preserve provenance metadata during uploads or optimization. If necessary, modify settings or transition to workflows that maintain it.

- Update alt text and captions to disclose AI assistance where applicable. Clarity fosters trust.

For Creatives and Agencies

- Use tools that automatically export IPTC and C2PA data, and keep your software current to get the latest provenance features.

- Retain originals and edit histories, as content credentials are more effective when there is a clear record of edits.

- Discuss disclosure expectations with clients upfront to mitigate surprises when labels appear in search results.
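Keeping an edit history is more useful when later tampering is detectable. This toy Python sketch chains SHA-256 hashes so that rewriting any past entry breaks verification. It is not C2PA: real Content Credentials are signed with certificates, whereas this unsigned chain could simply be rebuilt by anyone, so treat it only as an illustration of why hashed records make silent edits visible. The `append` and `verify` helpers are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, action: str, asset_sha256: str) -> str:
    """Hash an entry together with the previous entry's hash, linking them."""
    record = json.dumps({"prev": prev_hash, "action": action, "asset": asset_sha256}, sort_keys=True)
    return hashlib.sha256(record.encode()).hexdigest()


def append(log: list[dict], action: str, asset_bytes: bytes) -> None:
    """Record an edit step and the SHA-256 of the resulting file."""
    prev = log[-1]["hash"] if log else GENESIS
    asset = hashlib.sha256(asset_bytes).hexdigest()
    log.append({"action": action, "asset": asset, "hash": entry_hash(prev, action, asset)})


def verify(log: list[dict]) -> bool:
    """Recompute the chain; any rewritten entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["hash"] != entry_hash(prev, entry["action"], entry["asset"]):
            return False
        prev = entry["hash"]
    return True


history: list[dict] = []
append(history, "capture", b"raw sensor data")
append(history, "crop", b"cropped image bytes")
print(verify(history))  # True
```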

For Everyone

- Make it a habit to check labels and use “About this image” whenever an image seems unusual or curated.

- Share responsibly; a quick verification could prevent the spread of misinformation.

What Comes Next

Labeling AI-generated images in Search is not a complete fix, but combined with provenance standards, invisible watermarks, and context, it meaningfully strengthens efforts against visual misinformation and supports media literacy.

In the short term, expect coverage to expand as more publishers adopt Content Credentials and more generative tools include strong provenance signals by default. Camera manufacturers and platforms are also testing native credentials, which could make trustworthy provenance the standard rather than the exception. As this ecosystem matures, Search labels will become more common and more precise.

Looking ahead, similar practices are expected to extend beyond still images to video and audio, adapting standards and watermarking as necessary. The aim is straightforward: users should be able to understand how media was produced and make informed judgments regarding its trustworthiness.

FAQs

How Does Google Detect AI-Generated Images?

Primarily through provenance signals: embedded metadata using standards like IPTC and C2PA Content Credentials, along with invisible watermarks from supported tools. Google Search also offers context through “About this image” to help evaluate authenticity, even when embedded signals are absent.

Will Every AI Image Be Labeled?

No. Labels are dependent on strong signals. Images lacking metadata or coming from tools that don’t provide credentials or watermarks may not be labeled. This is why widespread adoption of open standards is essential.

Can Labels Be Incorrect?

Any detection system has the potential for inaccuracies. Google aims to display labels only when confidence levels are high, utilizing multiple signals to minimize false positives and negatives.

How Can I Ensure My AI Images Are Labeled Correctly?

Publish with intact IPTC and C2PA metadata and use tools that attach Content Credentials. Avoid workflows that strip metadata, and add clear captions and disclosures.

Does an AI Label Harm SEO?

There is no indication that an AI-generated label alone negatively affects rankings. Google’s guidance emphasizes helpfulness, originality, and compliance with spam policies for both text and images (Google Search Guidance on AI-Generated Content).

Conclusion

Google’s new labels for AI-generated images represent a significant move towards a more trustworthy visual web. While they don’t address misinformation in isolation, they facilitate a better understanding of what users see and how much trust to place in it. For creators and publishers, adopting provenance standards now is crucial for audience clarity and accurate representation in Search. For curious readers, these labels combined with “About this image” provide rapid, informative context. Ultimately, the trend is clear: transparency is becoming the future of digital media.

Sources

- Coalition for Content Provenance and Authenticity (C2PA)

- Content Authenticity Initiative (CAI)

- IPTC Photo Metadata Standard

- Google DeepMind SynthID Overview

- Google Search Guidance on AI-Generated Content

- Google Search Help – About This Image and Related Features

- White House Fact Sheet on AI Voluntary Commitments

- European Parliament – AI Act Overview and Approval

Thank You for Reading this Blog and See You Soon! 🙏 👋
