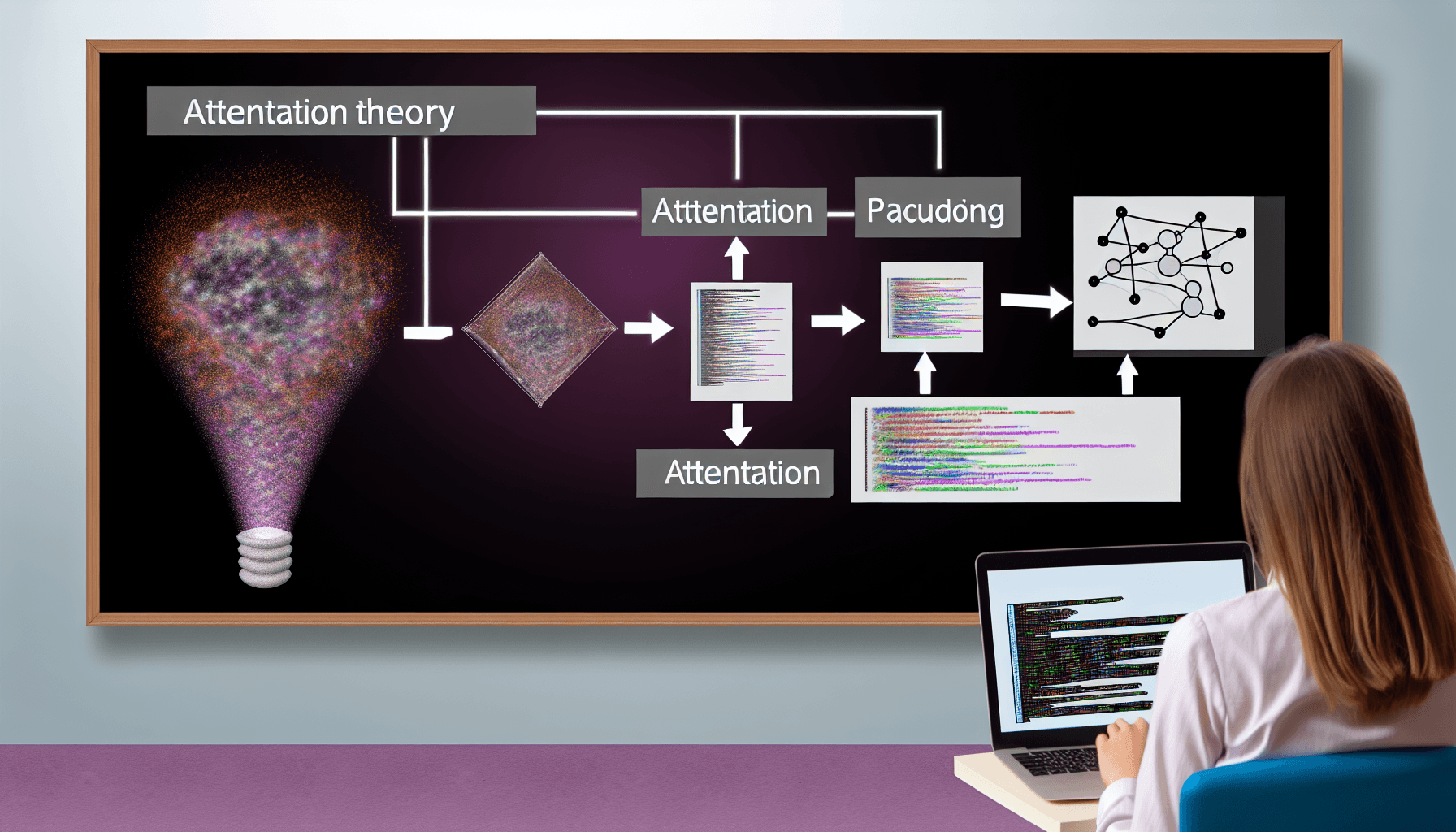

From Theory to Code: Implementing Attention Mechanisms

Introduction

Attention mechanisms have revolutionized the field of machine learning, especially in tasks that involve natural language processing and image recognition. This article covers the theory behind attention and then shows how to implement it in code, so that developers can improve their models’ performance and interpretability.

Understanding Attention Mechanisms

Before diving into the coding aspects, it’s crucial to understand what attention mechanisms are and why they are so transformative. In essence, attention helps models focus on specific parts of the input data that are more relevant for a given task, mimicking the human ability to focus with precision. This is particularly useful in handling sequences or large inputs where certain segments hold more significance than others.

The Basics of Attention

At its core, the attention mechanism involves three main components: queries, keys, and values. Typically, these components are derived from the input data. A compatibility function (commonly a dot product) scores each query against every key, a softmax turns those scores into weights that sum to one, and the output is the correspondingly weighted sum of the values.
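The query, key, and value flow described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production implementation: the function names `softmax` and `dot_product_attention`, the scaling by the square root of the key dimension, and the toy numbers are all assumptions chosen for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)


def dot_product_attention(queries, keys, values):
    """Return (output, weights) for scaled dot-product attention.

    queries: (n_queries, d), keys: (n_keys, d), values: (n_keys, d_v)
    """
    d = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d)  # compatibility of each query with each key
    weights = softmax(scores, axis=-1)      # each row sums to 1
    output = weights @ values               # weighted average of the values
    return output, weights


# Toy data (hypothetical): the single query points the same way as the second key
queries = np.array([[0.0, 1.0]])
keys = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
values = np.array([[10.0], [20.0], [30.0]])

output, weights = dot_product_attention(queries, keys, values)
print(weights.round(3))  # the second key receives the largest weight
print(output.round(3))
```

Because the weights form a probability distribution over the keys, the output is always a blend of the values, pulled toward the ones whose keys best match the query.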

Types of Attention Mechanisms

There are several types of attention mechanisms, each suitable for different scenarios:

- Self-attention: Often used in transformer models, this type of attention lets each element of the input sequence attend to every element of the same sequence, itself included, when computing its representation.

- Global attention: Attends over all positions of the input, ideal for tasks where any input element can affect the output.

- Local attention: Attends over only a subset of positions, typically a window around the current one, useful in models where local context is more pertinent.
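The difference between global and local attention can be illustrated with a mask over the score matrix. The sketch below assumes one simple formulation of local attention, a fixed window around each position; other formulations exist, and the helper names `attention_weights` and `local_mask` are hypothetical.

```python
import numpy as np


def attention_weights(scores, mask=None):
    """Softmax over the last axis; positions where mask is False get zero weight."""
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(scores)
    return exp / exp.sum(axis=-1, keepdims=True)


def local_mask(seq_len, window):
    """True where |i - j| <= window, i.e. position j is within reach of position i."""
    idx = np.arange(seq_len)
    return np.abs(idx[:, None] - idx[None, :]) <= window


rng = np.random.default_rng(0)
x = rng.normal(size=(6, 4))              # 6 positions, 4 features each
scores = x @ x.T / np.sqrt(x.shape[-1])  # self-attention: queries and keys from the same sequence

global_w = attention_weights(scores)                          # every position sees all 6
local_w = attention_weights(scores, local_mask(6, window=1))  # each sees itself and its neighbours

print((global_w > 0).sum(axis=-1))  # [6 6 6 6 6 6]
print((local_w > 0).sum(axis=-1))   # [2 3 3 3 3 2]
```

Both variants here are self-attention, since the scores come from a single sequence; the mask alone decides whether the attention is global or local.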

Implementing Attention in Python

Moving from theory to code, Python offers robust libraries such as TensorFlow and PyTorch that simplify the implementation of attention mechanisms. Below is a step-by-step guide on how to integrate attention into a neural network using Python.

Setting Up Your Environment

First, ensure that your Python environment is set up with the required libraries. Here’s a quick setup guide:

```shell
# Install TensorFlow
pip install tensorflow

# Or, if you prefer PyTorch:
pip install torch
```
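After installing, a quick check can confirm which of the two libraries is importable in the current environment. The sketch below uses only the standard library; the helper name `installed_backends` is hypothetical.

```python
from importlib import util


def installed_backends(candidates=("tensorflow", "torch")):
    """Return the names in `candidates` that can be imported in this environment."""
    return [name for name in candidates if util.find_spec(name) is not None]


if __name__ == "__main__":
    found = installed_backends()
    print("Available:", ", ".join(found) if found else "none, install one of the libraries first")
```

`find_spec` only locates the package without importing it, so the check stays fast even though both libraries are slow to load.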

Coding an Attention Layer

We will use TensorFlow to build a custom attention layer. This example involves creating a class that inherits from TensorFlow’s Layer class:

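```python
import tensorflow as tf


class AttentionLayer(tf.keras.layers.Layer):
    """Dot-product attention with a learned linear projection of the query."""

    def __init__(self, units, **kwargs):
        super().__init__(**kwargs)
        # Projection applied to the query; the last axis of the inputs must equal `units`
        self.W = self.add_weight(shape=(units, units), initializer='random_normal')
        self.b = self.add_weight(shape=(units,), initializer='zeros')

    def call(self, inputs):
        # inputs = [query, key, value], each shaped (batch, seq_len, units)
        q, k, v = inputs
        q = tf.matmul(q, self.W) + self.b
        # Similarity of every query position to every key position
        matmul_qk = tf.matmul(q, k, transpose_b=True)
        # Scale by sqrt(d_k) so the softmax does not saturate as units grows
        d_k = tf.cast(tf.shape(k)[-1], matmul_qk.dtype)
        attention_scores = tf.nn.softmax(matmul_qk / tf.math.sqrt(d_k), axis=-1)
        # Weighted sum of the values
        attention_output = tf.matmul(attention_scores, v)
        return attention_output
```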
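For comparison, Keras also ships a built-in dot-product attention layer, `tf.keras.layers.Attention`. The sketch below runs it as self-attention on random data; the tensor sizes are arbitrary assumptions. Note that the built-in layer expects its inputs in `[query, value, key]` order, unlike the `[query, key, value]` order used by the custom layer.

```python
import numpy as np
import tensorflow as tf

# Arbitrary sizes for illustration
batch, seq_len, units = 2, 5, 8
rng = np.random.default_rng(0)
x = tf.constant(rng.normal(size=(batch, seq_len, units)), dtype=tf.float32)

builtin = tf.keras.layers.Attention()  # dot-product scores, no learned scale by default
# Input order is [query, value, key]; here all three are the same sequence
output, scores = builtin([x, x, x], return_attention_scores=True)

print(output.shape)  # one output vector per query position
print(scores.shape)  # one weight per (query position, key position) pair
```

Checking a custom layer against the built-in one on the same input is a cheap way to catch shape or axis mistakes before training.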

Integrating Attention in a Model

Once the attention layer is coded, it can be integrated into a larger model. Here’s a simple example that places it between an LSTM encoder and an LSTM decoder:

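```python
# Illustrative sizes (assumptions); set these to match your data
dim = 32          # features per timestep
units = 64        # hidden size, also the attention width
num_classes = 10  # number of target classes

encoder_input = tf.keras.layers.Input(shape=(None, dim))
# return_sequences=True keeps one vector per timestep for attention to weigh
encoded = tf.keras.layers.LSTM(units, return_sequences=True)(encoder_input)
attention_out = AttentionLayer(units)([encoded, encoded, encoded])
decoder_hidden = tf.keras.layers.LSTM(units)(attention_out)
# Softmax head so the output matches the categorical cross-entropy loss
decoder_output = tf.keras.layers.Dense(num_classes, activation='softmax')(decoder_hidden)
model = tf.keras.Model(inputs=encoder_input, outputs=decoder_output)
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.summary()
```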

Best Practices and Tips

Implementing attention mechanisms can be complex. Here are some tips to help your models succeed:

- Understand the data: Know what parts of the data are worth focusing on to configure your attention models effectively.

- Experiment: Attention can be applied in different ways, so experiment with various types of attention to find what works best for your specific application.

- Monitor performance: Always monitor your model’s performance and be ready to tweak the attention layers as necessary.

Conclusion

Attention mechanisms are powerful tools that allow models to improve their focus on relevant data, enhancing both the efficiency and effectiveness of the learning process. By understanding these mechanisms theoretically and applying them practically in Python, developers can significantly boost their machine learning models’ performance.

With the guidance provided, you are well-equipped to begin exploring and implementing attention mechanisms in your own projects, pushing the boundaries of what your AI systems can achieve.
