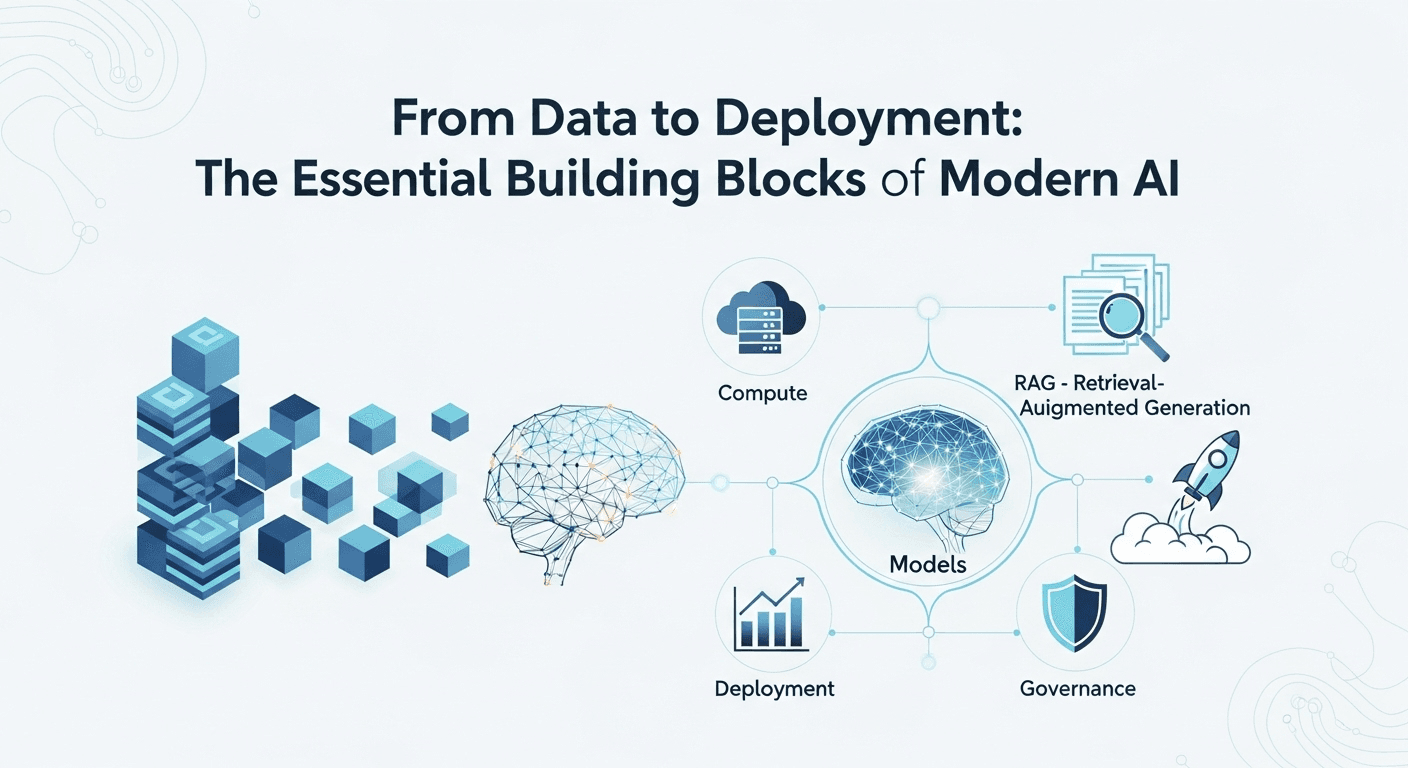

From Data to Deployment: The Essential Building Blocks of Modern AI

Artificial Intelligence has evolved from exciting lab demonstrations to essential products that millions rely on daily. Yet, for many teams, the technology behind AI can seem like a complicated black box. This guide simplifies the core components of AI, making the information accessible. Whether you’re working on your first prototype or scaling up production systems, this practical overview covers crucial elements, trade-offs, and effective practices.

What This Guide Covers

We will explore the essential layers that power modern AI systems, providing examples and links to credible resources. You’ll learn how data enters models, how these models are trained and optimized, how retrieval and vector searches enhance performance, and how evaluation, governance, and operational practices ensure reliability and safety.

The AI Stack at a Glance

- Data: Collection, labeling, quality assurance, and privacy considerations.

- Models: Classical machine learning, deep learning techniques, transformers, and multimodal systems.

- Compute and Frameworks: Utilizing GPUs/TPUs, strategies for distributed training, and inference optimization.

- Embeddings and Vector Search: Transforming text and images into vectors for efficient retrieval.

- Retrieval-Augmented Generation (RAG): Merging search capabilities with generation to ensure contextually relevant outputs.

- Agents and Tool Usage: Enabling models to plan, utilize tools, and perform actions.

- Evaluation and Reliability: Implementing benchmarks, human assessments, and rigorous testing methods.

- Deployment, MLOps, and LLMOps: Managing version control, monitoring, and cost efficiency.

- Security, Privacy, and Governance: Upholding responsible practices and regulatory compliance.

1) Data: The Foundation

High-quality data is the cornerstone of effective AI. The shift toward data-centric AI emphasizes that enhancing data quality can yield better performance than repeatedly adjusting model code (see Andrew Ng’s insights on data-centric approaches).

- Data Types: This includes text, tables, images, audio files, code, logs, and event streams.

- Data Sources: Public datasets, web-sourced data, enterprise knowledge bases, operational databases, and user-generated content are critical.

- Labeling Methods: A combination of expert labeling, crowdsourcing, weak supervision, and automated labeling can be utilized.

- Data Quality: Focus on deduplication, normalization, schema consistency, and ensuring comprehensive coverage of use cases.
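As a minimal sketch of the deduplication and normalization step, assuming records are plain strings and that exact matches after normalization count as duplicates (near-duplicate detection would need a different technique):

```python
import hashlib
import unicodedata


def normalize(text: str) -> str:
    """Unicode-normalize, lowercase, and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.lower().split())


def deduplicate(records: list[str]) -> list[str]:
    """Keep the first occurrence of each record, comparing normalized forms."""
    seen: set[str] = set()
    unique: list[str] = []
    for record in records:
        digest = hashlib.sha256(normalize(record).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record)  # the original, un-normalized text is kept
    return unique
```

Hashing the normalized form keeps memory use flat for long records, at the cost of only catching exact matches.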

Practical tips include:

- Establish a clear data contract, defining fields, formats, and refresh schedules to prevent unnoticed issues.

- Record real user interactions and outcomes (with consent) to inform iterative improvements and evaluations.

- Employ privacy-preserving techniques, such as differential privacy, to protect individuals while maintaining useful aggregate insights (see Google’s open-source differential privacy library).
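The data contract tip above can be sketched as a small validator. The field names and the `TICKET_CONTRACT` feed are hypothetical, chosen only for illustration; a real contract would also cover refresh schedules and value ranges.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class FieldSpec:
    name: str
    type_: type
    required: bool = True


# Hypothetical contract for a support-ticket feed.
TICKET_CONTRACT = [
    FieldSpec("ticket_id", str),
    FieldSpec("created_at", str),  # expected to be an ISO 8601 timestamp
    FieldSpec("body", str),
    FieldSpec("resolved", bool, required=False),
]


def validate(record: dict, contract: list[FieldSpec]) -> list[str]:
    """Return human-readable violations; an empty list means the record is valid."""
    errors = []
    for spec in contract:
        if spec.name not in record:
            if spec.required:
                errors.append(f"missing field: {spec.name}")
            continue
        if not isinstance(record[spec.name], spec.type_):
            errors.append(
                f"wrong type for {spec.name}: expected {spec.type_.__name__}"
            )
    # Format check for the one timestamp field in this illustrative contract.
    if isinstance(record.get("created_at"), str):
        try:
            datetime.fromisoformat(record["created_at"])
        except ValueError:
            errors.append("created_at is not an ISO 8601 timestamp")
    return errors
```

Running a check like this at ingestion time turns a silent schema change into a visible failure.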

2) Models: From Classic ML to Foundation Models

Modern AI blends established machine learning techniques with large neural networks, known as foundation models.

Classical ML

Techniques like logistic regression, decision trees, random forests, and gradient boosting are well-suited for structured data tasks. These models are interpretable, efficient, and often easier to deploy, particularly when working with tabular data.

Deep Learning and Transformers

Transformers form the backbone of current state-of-the-art systems across language, vision, and multimodal tasks. The original transformer architecture dropped recurrence in favor of self-attention, which models long-range dependencies effectively and parallelizes well (Vaswani et al., 2017). As model and data scales increased, researchers observed predictable power-law scaling behavior (Kaplan et al., 2020) and showed that, for a fixed compute budget, many large models were undertrained relative to their size (Hoffmann et al., 2022, the Chinchilla study). These insights help teams balance dataset size, parameter count, and training compute rather than simply increasing model size.
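To make the compute trade-off concrete, here is a rough sketch built on two widely quoted rules of thumb that are approximations rather than exact laws: training compute of about 6 FLOPs per parameter per token, and the Chinchilla finding of roughly 20 training tokens per parameter. The constants and the function name are assumptions for illustration.

```python
import math

TOKENS_PER_PARAM = 20.0       # rough Chinchilla heuristic (approximation)
FLOPS_PER_PARAM_TOKEN = 6.0   # C ~= 6 * N * D (approximation)


def compute_optimal_split(flops_budget: float) -> tuple[float, float]:
    """Return (params, tokens) that spend the budget at ~20 tokens per parameter.

    Solves C = 6 * N * D with D = 20 * N, so N = sqrt(C / 120).
    """
    params = math.sqrt(flops_budget / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    tokens = TOKENS_PER_PARAM * params
    return params, tokens
```

For a budget of about 5.88e23 FLOPs this gives roughly 7e10 parameters and 1.4e12 tokens, consistent with the 70B-parameter, 1.4T-token configuration reported for Chinchilla.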

Instruction Tuning, Alignment, and Adapters

- Instruction Tuning: Fine-tuning models on human-written demonstrations and preference feedback makes them substantially better at following instructions (Ouyang et al., 2022).

- Adapters and LoRA: Parameter-efficient methods like LoRA specialize a model by freezing its weights and training small low-rank update matrices instead of adjusting all parameters (Hu et al., 2022).

- Distillation: Knowledge distillation trains a smaller student model to imitate a larger teacher, improving speed and cost while retaining much of the teacher’s accuracy (Hinton et al., 2015).
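The parameter savings from LoRA follow from simple arithmetic. For a frozen weight matrix of shape d_out × d_in, LoRA learns two factors of shapes d_out × r and r × d_in, so it trains r · (d_out + d_in) values. The helper names below are illustrative, not part of any library.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Number of values in a dense weight matrix."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Values trained by LoRA: B is (d_out x rank), A is (rank x d_in)."""
    return rank * (d_out + d_in)


def trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Share of the dense matrix size that LoRA actually trains."""
    return lora_trainable_params(d_out, d_in, rank) / full_params(d_out, d_in)
```

For a 4096 × 4096 projection at rank 8, this is 65,536 trainable values against 16,777,216 in the full matrix, or about 0.4%.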

Multimodal Models

These models integrate text with images, audio, video, or structured data. Systems like Flamingo (Alayrac et al., 2022) have pioneered advancements in vision-language capabilities, while recent models successfully deliver image understanding and image-to-text functionality in real-world applications (refer to the OpenAI GPT-4 system card).

3) Embeddings and Vector Search

Embeddings map text, images, and other inputs to high-dimensional vectors in which semantically similar items lie close together. They support semantic search, clustering, recommendations, and RAG.

- Text Embeddings: Approaches like Sentence-BERT demonstrate how to create effective sentence-level vectors for tasks like search and clustering (Reimers and Gurevych, 2019). Current embedding models enhance multilingual and domain-specific capabilities.

- Vector Indexes: Libraries such as FAISS facilitate rapid nearest-neighbor searches across vast vector spaces (Johnson et al., 2017). Specialized vector databases combine storage, indexing, filtering, and hybrid search functionalities.

Best practices include keeping chunk sizes consistent, storing document metadata for efficient filtering, and re-embedding all content whenever the underlying embedding model changes, since vectors produced by different models are not comparable.
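At its core, vector search ranks stored vectors by similarity to a query vector. The sketch below is an exact brute-force search using cosine similarity, assuming a small in-memory index keyed by document ID. Libraries such as FAISS use specialized index structures to do this at scale, and this is not their API.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: list[float], index: dict[str, list[float]], k: int = 3
) -> list[tuple[str, float]]:
    """Score every stored vector against the query and return the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

Brute force costs one comparison per stored vector, which is fine for thousands of items and a useful baseline for checking an approximate index.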

4) Compute and Frameworks

Training and inference processes are heavily dependent on specialized hardware and software frameworks.

- Hardware: GPUs like NVIDIA A100/H100 and Google’s TPUs are designed for optimized tensor operations. Efficient interconnects and memory bandwidth are vital for scaling operations (see NVIDIA Hopper architecture and Google Cloud TPU v5e announcements).

- Frameworks: PyTorch and TensorFlow are the leading frameworks in deep learning, while JAX is favored in research and high-performance training contexts.

- Distributed Training: Data parallelism, model parallelism, and memory optimizations such as ZeRO (implemented in DeepSpeed) reduce per-device memory usage, enabling the training of larger models (Rajbhandari et al., 2020).

- Inference Optimization: Quantization lowers memory consumption and can speed up inference while retaining acceptable accuracy (Dettmers et al., 2022). Combined with low-rank adapters, it also makes fine-tuning large models feasible on modest hardware (Dettmers et al., 2023). Runtimes like ONNX Runtime and NVIDIA TensorRT facilitate portability and performance improvements.

Cost and efficiency depend on batch size, sequence length, numeric precision (FP32 vs. FP16 vs. INT8), and how well the hardware is utilized. Profiling your system early and often can yield significant cost savings.
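The effect of precision on memory is easy to estimate. This back-of-the-envelope sketch counts model weights only; activations, optimizer state, and key-value caches are deliberately left out, so real usage will be higher.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: int, precision: str) -> float:
    """Memory for the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30
```

A 7-billion-parameter model needs about 26 GiB for weights in FP32, about 13 GiB in FP16, and about 6.5 GiB in INT8.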

5) Retrieval-Augmented Generation (RAG)

RAG combines a generator model (like a large language model) with a retriever that fetches relevant documents. This combination enhances the accuracy of model outputs by grounding them in reliable sources, reducing hallucinations, and ensuring content remains current without re-training the base model (Lewis et al., 2020).

How a Typical RAG Pipeline Works

- Ingestion: Clean, segment, and embed documents, storing vectors and metadata in a vector index.

- Retrieval: For each query, retrieve the top-k relevant chunks using semantic search techniques and filters.

- Synthesis: Construct a prompt incorporating the retrieved context, then query the model for an output.

- Attribution: Cite sources and provide links to enhance trust and verification.
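The synthesis and attribution steps above can be sketched as a prompt builder that numbers each retrieved chunk so the model can cite it. The `Chunk` type, the instruction wording, and the character budget are assumptions for illustration; a production system would budget in tokens rather than characters.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # e.g. a file path or URL, used for attribution
    text: str


def build_prompt(question: str, chunks: list[Chunk], max_chars: int = 2000) -> str:
    """Number each chunk as [1], [2], ... and stop once the budget is used up."""
    context_lines = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        entry = f"[{i}] ({chunk.source}) {chunk.text}"
        if used + len(entry) > max_chars:
            break  # chunks are assumed to arrive in relevance order
        context_lines.append(entry)
        used += len(entry)
    context = "\n".join(context_lines)
    return (
        "Answer using only the context below. Cite sources as [n]. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Keeping the source next to each numbered chunk lets the application turn a cited `[n]` back into a link.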

Dense retrievers, like DPR (Karpukhin et al., 2020), and hybrid approaches that blend keyword and vector methods typically improve recall. When dealing with lengthy documents, consider hierarchical retrieval strategies or query decomposition. Continuous monitoring of retrieval quality is essential, in addition to assessing generation quality.

Common Pitfalls

- Chunk Sizing: Chunks that are too small lose context, while chunks that are too large introduce noise. Tune chunk sizes and overlaps against measured retrieval quality.

- Stale Indexes: If your knowledge base changes frequently, automate the re-indexing process and maintain versioned indexes linked to updates.

- Prompt Injection: Keep system instructions clearly separated from user input and retrieved content, and treat both as untrusted. Use allowlists for tools and actions to limit what an injected instruction can trigger.
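For the chunking pitfall in this list, a simple starting point is fixed-size word windows with overlap, so that content cut at one boundary still appears whole in the neighboring chunk. The default sizes are placeholders to tune, not recommendations.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words
    with the previous window."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks
```

Word windows ignore sentence and section boundaries, so splitting on headings or paragraphs first is often worth trying.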

6) Agents and Tool Use

Agents allow models to go beyond mere text generation, facilitating planning, tool utilization, data retrieval, and iterative problem-solving. Research such as ReAct has shown how integrating reasoning and tool usage can enhance performance on complex tasks (Yao et al., 2022). Toolformer demonstrates models learning when to employ external resources (Schick et al., 2023). In operational settings, many teams employ function-calling APIs to safely connect model outputs to trusted tools and data sources.

Designing effective agents requires:

- Keeping the toolset concise and explicit, with well-documented functions and constraints.

- Establishing limits on recursion and setting budgetary constraints (steps, tokens, time) to prevent runaway costs.

- Logging each action for accountability, including inputs, tool outputs, and intermediate reasoning summaries.

- Implementing safeguards for sensitive operations (e.g., requiring approvals for data deletion or financial transactions).
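The design points above can be combined in a small executor: an explicit tool allowlist, a step budget, an approval gate for sensitive tools, and a log entry for every action. The tools are hypothetical stand-ins, and the fixed `plan` list replaces the model's step-by-step decisions to keep the sketch self-contained.

```python
from typing import Callable

# Hypothetical tools; real ones would call external services.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_docs": lambda query: f"results for {query!r}",
    "delete_record": lambda record_id: f"deleted {record_id}",
}
SENSITIVE = {"delete_record"}  # tools that require explicit approval


def run_agent(
    plan: list[tuple[str, str]],
    max_steps: int = 5,
    approve: Callable[[str, str], bool] = lambda tool, arg: False,
) -> list[dict]:
    """Run (tool, argument) steps under a step budget and log each one.

    Rejected steps still count against the budget.
    """
    log = []
    for step, (tool, arg) in enumerate(plan):
        if step >= max_steps:
            log.append({"step": step, "status": "budget_exhausted"})
            break
        entry = {"step": step, "tool": tool, "input": arg}
        if tool not in TOOLS:
            entry["status"] = "rejected_unknown_tool"
        elif tool in SENSITIVE and not approve(tool, arg):
            entry["status"] = "needs_approval"
        else:
            entry["output"] = TOOLS[tool](arg)
            entry["status"] = "ok"
        log.append(entry)
    return log
```

The approval callback denies by default, so a sensitive action only runs when a caller opts in.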

7) Evaluation and Reliability

Large Language Models (LLMs) can be impressive, but you can only trust their reliability once you have measured it. Combine automated benchmarking with human assessments and targeted red teaming.

- General Benchmarks: MMLU evaluates a range of knowledge tasks (Hendrycks et al., 2020). HELM establishes a multidimensional evaluation framework, assessing aspects such as robustness, fairness, and efficiency (Liang et al., 2022). Interactive comparisons like the LMSYS Chatbot Arena provide scalable human preference signals.

- Domain-Specific Tests: Develop tailored test sets from genuine user inquiries and your established correct responses, including both standard and adversarial cases.

- Safety Assessments: Evaluate models for toxicity and bias using datasets like RealToxicityPrompts (Gehman et al., 2020) and applying fairness tests appropriate to each domain. Conduct red-team exercises to challenge model behavior under stress.

- Human-In-The-Loop: Engage experts in reviewing high-stakes outputs. Active learning can prioritize uncertain cases for further labeling.

Note: In RAG setups, monitor retrieval quality as well as generation quality. Even a top-performing model can give incorrect answers when retrieval returns the wrong context.
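Retrieval quality is commonly tracked with precision@k and recall@k over a labeled set of queries. A minimal sketch, assuming each query has a known set of relevant document IDs and the retrieved list contains no duplicates:

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k results that are relevant.

    Divides by the number of results actually returned, up to k.
    """
    top = retrieved[:k]
    if not top:
        return 0.0
    return sum(1 for doc_id in top if doc_id in relevant) / len(top)


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return sum(1 for doc_id in retrieved[:k] if doc_id in relevant) / len(relevant)
```

Tracking both matters: low recall means the answer was never in the context, while low precision means the model had to sift through noise.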

8) Deployment, MLOps, and LLMOps

Successfully releasing AI involves more than just getting a model to function on a laptop; it requires creating reliable workflows for versioning, testing, monitoring, and consistent improvement.

Core MLOps Building Blocks

- Versioning: Treat data, prompts, models, and indexes as crucial, versioned artifacts.

- CI/CD: Set up automated testing for prompts, retrieval structures, and model adapters before going live.

- Model Registry: Keep track of model lineage, evaluation results, and deployment specifics.

- Observability: Record inputs, outputs, latencies, retrieval performances, and user feedback to identify regressions early.

- Rollouts: Use canary releases and A/B testing to safely validate system changes.
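One common way to implement a canary release is to hash each user into a stable bucket, so the same user always sees the same variant. The sketch below assumes string user IDs. The `salt` value is a hypothetical release label, and changing it reshuffles the assignment.

```python
import hashlib


def in_canary(user_id: str, percent: float, salt: str = "release-2") -> bool:
    """Deterministically place about `percent` percent of users in the canary."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100  # e.g. 5.0 percent -> buckets 0..499
```

Deterministic bucketing keeps a user's experience consistent across requests and makes a reported regression reproducible.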

LLMOps Specifics

- Prompt Management: Organize prompts with structured variables and guidelines, treating them like code for testing.

- Index Maintenance: Schedule regular ingestion, re-embedding, and updates. Keep track of index freshness service-level agreements (SLAs).

- Cost Controls: Limit tokens per request, cache frequent results, and consider smaller local models for simpler tasks.

- Latency Budgets: Pre-compute embeddings, warm caches, and leverage batching and streaming techniques to enhance response times.
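Two of the controls above, a per-request token cap and a cache for frequent queries, can be sketched together. `call_model` is a hypothetical callable standing in for whatever model client you use, and the cache matches prompts only after simple case and whitespace normalization.

```python
from collections import OrderedDict
from typing import Callable, Optional


class ResponseCache:
    """Small least-recently-used cache keyed on a normalized prompt."""

    def __init__(self, max_entries: int = 1000) -> None:
        self._store: OrderedDict[str, str] = OrderedDict()
        self._max = max_entries

    @staticmethod
    def _key(prompt: str) -> str:
        return " ".join(prompt.lower().split())

    def get(self, prompt: str) -> Optional[str]:
        key = self._key(prompt)
        if key in self._store:
            self._store.move_to_end(key)  # mark as recently used
            return self._store[key]
        return None

    def put(self, prompt: str, response: str) -> None:
        key = self._key(prompt)
        self._store[key] = response
        self._store.move_to_end(key)
        if len(self._store) > self._max:
            self._store.popitem(last=False)  # evict the least recently used


def answer(
    prompt: str,
    cache: ResponseCache,
    call_model: Callable[[str, int], str],
    max_tokens: int = 256,
) -> str:
    """Serve from cache when possible; otherwise call the model with a token cap."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_model(prompt, max_tokens)
    cache.put(prompt, response)
    return response
```

Exact-match caching only helps with repeated questions, and cached answers should expire when the underlying index changes.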

For teams just beginning, a practical approach is to start by prototyping with a hosted model API, incorporate RAG for grounding the system in your data, and later evaluate the need for lightweight fine-tuning or adapters when gaps are identified.

9) Security, Privacy, and Governance

Implementing responsible AI practices is essential. The NIST AI Risk Management Framework provides a structured approach to identifying, evaluating, and managing risks associated with AI throughout its lifecycle. The recently adopted EU AI Act establishes varying obligations based on the associated risk, offering useful principles for system design even outside the EU.

- Data Governance: Minimize personal data collection, apply retention limits, and document user consent. Where feasible, prioritize de-identified or synthetic data.

- Model Documentation: Publish model cards and system cards detailing capabilities, limitations, and intended applications (Mitchell et al., 2019; OpenAI system cards).

- Access Controls: Restrict who can modify prompts, models, and indexes, and require reviews for sensitive adjustments.

- Security: Safeguard training and inference pipelines. Assess the potential for prompt injection threats, data exfiltration through RAG, and supply chain vulnerabilities.

- Monitoring and Incident Response: Keep track of anomalies, set alerts for drift, and establish protocols for rollback and user notifications.

10) Putting It All Together: Choosing the Right Stack

Your selections will depend on specific goals, constraints, and team capabilities. Here’s a helpful framework:

- Prototype Quickly: Utilize a hosted LLM API, implement basic retrieval, and conduct manual evaluations to accelerate learning.

- Operationalize: Introduce observability, caching strategies, prompt templates, index versioning, and comprehensive red-team tests. Document identified risks and mitigation strategies.

- Scale and Specialize: Explore adapters or fine-tuning based on feedback data. Optimize inference processes with quantization and batching. Assess build versus buy decisions for vector databases and orchestration solutions.

In many scenarios, RAG paired with a robust general-purpose model can deliver outstanding results. Consider fine-tuning when consistent domain styles, structured outputs, or specialized reasoning are necessary beyond what retrieval alone can provide.

Case-Style Examples

Support Assistant for a SaaS Product

- Data: Product documentation, release notes, resolved support tickets.

- Stack: Use embeddings and vector search with metadata filters; incorporate RAG for context grounding; develop prompt templates that cite sources; implement caching for frequent queries.

- Evaluation: Conduct weekly reviews of failure modes; perform checks for factual accuracy and coverage; establish guidelines for bias and tone.

- Governance: Implement guardrails to prevent personal data exposure; create an incident response playbook for sensitive scenarios.

Internal Analytics Copilot

- Data: Data warehouse tables with the necessary governance, role-based access, and documented data contracts.

- Stack: Utilize function calling to execute SQL through a secure query service; enable schema-aware retrieval; implement automatic logging and data lineage features.

- Evaluation: Create synthetic test prompts for each table; conduct human reviews for new query types; perform red-team tests to identify data exfiltration vulnerabilities.

- Security: Use least-privilege credentials; impose limits on result sizes; apply differential privacy techniques for sharing aggregate insights.
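For the differential privacy item above, the classic building block is the Laplace mechanism: add noise with scale sensitivity/epsilon to an aggregate before sharing it. The sketch below is for illustration only. It assumes a simple count with sensitivity 1, and it samples with Python's general-purpose random generator, so production systems should use a vetted differential privacy library instead.

```python
import random
from typing import Optional


def private_count(
    true_count: int, epsilon: float, rng: Optional[random.Random] = None
) -> float:
    """Return the count plus Laplace noise of scale 1/epsilon.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1. A smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    rate = epsilon / 1.0  # rate = epsilon / sensitivity
    # The difference of two i.i.d. exponential samples is Laplace-distributed.
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return true_count + noise
```

Each released statistic consumes privacy budget, so repeated queries over the same data need their epsilon values tracked and summed.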

Checklists You Can Use

Data Readiness

- Do we have well-represented, deduplicated, and accurately labeled data for our target tasks?

- Are privacy, consent, and data retention policies documented and actively enforced?

- Is a feedback loop in place to collect real outcomes and identify error cases?

Model and Retrieval

- Is the base model appropriately sized for our latency and cost requirements?

- Are prompts and adapters versioned and thoroughly tested?

- Are our indexes current, and do we track retrieval precision and recall?

Evaluation and Operations

- Do we have task-specific tests and human-in-the-loop evaluations in place?

- Are safety and bias checks integrated into our continuous integration processes?

- Do we diligently monitor for drift, failures, expenditures, and user feedback?

Conclusion

Modern AI isn’t just about relying on a single
