Day 2: Building an LLM from Scratch – The Resources We Need: “Attention Is All You Need”

First, it is great to see people reading my first post, “Day 1: How I Built My Own Large Language Model (LLM) AI from Scratch.” Before we start the actual LLM work, let’s look at the architecture behind LLMs and GPT.

After some research, I found several resources that can help us understand and build our own LLM. I should be honest with you: building a GPT involves a lot of mathematics, and we can’t build a GPT-scale model like those from OpenAI or Mistral AI. What we can do is build a nanoGPT, a small GPT-style model trained on a few datasets.

We previously talked about the book “Build a Large Language Model from Scratch.” In this post, I will collect the resources we need for the challenge of building our own LLM.

I found various types of resources:

- Papers: Research papers written and shared by researchers. Thank you all for sharing.

- Videos: Practical walkthroughs that help others understand and build a GPT.

- Source code: Many GitHub projects about AI, machine learning, deep learning, and more.

- Hugging Face: Many open-source datasets for training AI models.

I want to take a moment to express my deepest gratitude to all the incredible individuals who have generously shared their resources, knowledge, and expertise with us. Your contributions have been invaluable in enriching the content of this blog and providing our readers with high-quality, informative, and engaging material.

Whether you’ve shared articles, research, tools, or personal insights, your willingness to give and collaborate has not gone unnoticed. Your generosity helps foster a community of learning and growth, and for that, I am truly thankful.

A special thank you ❤️ to:

Your support and contributions inspire me and our readers every day. Together, we are building a valuable repository of knowledge that benefits everyone.

From the bottom of my heart, thank you for being an essential part of this journey. I look forward to continuing our collaboration and achieving greater heights together.

Papers: About AI and How to Build an LLM from Scratch

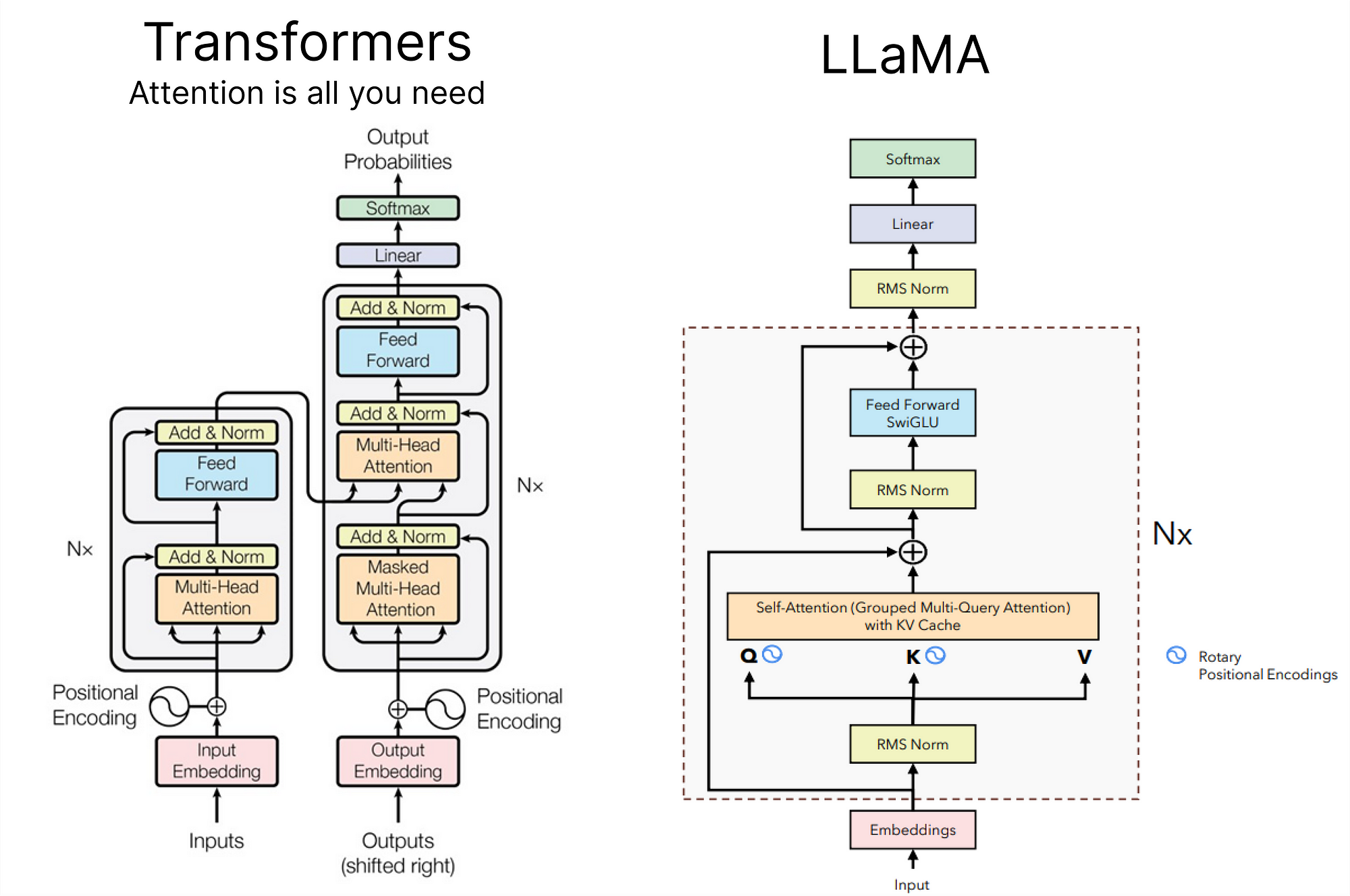

- Attention Is All You Need

- Language Models are Few-Shot Learners (OpenAI GPT-3)

- Sparks of Artificial General Intelligence: Early experiments with GPT-4
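Since the first paper on this list gives this post its title, here is a minimal sketch of its core operation, scaled dot-product attention: softmax(QKᵀ / √d_k) V. It uses plain Python lists so it runs without any dependencies. The function names and toy numbers are hypothetical, chosen only for illustration; a real model would use a tensor library and add batching, masking, and multiple heads.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, on lists of row vectors.

    Q: n_q rows of length d_k; K: n_k rows of length d_k; V: n_k rows of length d_v.
    Returns (outputs, weights): n_q rows of length d_v, and n_q x n_k attention weights.
    """
    d_k = len(K[0])
    scale = 1.0 / math.sqrt(d_k)
    outputs, all_weights = [], []
    for q in Q:
        # Similarity of this query with every key, scaled by 1/sqrt(d_k).
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in K]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example (hypothetical numbers): 2 queries, 3 keys/values, d_k = d_v = 2.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out, weights = scaled_dot_product_attention(Q, K, V)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1, so every output row is a weighted mix of the value vectors. The 1/√d_k factor keeps the dot products from growing with the key dimension, which the paper motivates as a way to stop the softmax from saturating into regions with very small gradients.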

Blogs: About AI and How to Build an LLM from Scratch

GitHub:

Videos:

- Attention Is All You Need

- Sparks of Artificial General Intelligence: Early Experiments with GPT-4

- Transformers Explained – Peter Bloem

- Attention Mechanism – Storrs.io

Conclusion

I will keep updating this post with new information and resources as I continue my journey in building an LLM from scratch. Your support and engagement are incredibly valuable to me, and I am committed to sharing every step of this exciting process with you.

Thank you for your subscription and for following along. Your enthusiasm and feedback motivate me to dig deeper and share more insights. As we explore the intricacies of large language models together, I encourage you to reach out with any questions, suggestions, or topics you’d like to see covered in future posts.

Building an LLM is a complex and rewarding challenge, and I am grateful for the community of learners, researchers, and enthusiasts who are joining me on this path. Stay tuned for more updates, tutorials, and discoveries. Together, we can push the boundaries of what’s possible in AI and machine learning.

Thank you once again for your support, and I look forward to our continued journey in the fascinating world of AI!

Thank you for reading this blog, and see you soon! 🙏 👋

