Beyond Big Tech: AIDNE’s Vision for Making AI Accessible to All

AI is transforming various fields, from search engines to healthcare. Unfortunately, the technology and resources that drive modern AI are mainly held by a select few large corporations. AIDNE is charting a different course: a decentralized, community-driven network aimed at democratizing access to AI, making it more affordable and accountable for everyone.

Why Concentration in AI Matters

In recent years, the cost and complexity of developing advanced AI systems have surged. Training cutting-edge models demands specialized hardware, vast datasets, extensive cloud infrastructure, and top-tier talent. As a result, power has shifted toward a small group of hyperscale cloud providers and leading foundation model developers.

Regulators and researchers are increasingly vigilant. The UK Competition and Markets Authority has cautioned that control over compute, data, and distribution could entrench incumbents’ market power and stifle competition if left unaddressed (CMA initial review; CMA update). The Stanford AI Index has pointed to rising training costs and the concentration of high-end compute as significant barriers for newcomers (Stanford AI Index 2024).

Policymakers are also looking into establishing safeguards. In the U.S., the Federal Trade Commission has launched a 6(b) inquiry into major AI partnerships and investments to gain insight into competitive dynamics and potential conflicts of interest (FTC 6(b) inquiry). Meanwhile, the EU has passed the AI Act, a comprehensive framework designed to manage risks and promote trustworthy AI while fostering innovation (EU AI Act). These developments emphasize a fundamental truth: how AI is constructed and governed will determine who reaps its benefits.

Introducing AIDNE: A Community-Centric AI Network

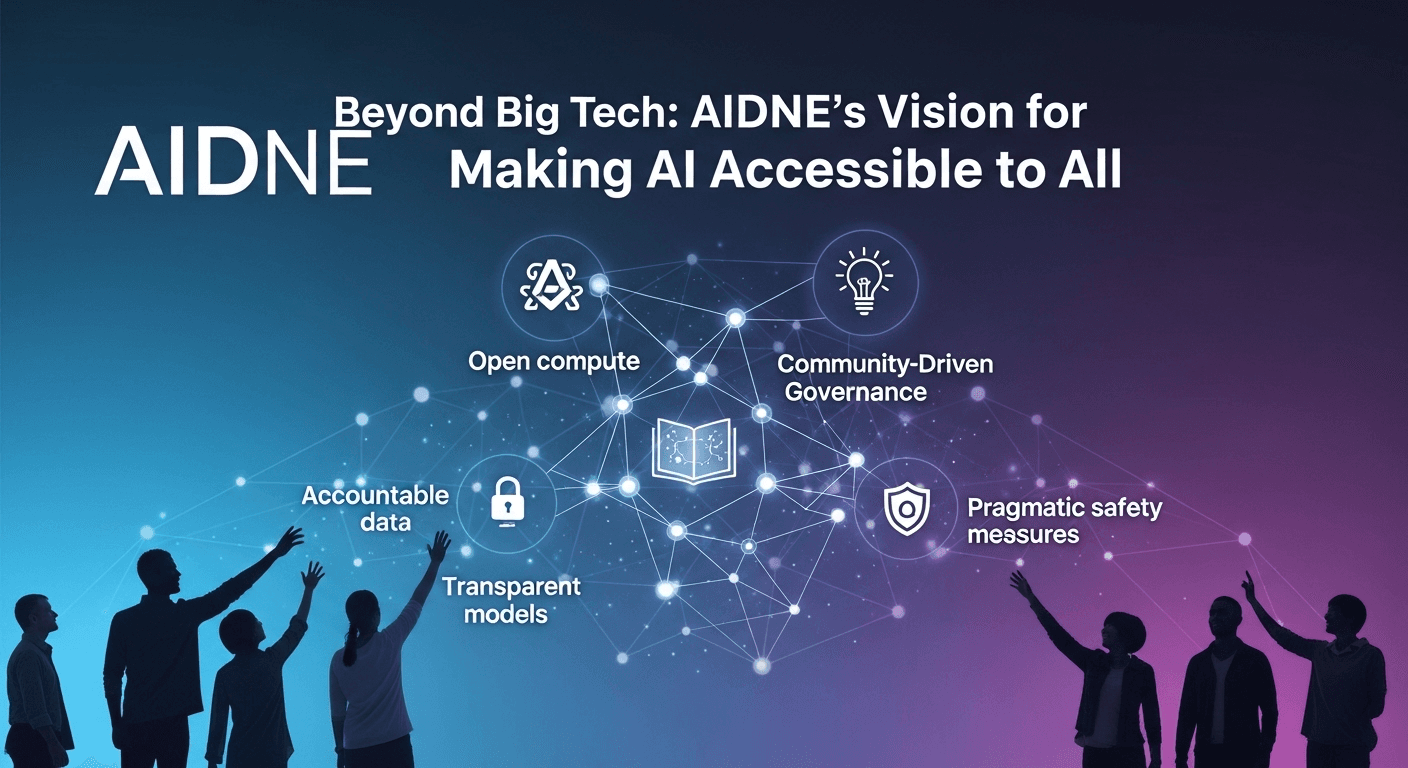

AIDNE is proposing a decentralized network to democratize key components of the AI landscape. Instead of relying on a few centralized providers, AIDNE seeks to distribute work and rewards among a wide array of participants, including compute node operators, data contributors, model creators, auditors, and everyday users. AIDNE’s vision aims to increase accessibility by effectively matching compute supply and demand, curating open and accountable datasets, and promoting transparent model development, all guided by governance mechanisms that align incentives through a native token.

While the specific technical and economic frameworks will evolve as the project progresses, the overarching goals remain clear: reduce barriers in developing and utilizing advanced AI, encourage broader participation, and integrate accountability into the system. Below, you’ll find a closer look at the key components envisioned by AIDNE and how they might integrate.

Components AIDNE Aims to Develop

1) Decentralized Compute Marketplace

The cornerstone of AIDNE is a marketplace connecting those who need computational power with those who can provide it. Independent node operators could contribute GPUs and CPUs for training, fine-tuning, or serving AI models, earning network rewards in return. By tapping underutilized hardware worldwide, this model could lower costs and expand access.

- Users can post jobs specifying requirements such as model type, memory needs, training hours, and privacy constraints.

- Node operators can bid for these jobs, staking tokens to demonstrate their reliability.

- The protocol verifies job completion and distributes rewards based on performance and uptime.
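To make the posting-and-bidding flow above more concrete, here is a minimal sketch of how a job’s requirements might filter and rank operator bids. AIDNE has not published a job or bid format, so the `Job` and `Bid` classes, their fields, and the price-then-uptime ranking rule are all hypothetical illustrations.

```python
from dataclasses import dataclass


# Hypothetical schema: AIDNE has not published a job or bid format.
@dataclass
class Job:
    job_id: str
    min_gpu_memory_gb: int      # GPU memory the job needs
    max_price_per_hour: float   # user's budget ceiling
    min_stake: float            # tokens an operator must have staked to bid


@dataclass
class Bid:
    operator_id: str
    gpu_memory_gb: int
    price_per_hour: float
    stake: float
    uptime: float               # historical fraction of time online, 0.0 to 1.0


def eligible_bids(job: Job, bids: list[Bid]) -> list[Bid]:
    """Keep bids that satisfy the job's hardware, budget, and stake requirements."""
    return [
        b for b in bids
        if b.gpu_memory_gb >= job.min_gpu_memory_gb
        and b.price_per_hour <= job.max_price_per_hour
        and b.stake >= job.min_stake
    ]


def rank_bids(job: Job, bids: list[Bid]) -> list[Bid]:
    """Cheapest eligible bids first; ties go to the operator with higher uptime."""
    return sorted(eligible_bids(job, bids), key=lambda b: (b.price_per_hour, -b.uptime))


job = Job("ft-001", min_gpu_memory_gb=24, max_price_per_hour=2.0, min_stake=100.0)
bids = [
    Bid("node-a", 24, 1.50, 150.0, 0.990),
    Bid("node-b", 16, 0.90, 500.0, 0.999),  # rejected: not enough GPU memory
    Bid("node-c", 48, 1.50, 200.0, 0.995),
    Bid("node-d", 80, 1.20, 50.0, 0.980),   # rejected: stake below the minimum
]
print([b.operator_id for b in rank_bids(job, bids)])  # ['node-c', 'node-a']
```

Ranking by price with uptime as a tiebreaker is only one plausible policy; a real marketplace would likely also weigh reputation, latency, and privacy constraints.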

Academic and industry research, including discussions of distributed training and inference (On the Opportunities and Risks of Foundation Models), suggests that distributed computing networks can be viable. AIDNE seeks to package these ideas into an accessible, user-friendly network.

2) Open and Accountable Data Pipelines

High-quality data can matter more than sheer model size: a smaller model trained on well-curated data often outperforms a larger one trained on noisier data. Yet accessing such data can be costly and cumbersome. AIDNE envisions a curated data commons where contributors can submit datasets with clear licensing, provenance, and thorough documentation. Imagine a registry cataloging sources, collection methods, consent status, and known limitations, with rewards linked to dataset utility and compliance.

- Datasets will be registered with metadata detailing origin, licensing, known biases, and intended uses.

- Contributors can earn rewards as their datasets are utilized in training or fine-tuning processes.

- Independent reviewers will audit samples for quality and compliance, earning bounties for the issues they identify.

This approach aligns with practices researchers advocate for responsible dataset development, such as clear documentation and datasheets (Datasheets for Datasets). AIDNE’s twist is to tie rewards to quality and compliance rather than sheer volume.
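A registry entry along these lines might look like the following minimal sketch, loosely modeled on the questions a datasheet asks. The `DatasetRecord` fields and the `missing_fields` check are hypothetical, not an AIDNE schema; they simply show how incomplete documentation could be flagged before a dataset starts earning rewards.

```python
from dataclasses import dataclass, field


# Hypothetical registry entry; field names are illustrative, not an AIDNE schema.
@dataclass
class DatasetRecord:
    name: str
    origin: str                  # who collected the data, where, and how
    license: str                 # e.g. an SPDX identifier such as "CC-BY-4.0"
    consent_documented: bool     # consent status recorded where required
    known_biases: list[str] = field(default_factory=list)
    intended_uses: list[str] = field(default_factory=list)


def missing_fields(record: DatasetRecord) -> list[str]:
    """List documentation gaps a reviewer would flag before accepting the record."""
    gaps = []
    if not record.origin.strip():
        gaps.append("origin")
    if not record.license.strip():
        gaps.append("license")
    if not record.consent_documented:
        gaps.append("consent")
    if not record.known_biases:
        gaps.append("known_biases")
    if not record.intended_uses:
        gaps.append("intended_uses")
    return gaps


record = DatasetRecord(
    name="regional-news-corpus",
    origin="Partner regional publishers, 2019-2023",
    license="CC-BY-4.0",
    consent_documented=True,
    intended_uses=["summarization research"],
)
print(missing_fields(record))  # ['known_biases']
```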

3) Transparent Model Development

AIDNE emphasizes transparent model development: open-source baselines, reproducible training procedures, and clear licensing for both weights and outputs where feasible. The network plans to support a range of model sizes and domains, from lightweight tools to expert models, with governance ensuring safety and acceptable use.

- Model creators will publish training configurations, evaluation metrics, and intended use statements.

- Community testing and red-teaming will help surface failure modes early.

- Versioned releases and leaderboards will assist users in selecting reliable models.
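One way versioned releases, documentation, and leaderboards could fit together is sketched below: only releases that publish both a training configuration and an intended-use statement get ranked. The `ModelRelease` fields, the single `eval_score` metric, and the exclusion rule are assumptions for illustration.

```python
from dataclasses import dataclass


# Hypothetical release record; the fields and the single metric are assumptions.
@dataclass
class ModelRelease:
    name: str
    version: str
    eval_score: float          # score on a shared benchmark, higher is better
    has_training_config: bool  # training configuration published
    has_use_statement: bool    # intended-use statement published


def leaderboard(releases: list[ModelRelease]) -> list[str]:
    """Rank only fully documented releases, best score first."""
    documented = [r for r in releases if r.has_training_config and r.has_use_statement]
    ranked = sorted(documented, key=lambda r: r.eval_score, reverse=True)
    return [f"{r.name}@{r.version}" for r in ranked]


releases = [
    ModelRelease("tiny-coder", "1.2.0", 0.61, True, True),
    ModelRelease("tiny-coder", "1.3.0", 0.66, True, True),
    ModelRelease("mystery-model", "0.1.0", 0.80, False, True),  # no config: excluded
]
print(leaderboard(releases))  # ['tiny-coder@1.3.0', 'tiny-coder@1.2.0']
```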

Open models have spurred innovation by allowing developers to adapt and refine systems quickly (the Stanford AI Index 2024 highlights the surge in open-source contributions). AIDNE hopes to channel this momentum into a cohesive ecosystem with clear incentives.

4) Tokenized Incentives and Governance

In AIDNE’s framework, a native token unites network participants:

- Compute providers earn tokens for reliable and verified work.

- Data contributors and auditors receive rewards proportionate to the value and integrity of their input.

- Model builders and maintainers are encouraged to release models that are transparent and well-documented.

- Token holders will participate in governance, proposing and voting on decisions regarding the protocol, safety policies, and resource allocations.
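How rewards will be divided is not yet specified. As a hypothetical example, a reward pool could be split in proportion to each provider’s verified work units, using integer token amounts so nothing is lost to floating-point rounding. The sketch below hands out leftover units with the largest-remainder method; the function name and the notion of a "work unit" are assumptions.

```python
def split_rewards(pool: int, verified_units: dict[str, int]) -> dict[str, int]:
    """Split an integer token pool in proportion to each provider's verified work units.

    Amounts are in the token's smallest indivisible unit. Integer division leaves a
    small remainder, which goes to the providers whose exact shares were rounded down
    the most (the largest-remainder method).
    """
    total = sum(verified_units.values())
    if total == 0:
        return {p: 0 for p in verified_units}
    shares = {p: pool * u // total for p, u in verified_units.items()}
    leftover = pool - sum(shares.values())
    # Rank providers by the fractional part that integer division discarded;
    # the stable sort keeps input order among equal remainders.
    by_remainder = sorted(
        verified_units, key=lambda p: (pool * verified_units[p]) % total, reverse=True
    )
    for p in by_remainder[:leftover]:
        shares[p] += 1
    return shares


print(split_rewards(100, {"node-a": 1, "node-b": 1, "node-c": 1}))
# {'node-a': 34, 'node-b': 33, 'node-c': 33}
```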

Effective token design is crucial. Poorly designed incentives could promote low-quality contributions or concentrate power among a few dominant holders. AIDNE’s task will be to find a suitable balance that ensures sufficient rewards to grow the network while implementing safeguards against capture and defining clear rules to manage conflicts of interest.
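Quadratic voting, which this article lists among possible governance safeguards, illustrates one way to limit dominant holders: spending c credits on an option buys the square root of c votes, so casting n votes costs n² credits. This minimal tally is a hypothetical sketch, not AIDNE’s governance design; the credit budget and data layout are assumptions.

```python
import math


def quadratic_tally(credit_spend: dict[str, dict[str, int]], budget: int) -> dict[str, float]:
    """Tally a quadratic vote.

    credit_spend maps each voter to {option: credits spent}. Spending c credits on
    an option buys sqrt(c) votes, so casting n votes costs n**2 credits.
    """
    totals: dict[str, float] = {}
    for voter, allocations in credit_spend.items():
        if sum(allocations.values()) > budget:
            raise ValueError(f"{voter} spent more than the {budget}-credit budget")
        for option, credits in allocations.items():
            totals[option] = totals.get(option, 0.0) + math.sqrt(credits)
    return totals


# One large holder spends 100 credits; ten smaller members spend 4 credits each.
spend = {"whale": {"A": 100}}
spend.update({f"member-{i}": {"B": 4} for i in range(10)})
print(quadratic_tally(spend, budget=100))  # {'A': 10.0, 'B': 20.0}
```

Here the large holder gets 10 votes while the ten members get 20 combined, whereas linear token voting would give 100 versus 40. The scheme assumes each participant has a single identity: a holder who splits credits across many wallets defeats it, so it would need to be paired with some form of Sybil resistance.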

5) Privacy and Safety Integration

Democratizing AI should not compromise safety or privacy. AIDNE plans to incorporate privacy-preserving techniques, such as federated learning, differential privacy in data pipelines, and stringent access controls. The network may also explore cryptographic methods for job verification, allowing nodes to prove task completion without revealing sensitive information.
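Federated learning keeps raw data on each participant’s machine and shares only model updates. The sketch below shows the aggregation step of federated averaging (FedAvg), weighting each client’s parameters by its local dataset size. Plain Python lists stand in for model tensors, and nothing here reflects a published AIDNE interface.

```python
def federated_average(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """FedAvg aggregation: average client parameters weighted by local dataset size.

    Only parameter vectors are shared with the aggregator; raw training data stays local.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one dataset size per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total dataset size must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same parameter shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Client 2 holds three times as much data, so its parameters count three times as much.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))  # [2.5, 3.5]
```

Averaging alone does not guarantee privacy, since model updates can still leak information about training data; in practice it is often combined with differential privacy or secure aggregation.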

These strategies align with industry guidance on responsible AI, including considerations for auditability, documentation, and compliance with regulations like the EU AI Act for high-risk applications (EU AI Act).

6) Marketplace for AI Services

Alongside raw computing resources, AIDNE envisions a marketplace where users can discover and pay for AI services such as text generation, code assistance, image processing, and domain-specific agents. With transparent pricing and usage-based billing, developers can reach a global audience without the burden of creating their own hosting or billing infrastructure. Users benefit from increased choice in a competitive marketplace.
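Usage-based billing of the kind described above can be sketched as follows. The service names and per-1,000-token prices are made-up placeholders, not AIDNE pricing; `Decimal` keeps the currency arithmetic exact.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical price list in currency units per 1,000 tokens; not real AIDNE pricing.
PRICE_PER_1K_TOKENS = {
    "text-generation": Decimal("0.0020"),
    "code-assist": Decimal("0.0030"),
}
CENT = Decimal("0.01")


def invoice(usage: list[tuple[str, int]]) -> dict[str, Decimal]:
    """Aggregate (service, tokens) usage records into rounded line items plus a total."""
    raw: dict[str, Decimal] = {}
    for service, tokens in usage:
        cost = PRICE_PER_1K_TOKENS[service] * tokens / 1000
        raw[service] = raw.get(service, Decimal("0")) + cost
    lines = {s: c.quantize(CENT, rounding=ROUND_HALF_UP) for s, c in raw.items()}
    # Sum the rounded lines so the total matches what the customer sees.
    lines["total"] = sum(lines.values(), Decimal("0"))
    return lines


usage = [("text-generation", 1_200_000), ("code-assist", 300_000), ("text-generation", 800_000)]
for item, amount in invoice(usage).items():
    print(item, amount)
# text-generation 4.00
# code-assist 0.90
# total 4.90
```

Rounding each line item before summing keeps the invoice total consistent with the lines a customer actually sees.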

7) Education, Documentation, and Community Building

Open ecosystems flourish when documentation is robust and onboarding is intuitive. AIDNE’s long-term success will depend on creating clear guides for node operators, data contributors, and model creators, as well as resources for community support, hackathons, and grants. The aim is to engage individuals who may not have joined a centralized provider’s partner program but can create significant value within an open network.

A Day in the AIDNE Network

To illustrate this vision, let’s consider a few typical scenarios.

For a Developer Fine-Tuning a Model

- The developer selects a base open model and searches the data commons for a licensed, domain-specific dataset.

- They post a fine-tuning job to the compute marketplace, detailing GPU needs, expected training steps, and budget limits.

- Several node operators bid; the developer chooses a mix to mitigate risk.

- After training, they review evaluation results, publish a model card, and list the model in the marketplace.

- As users adopt the model, both the developer and dataset contributors receive ongoing rewards.

For a Small Business Deploying an AI Assistant

- The business browses the marketplace for a ready-made assistant tailored to their sector.

- They set usage limits and configure privacy settings to ensure sensitive data remains secure.

- They pay only for what they use, receive transparent invoices, and can switch providers or models when necessary.

- Customer feedback contributes to model evaluation, fostering continuous improvement.

For a Data Contributor Creating a High-Quality Dataset

- The contributor assembles a dataset with clear licensing and consent where required, plus documentation on collection methods and known biases.

- They register the dataset in the commons with accompanying metadata and sample audits.

- As models utilize the dataset, the contributor earns rewards, while independent auditors can earn bounties for identifying issues or enhancing documentation.

The Potential of a Decentralized Approach

While decentralization is not a panacea, its thoughtful application could significantly reshape the AI landscape in three key areas:

- Cost and Accessibility: By aggregating numerous small providers, the network can offer more compute resources to builders and make inference more affordable for smaller teams. This is increasingly significant as demand for AI-capable chips surpasses centralized supply (Stanford AI Index 2024).

- Resilience: A distributed network with multiple providers can reduce single points of failure, vendor lock-in, and pricing volatility. Competition among providers can help keep performance high and costs low.

- Alignment: When the individuals who provide compute, data, and models also benefit from the rewards and governance, they are motivated to enhance quality, safety, and transparency over time.

Challenges, Considerations, and Questions Ahead

Any serious effort to democratize AI must confront challenging issues. AIDNE’s ambitious vision will depend on sound solutions to practical questions:

- Trust and Verification: How will the network ensure that remote nodes perform their tasks accurately and avoid tampering with data or model weights? Solutions like cryptographic proofs, redundancy, and reputation systems could help, but each adds complexity.

- Quality Control: Open contribution frameworks can attract low-quality or malicious inputs. Transparent evaluations, audits, and staking-and-slashing mechanisms could help, but balancing these incentives is difficult.

- Privacy and Compliance: Managing sensitive data and adhering to regulatory guidelines necessitates robust privacy measures and clear policies that align with regulations such as the EU AI Act (EU AI Act) and industry-specific rules.

- Energy Efficiency and Sustainability: Distributed computing can consume substantial energy. Prioritizing efficient hardware, carbon-aware scheduling, and renewable energy sources will be vital to minimizing environmental impact.

- Governance and Control: Token-based governance can still be susceptible to dominance by early adopters. Mechanisms like delegate systems, quadratic voting, and participation thresholds may be necessary to prevent oligarchical outcomes.

- Security: Open networks can attract malicious actors; thus, secure execution, timely patching, and bug bounty programs are essential.

- Economic Viability: The reward system must be sustainable without fostering extractive practices or encouraging speculative behavior.
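As a rough illustration of the redundancy approach mentioned under Trust and Verification, the same job could be sent to several nodes, their outputs compared by hash, and stake slashed from any node that disagrees with a strict majority. The function names and the 10% slash rate are hypothetical, not a published AIDNE protocol.

```python
import hashlib
from collections import Counter
from typing import Optional


def output_digest(output: bytes) -> str:
    """Fingerprint a job result so replicas can be compared cheaply."""
    return hashlib.sha256(output).hexdigest()


def verify_by_redundancy(
    results: dict[str, bytes],
    stakes: dict[str, float],
    slash_fraction: float = 0.1,  # hypothetical penalty rate
) -> tuple[Optional[str], dict[str, float]]:
    """Accept the strict-majority result and slash nodes that disagreed with it.

    Returns (accepted digest, or None if no strict majority exists, updated stakes).
    The input stakes dict is not modified.
    """
    if not results:
        raise ValueError("no results to compare")
    digests = {node: output_digest(out) for node, out in results.items()}
    winner, votes = Counter(digests.values()).most_common(1)[0]
    updated = dict(stakes)
    if votes * 2 <= len(digests):
        return None, updated  # no strict majority: escalate rather than punish anyone
    for node, digest in digests.items():
        if digest != winner:
            updated[node] = stakes[node] * (1 - slash_fraction)
    return winner, updated


results = {"node-a": b"weights-v1", "node-b": b"weights-v1", "node-c": b"tampered"}
stakes = {"node-a": 100.0, "node-b": 100.0, "node-c": 100.0}
accepted, new_stakes = verify_by_redundancy(results, stakes)
print(accepted == output_digest(b"weights-v1"), new_stakes)
```

Exact hash comparison only works for deterministic jobs. GPU training and inference are frequently nondeterministic, so a real protocol would likely need tolerance-based comparisons or deterministic execution modes.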

These challenges appear solvable, but addressing them will require careful engineering, community standards, and transparent iteration. Regulators are also watching this landscape closely, from competition inquiries to safety frameworks, which underscores the need for responsible design (FTC AI inquiry; CMA update).

Indicators of Success

What would effective democratization look like in practice? Some concrete metrics could include:

- Growth of Builders: An increase in independent developers and small teams training or deploying models without reliance on a single provider.

- Cost Reduction: Notable decreases in the cost per million tokens generated, or processed during training, for typical workloads.

- Open Contributions: An increasing share of network activity stemming from open datasets and open-source models with thorough documentation.

- Diverse Governance: Broader geographical and stakeholder representation in crucial governance decisions.

- Safer Deployments: Evidence showing that privacy- and safety-preserving features are utilized by default and verified through audits.

Implications for Builders and Businesses

If AIDNE’s mission is realized, it could transform how corporations and creators leverage AI:

- Startups could train and deploy models economically, avoiding dependence on any single provider and optimizing cost-performance ratios.

- Enterprises might source compliant datasets with clear origins and audit trails.

- Researchers could reproduce results more effectively with shared configurations, data cards, and traceable tasks.

- Communities could pool their resources to develop models tailored to local languages and specific needs that often go underserved in centralized markets.

Engaging with AIDNE

AIDNE is fundamentally a community-driven initiative. If this vision resonates with you, there are numerous ways to engage in networks like this:

- Run a node and contribute computational resources to support training and inference.

- Curate, document, and maintain datasets that are high-quality and have clear licenses.

- Build, fine-tune, and evaluate models, and publish reproducible results.

- Assist with audits, safety assessments, and red-teaming exercises.

- Take part in governance discussions and propose improvements to incentives, safety measures, and user experience.

And a vital reminder: if a token is involved in the network, approach it like any other emerging technology. Conduct your own research, understand the risks, and comply with local regulations. Nothing in this article should be interpreted as financial advice.

Conclusion: Towards Open Networks in AI

AI is too vital to be developed solely by a handful of entities. While concentration can offer substantial scale, it also poses the risk of limiting who can innovate and benefit from advancements. AIDNE’s foundational idea is straightforward: unify a global community to contribute compute, data, and expertise, rewarding them for developing an open, trustworthy AI ecosystem.

While much work remains from vision to execution, initiatives like AIDNE could make AI more affordable, auditable, and accessible, shifting the industry towards a more inclusive future. This is a worthy goal to pursue.

Frequently Asked Questions (FAQs)

What does democratizing AI mean?

It means breaking down barriers so a broader range of people can develop, use, and benefit from AI. This includes ensuring affordable computational resources, providing open and well-documented datasets, and establishing transparent governance.

How is AIDNE different from centralized AI providers?

Centralized providers own the infrastructure and dictate the rules. AIDNE, in contrast, intends to distribute computing power, data contributions, and decision-making across a community, using token incentives to align interests and transparent governance for policy and safety matters.

Is decentralized computing reliable for real workloads?

Potentially, yes, for many applications, provided the network can verify that jobs were executed correctly, maintain uptime, and manage performance variability. Strategies like redundancy, reputation systems, and secure enclaves can improve reliability, though they add complexity.
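Reputation systems can take many forms. One simple, hypothetical option is an exponential moving average of job outcomes, so recent behavior outweighs old history; the starting score of 0.5 and the smoothing factor `alpha` below are illustrative assumptions.

```python
def update_reputation(score: float, job_succeeded: bool, alpha: float = 0.1) -> float:
    """Exponential moving average of job outcomes.

    The score stays in [0, 1] if it starts there; alpha controls how quickly
    older outcomes fade relative to the latest one.
    """
    outcome = 1.0 if job_succeeded else 0.0
    return (1 - alpha) * score + alpha * outcome


score = 0.5  # hypothetical starting score for a new node
for ok in [True] * 20 + [False] * 3:
    score = update_reputation(score, ok)
print(round(score, 3))
```

In this run, 20 successes lift the score to about 0.94, and three consecutive failures drop it to roughly 0.68, so a long good record does not shield a node that starts misbehaving.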

How does AIDNE address data privacy and compliance?

The vision incorporates privacy-preserving strategies such as federated learning and differential privacy where suitable, complemented by robust access controls and audit trails. Compliance will depend on the specific application and jurisdiction, including requirements under regulations like the EU AI Act.

What are the primary risks to AIDNE’s vision?

Key concerns include designing viable incentives, safeguarding security and quality on a large scale, preventing governance capture, and adhering to regulatory standards. Effectively tackling these issues will require technical precision and community involvement.

Sources

Thank You for Reading this Blog and See You Soon! 🙏 👋
