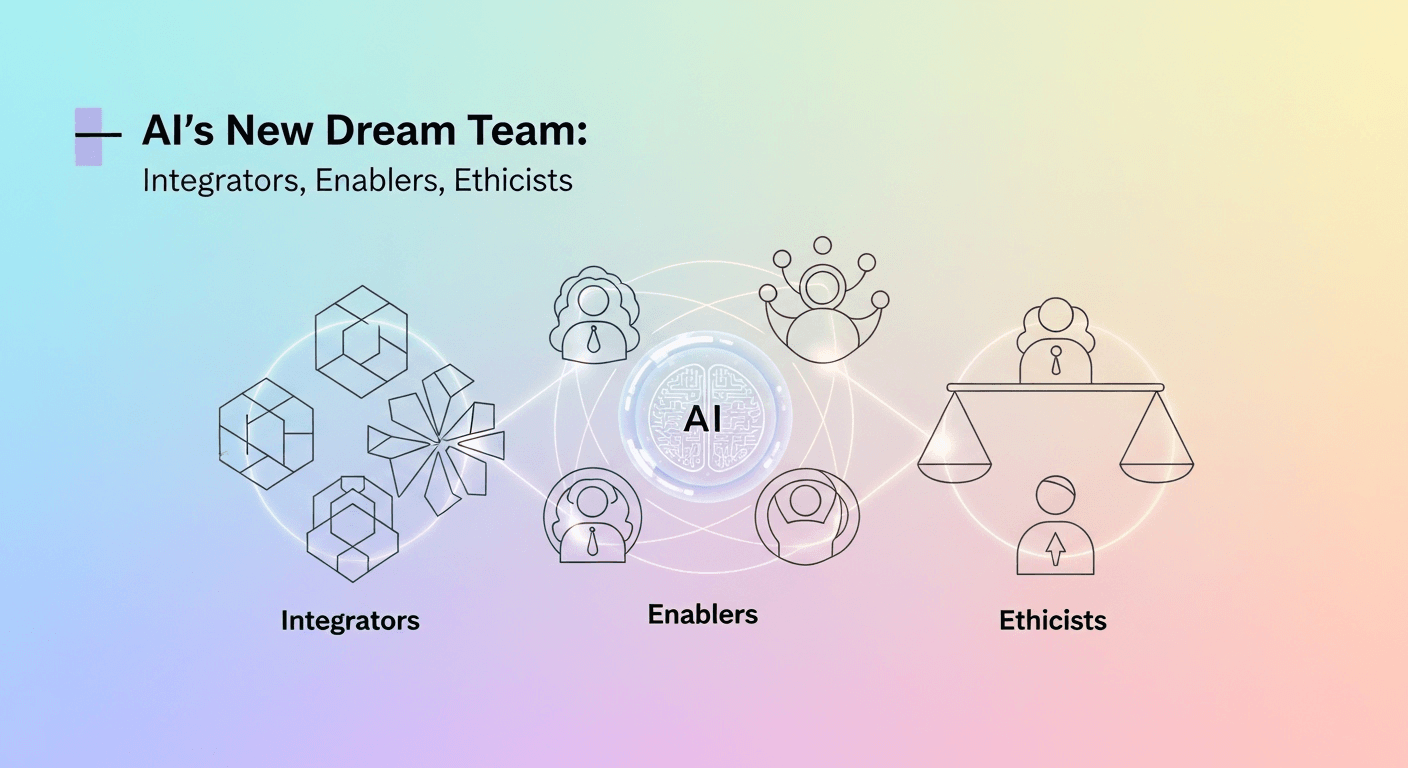

AI’s New Dream Team: Integrators, Enablers, and Ethicists

Introduction

If it seems like artificial intelligence (AI) has rapidly shifted from a lab curiosity to an essential business tool, you’re not mistaken. AI now enhances everything from medical image analysis to customer support and creative applications. As organizations transition from pilot projects to full-scale deployment, three key roles are emerging as critical to fostering responsible and valuable AI: the AI Integrator, the AI Enabler, and the AI Ethicist. Think of them as the builder, the booster, and the conscience of AI. Their responsibility is not to create new algorithms but to ensure that AI is effectively utilized, user-friendly, and trustworthy in real-world scenarios.

Why These Roles, and Why Now

Two major shifts explain this timely evolution. First, the business impact of AI has surged, with projections suggesting that generative AI and similar automation could significantly enhance productivity by transforming a large portion of daily tasks. Second, the expectations for compliance and safety are rising, necessitating that AI systems be developed, governed, and evaluated with the same diligence as any mission-critical system.

- On Impact: McKinsey estimates that current technologies could automate work activities that absorb 60 to 70% of employees’ time, and that generative AI could add 0.1 to 0.6 percentage points to annual labor productivity growth through 2040, provided organizations reinvest the freed-up time in higher-value work.

- On Adoption and Workforce Change: The World Economic Forum reports that nearly 75% of companies surveyed anticipate adopting AI, leading to considerable job shifts across functions over the next five years.

- On Governance: The EU’s AI Act begins applying in stages from 2025, with most provisions applying from August 2026, and accompanying guidance on general-purpose AI is intended to help companies comply. The legislation is influencing standards well beyond the EU.

- On Standards: NIST’s AI Risk Management Framework (AI RMF 1.0) and its Generative AI Profile offer practical, voluntary guidelines to help organizations implement trustworthy AI.

In this context, the roles of integrator, enabler, and ethicist form the backbone of AI’s new dream team.

The AI Integrator – The Builder

What They Do

AI Integrators bridge the gap between business strategy and actionable solutions. They focus on the complex task of integrating AI models and services into existing workflows, data streams, and systems to drive meaningful outcomes.

Typical Responsibilities Include:

- Identifying areas where AI can enhance core processes (e.g., underwriting, claims processing, supply chain management).

- Designing architecture for data access, feature stores, vector databases, and model deployment.

- Orchestrating the selection of models (whether utilizing vendor APIs, open-source models, or fine-tuned in-house solutions).

- Managing user onboarding, updating standard operating procedures, and measuring the effectiveness of AI applications.

- Ensuring a seamless transition from concept to reliable production systems.

Day in the Life: A Quick Scenario

Consider a regional insurer wanting to expedite claims processing without compromising accuracy or compliance. The integrator devises a retrieval-augmented generation (RAG) service that interprets claims notes, evaluates policy rules, and recommends next steps for adjusters. They establish secure data access, choose an auditable model, incorporate policy-sensitive prompts, and implement dashboards to monitor throughput, accuracy, and override rates. The process involves piloting, iterating, and scaling the solution.
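To make the scenario concrete, here is a minimal sketch of the retrieve-then-prompt step behind a RAG service. It assumes a tiny in-memory list of policy rules and naive word-overlap scoring in place of a real vector database. The names `POLICY_RULES`, `retrieve`, and `build_prompt` are hypothetical, the rule text is invented for illustration, and the model call itself is deliberately left out.

```python
import re

# Hypothetical policy snippets; a real system would load these from a governed store.
POLICY_RULES = [
    "Water damage from burst pipes is covered up to the policy limit.",
    "Flood damage from external water sources requires a separate flood rider.",
    "Claims filed more than 60 days after the incident need supervisor review.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(claim_note: str, rules: list[str]) -> str:
    """Assemble a prompt that grounds the model in the retrieved rules."""
    context = "\n".join(f"- {rule}" for rule in rules)
    return (
        "Use only the policy rules below to recommend a next step.\n"
        f"Policy rules:\n{context}\n"
        f"Claim note: {claim_note}\n"
        "Recommendation:"
    )


claim = "Burst pipes caused water damage in the kitchen."
prompt = build_prompt(claim, retrieve(claim, POLICY_RULES))
print(prompt)
```

Keeping retrieval and prompt assembly as separate functions makes it easier to log which rules informed each recommendation, which supports the auditability the scenario calls for.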

Core Skills and Tools

- Technical Proficiency: Python, APIs, event-driven architecture, cloud services, containerization, and CI/CD for ML (MLOps/LLMOps).

- Data Expertise: Schema design, data quality, lineage, and privacy management.

- Product Acumen: Understanding outcome metrics, user experience design, and feedback mechanisms.

- Security and Reliability: Secrets management, model monitoring, drift detection, and fail-safes.

- Collaborative Skills: Coordinating with product, legal, security, data, and frontline teams.

Security by design is non-negotiable. Government agencies in the US, UK, and allied nations have jointly released guidelines for secure AI development that emphasize threat modeling, supply-chain risk, and protecting AI assets across their lifecycle.

KPI Examples

- Cycle time from concept to production.

- Uptime and latency service level objectives (SLOs) for AI services.

- Improvement in accuracy or quality relative to baseline.

- User adoption metrics and override rates.

- Cost-to-serve per task (inference and operational costs).
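Two of these KPIs are simple to compute once each task is logged. The sketch below assumes a hypothetical `TaskRecord` with made-up cost figures; the field names and the split between inference and operations cost are illustrative, not a standard schema.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """One AI-assisted task; all fields are illustrative."""
    accepted: bool          # True if the human kept the AI recommendation
    inference_cost: float   # model/API cost for this task, in dollars
    operations_cost: float  # allocated platform and review cost, in dollars


def override_rate(records: list[TaskRecord]) -> float:
    """Share of tasks where a human overrode the AI recommendation."""
    if not records:
        return 0.0
    overridden = sum(1 for r in records if not r.accepted)
    return overridden / len(records)


def cost_to_serve(records: list[TaskRecord]) -> float:
    """Average total cost per task (inference plus operations)."""
    if not records:
        return 0.0
    total = sum(r.inference_cost + r.operations_cost for r in records)
    return total / len(records)


records = [
    TaskRecord(accepted=True, inference_cost=0.02, operations_cost=0.10),
    TaskRecord(accepted=False, inference_cost=0.03, operations_cost=0.10),
    TaskRecord(accepted=True, inference_cost=0.02, operations_cost=0.10),
    TaskRecord(accepted=True, inference_cost=0.01, operations_cost=0.10),
]
print(f"Override rate: {override_rate(records):.0%}")
print(f"Cost-to-serve per task: ${cost_to_serve(records):.3f}")
```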

Breaking In

Software engineers and solutions architects should build skills in data engineering and MLOps, then ship one complete AI feature to production. Data scientists should deepen their understanding of systems design, APIs, and product metrics. In either case, collaborating early with security and legal teams will streamline later stages. NIST’s AI RMF and Generative AI Profile provide a useful vocabulary for discussing risk and controls.

The AI Enabler – The Booster

What They Do

Enablers operate the platform and create the playbook. They simplify the process for teams to work with AI, ensuring it is safe, efficient, and cost-effective. Where integrators focus on specific use cases, enablers provide the infrastructure: shared services, governance frameworks, training, procurement strategies, and financial oversight.

Core Responsibilities

- Develop and manage an internal AI platform (which includes data connectors, vector stores, model interfaces, prompt libraries, and evaluation frameworks).

- Define effective procedures and reference architectures for common use cases such as RAG, classification, and summarization.

- Provide integrated risk and compliance templates that align with your software development lifecycle (SDLC), encompassing threat modeling and data protection assessments.

- Host training sessions to promote AI literacy across various teams.

- Oversee vendor partnerships and strategic capacity planning (including GPU and accelerator strategies).

Organizational Insights

Many organizations establish an AI Center of Enablement (or Excellence) to synchronize these efforts. Notably, a 2024 poll from Gartner reveals that the majority of organizations employ an AI leader, with over half having some form of AI governance board, even if most do not designate a formal “Chief AI Officer” title.

The Chief AI Officer Question

Does your organization need a Chief AI Officer? The answer depends on factors such as size, goals, and regulatory concerns. While the role is gaining prominence, most organizations still assign an AI leader without introducing a new C-level position. However, there must be someone responsible for driving strategy, governance, and execution.

KPI Examples

- Time Saved for product teams using shared resources.

- Percentage of AI projects that adhere to established guidelines.

- Mean time for detecting and resolving model-related incidents.

- Training completion rates and improvement in AI literacy.

- Cost per 1,000 calls depending on the model and its application.
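The last KPI, cost per 1,000 calls broken down by model and application, can be derived from a usage log. This is a rough sketch that assumes each log entry is a `(model, use_case, cost)` tuple; the model names and dollar amounts are invented for illustration.

```python
from collections import defaultdict

# Hypothetical usage log entries: (model, use_case, cost_in_dollars).
calls = [
    ("small-model", "summarization", 0.002),
    ("small-model", "summarization", 0.004),
    ("large-model", "rag", 0.030),
    ("large-model", "rag", 0.050),
    ("large-model", "rag", 0.040),
]


def cost_per_thousand(calls):
    """Return the average cost per 1,000 calls for each (model, use_case) pair."""
    totals = defaultdict(lambda: [0.0, 0])  # key -> [total cost, call count]
    for model, use_case, cost in calls:
        entry = totals[(model, use_case)]
        entry[0] += cost
        entry[1] += 1
    return {key: 1000 * total / count for key, (total, count) in totals.items()}


for (model, use_case), cost in cost_per_thousand(calls).items():
    print(f"{model} / {use_case}: ${cost:.2f} per 1,000 calls")
```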

Breaking In

Individuals with a background in platform engineering, DevOps, or data engineering are well-positioned to enter this field. Expanding knowledge in model interfaces, vector databases, prompt management, and evaluation tools is crucial. Engaging with guidance from agencies such as CISA, NCSC, and NSA on secure AI deployments will help incorporate necessary safeguards from the outset.

The AI Ethicist – The Conscience

What They Do

Ethicists play a crucial role in anticipating and addressing potential harms, safeguarding rights, and maintaining the social license to operate. They integrate responsible AI practices into daily operations, treating them as essential design considerations from the beginning.

Typical Responsibilities Include

- Defining ethical principles (fairness, privacy, transparency) and translating these into actionable engineering requirements.

- Conducting reviews prior to deployment, alongside bias testing and red teaming exercises.

- Managing documentation (model cards and system cards), incident responses, and escalation procedures.

- Collaborating with legal teams on regulatory compliance and disclosure requirements.

- Advising on matters of data sourcing, consent, copyright, and auditing obligations.

Why This Matters Now

Laws and standards are evolving. The EU AI Act prohibits certain practices and imposes strict rules on high-risk AI systems, with obligations phasing in from 2025 and most of them applying by August 2026. In the US, even amid the policy changes of 2025, the message is clear: demonstrate safety and accountability to avoid conflicts with customers, partners, and regulators.

Standards to Know

- NIST AI RMF 1.0: A widely accepted, voluntary framework for managing AI-related risks throughout the AI lifecycle.

- NIST Generative AI Profile: Practical enhancements for generative AI use cases.

- ISO/IEC 42001: Requirements specific to AI management systems (AIMS).

These resources provide checklists and a common language for product teams and compliance departments.

Red Teaming and Evaluation

AI failure modes are rapidly changing. Engaging in red teaming and continuous evaluations helps identify vulnerabilities that static assessments may overlook. Recent guidance underscores the necessity for iterative and adversarial evaluations rather than one-time assessments.

KPI Examples

- Depth and breadth of pre-deployment evaluations conducted.

- Proportion of models that have documented model cards and data sources.

- Trends in the number and severity of noted risk exceptions over time.

- Response time for resolving issues identified in audits or red-team exercises.

- Indicators of user trust, including complaint rates and transparency requests.

Breaking In

For compliance, privacy, UX, social science, or policy professionals, adding technical knowledge of model behavior and evaluation criteria can enhance effectiveness. Learn how to conduct bias tests, run data ablation studies, and work with security to identify misuse scenarios. The goal is to embed ethical considerations into the workflow from the outset: making the right choice the default.
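As one illustration of a bias test, the sketch below compares approval rates across groups, a check often called demographic parity. The data, the group labels, and the 0.1 review threshold are all made up for illustration; the right metric and threshold depend on the use case and the applicable law.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is a list of (group, approved) pairs.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: approved / total for group, (approved, total) in counts.items()}


def parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Illustrative data only.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(decisions)
gap = parity_gap(rates)
REVIEW_THRESHOLD = 0.1  # assumed threshold, not a regulatory value
print(rates, f"gap={gap:.2f}", "needs review" if gap > REVIEW_THRESHOLD else "ok")
```

A single gap number is only a starting point. It flags where to look, and the follow-up work is understanding why the rates differ.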

How the Roles Work Together

- The Integrator: Owns the outcome of a specific use case. They define the problem to solve, build the architecture, and deliver the solution.

- The Enabler: Manages the infrastructure and resources. They provide the tools, standards, and training that facilitate safe and efficient production.

- The Ethicist: Upholds the ethical framework. They implement safeguards and cultivate accountability so the business can expand while maintaining trust.

In practice, senior leaders may take on multiple roles. For instance, a product leader may function as an integrator for one area and an enabler for another by promoting shared tools. In smaller companies, the ethicist role may rotate but be based on clear policy templates and external audits. The essential factor is clarity on responsibilities and decision-making authority.

From Pilots to Production: A Practical Path

- Select the Right Use Cases: Prioritize measurable tasks adjacent to existing processes where AI can enhance human efforts. Understand your baseline and the metrics for success.

- Create Golden Paths: Develop reusable frameworks for RAG, classification, and summarization, ensuring built-in evaluation tools.

- Instrument from Day One: Record prompts, responses, and human feedback with appropriate privacy measures; set up review workflows for sensitive decisions.

- Govern Proportionally: Apply a risk-managed approach aligned with NIST guidance and the regulations applicable in your target markets. Document throughout the process.

- Secure the Lifecycle: Implement secure-by-design practices across data handling, model deployment, and the supply chain. Regularly update security measures.

- Train the Team: Offer role-specific training for users, approvers, developers, and executives to enhance understanding of AI capabilities and responsibilities.

- Audit and Adapt: Treat incidents as learning opportunities to refine prompts, data inputs, models, and controls. Regular red-team exercises should be scheduled.
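The "Instrument from Day One" step above could start as small as the following sketch: a function that builds one structured log line per interaction, masks email addresses before storage, and flags sensitive decisions for human review. The field names and the `redact` helper are hypothetical, and a real deployment would cover many more identifier types than email.

```python
import json
import re
from datetime import datetime, timezone

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(text: str) -> str:
    """Mask email addresses; real deployments would cover more identifier types."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


def make_log_record(prompt: str, response: str, feedback: str, sensitive: bool) -> str:
    """Build a JSON log line with redacted text and a human-review flag."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prompt": redact(prompt),
        "response": redact(response),
        "human_feedback": feedback,  # e.g. "accepted" or "overridden"
        "needs_review": sensitive,   # route sensitive decisions to a reviewer
    }
    return json.dumps(record)


line = make_log_record(
    prompt="Summarize the claim from jane.doe@example.com",
    response="The claimant reports water damage.",
    feedback="accepted",
    sensitive=True,
)
print(line)
```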

Effective Team Structures

- Central Platform with Federated Delivery: A small enabling team manages shared services and governance while integrator groups within business units drive use case implementation. The ethicist enforces policies, conducts random audits, and trains evaluators.

- Targeted Teams for High-Impact Initiatives: Temporary integration of ethicists and security specialists within an integrator team can expedite safe solution delivery. Successful practices can then be scaled into broader platforms.

- Leverage Vendors but Control the Core: Utilize external models or APIs but maintain control over your data pipelines, guardrails, and evaluation tools internally. This approach minimizes dependency and maximizes visibility.

Recruitment Guidance and Sample Profiles

AI Integrator

- Background: Solutions architecture, data engineering, or product engineering.

- Portfolio: Experience in shipping AI-enhanced features with measurable outcomes.

- Interview Signals: Systems thinking, reasoning on tradeoffs, understanding of safety, and cost awareness, along with the ability to communicate with non-technical audiences.

AI Enabler

- Background: Platform engineering, DevOps/SRE, data engineering.

- Portfolio: Experience in creating internal components for developer platforms and automation.

- Interview Signals: Enthusiasm for automation and golden paths, an understanding of cost and reliability, and concrete stories of enabling cross-functional teams.

AI Ethicist

- Background: Policy, compliance, privacy, UX research, risk auditing.

- Portfolio: Experience in conducting risk assessments, model evaluations, and incident response management.

- Interview Signals: Pragmatism, familiarity with industry standards, and the ability to make compliance measures usable for developers.

Tooling Overview (Illustrative)

- Data Management: Lakehouse, feature store, vector database.

- Model Access and Routing: API gateways, connectors for commercial and open models, safety middleware.

- Evaluation: Offline testing frameworks, human feedback loops, red-team exercises.

- Observability: Logging systems, drift and quality monitoring, feedback dashboards.

- Governance: Model registries, model cards, risk assessments, approval workflows.

- Security: Identity and access management, secrets rotation, vulnerability scanning, isolation for sensitive workloads. Use the secure AI guidelines issued by allied governments as a checklist.

Regulatory Context: Changes Since 2025

In early 2025, US federal policy shifted: the prior executive order on AI safety was revoked, and agencies were directed to develop a new strategic direction. Even so, sector-specific regulations, local laws, and international frameworks like the EU AI Act continue to shape governance and transparency expectations. Organizations should prepare for a multi-jurisdictional regulatory landscape.

FAQs

Q1: Do we really need three separate roles?

Not necessarily. In smaller teams, one person may cover the responsibilities of integrator, enabler, and ethicist. The critical point is that all three sets of responsibilities are explicitly assigned and visible: delivering results, facilitating safe reuse, and preserving trust.

Q2: How do these roles relate to data scientists and ML engineers?

Data scientists and ML engineers focus on building and refining models. Integrators turn those models into tangible business outcomes, enablers provide the foundational platform, and ethicists define and ensure the guardrails. Many professionals in this field cycle between these roles throughout their careers.

Q3: Are these roles only relevant to regulated industries?

No. Even consumer applications benefit from established governance, evaluations, and secure development practices. If your organization has customers, data, or models, there are inherent risks to manage and value to protect. Frameworks like NIST AI RMF and ISO/IEC 42001 provide guidance for any organization aiming to operationalize ethical AI.

Q4: How do we measure ROI for this dream team?

Identify a few leading indicators (time-to-market, reuse rates, evaluation thoroughness) alongside some lagging indicators (error rates, customer satisfaction, revenue increases, or cost reductions) to correlate performance improvements with team investments.

Q5: What about red teaming and model evaluations?

Integrate these evaluations into your regular schedule, akin to penetration tests. Employ adaptive adversarial testing methods to detect new failure modes. Track remediation times and retesting outcomes as key performance indicators (KPIs). Researchers emphasize that threats are constantly evolving, necessitating ongoing evaluations.

Closing Thoughts

Generative AI has made it easy to demonstrate impressive capabilities. Turning those capabilities into reliable, ethical, and cost-effective products, however, requires a new way of working. The AI Integrator delivers the results. The AI Enabler provides the platform and playbook that make delivery repeatable. The AI Ethicist safeguards trust. Together, they turn pilot projects into valuable, sustainable operations.

If your organization is serious about leveraging AI in 2025 and beyond, invest in this trio. Clearly define their roles, share success metrics, and empower them to act decisively when necessary. In doing so, you’ll cultivate scalable systems, enhance team learning, and foster trust with both customers and regulators.

Further Reading

- The Emerging Roles in Artificial Intelligence (original concept of these roles)

- NIST AI Risk Management Framework and Generative AI Profile

- EU AI Act timelines and guidance for general-purpose AI

- Joint secure AI development and deployment guidelines
